Hey @Alphanu, thanks for all the work on the res switching features. I love the idea of not having to configure custom aspect ratios for every core. A RetroArch that works similarly to GroovyMAME is awesome.

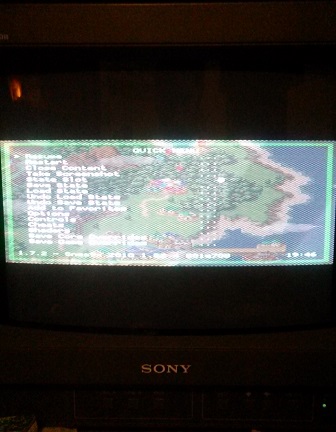

I’m having a bit of trouble, however. Res switching seems to fail to trigger at random. I’m trying to figure out what causes it: it will work once, and then the next time I launch the same game on the same core with the same settings, it runs at 480i (2560x480?) instead.

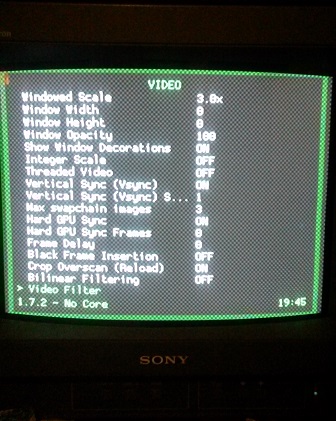

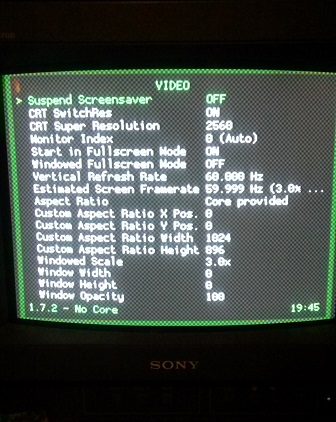

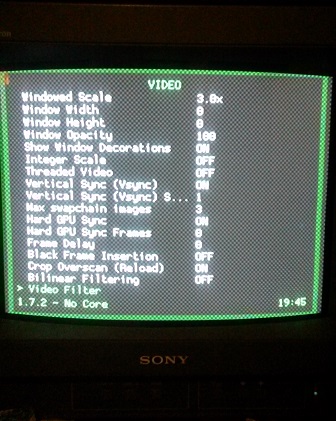

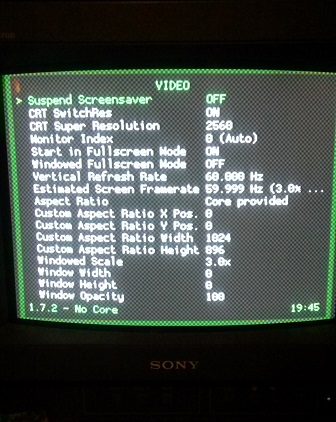

Settings:

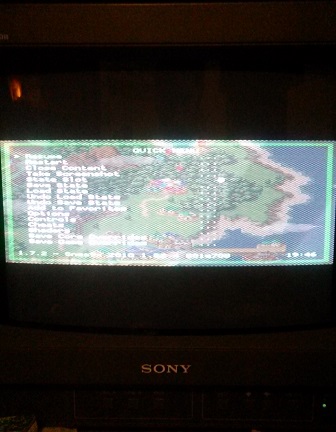

Example:

I’m also not quite clear on what’s going on with the scaling for certain ROMs (GBA games on the mGBA core, for instance). It seems to be scaling by a non-integer factor. I like to use the health bar in Mega Man games as a quick reference, and it looks very garbled.

Also, how does this deal with 50 Hz games? Admittedly, the only one I want to play is the European version of Sonic CD (for the music), but it runs much slower than it should. Since the display mode can be switched as needed, is there any way to run it at the intended speed?

Sorry to bombard you with issues; I just want to make sure I’m rigging everything up properly. I’m using the version compiled from your source. Again, thanks for all the work!