That’s what “exclusive” means. The program is given exclusive access to the video hardware.

Nope, because “desktop composition” is never bypassed; it’s integrated into Windows (fullscreen exclusive doesn’t kill Aero, check for yourself). The only way is to turn it off.

With Windows 8 and 10 it’s even worse, because composition is built right into dwm.exe and is no longer called Aero, and there is no option to turn it off as there is in Windows 7. The only way is to do it with RetroArch or to kill the dwm.exe process.

However, I think this problem concerns only DirectX and not the OpenGL or Vulkan drivers (for Steam games or emulators), probably because desktop composition is based on DirectX. In any case, for Steam games or emulators running under DirectX, it is certain that “desktop composition” adds input lag, so disabling it reduces the lag. It’s really visible in the Steam version of Mushihimesama.

Edit: important: “disable desktop composition” in RetroArch 1.3.6 seems broken; it does not disable Aero in Windows 7. This option works perfectly with version 1.0.0.2, but not with the new version. I don’t have Windows 8 or 10 to test it; if someone could check the behavior there, that would be good.

Wasabi, thanks a lot for the advice pertaining to Antimicro. Indeed I have been looking for a way to alter the input polling rate for USB controllers on Windows and it’s really difficult to find something that really achieves the desired effect.

Would you be able to verify or measure whether the program actually changes the poll rate for the entire system, so that it does affect Retroarch while running? From the documentation it would seem that the poll rate is modified exclusively for antimicro’s keyboard redirection, but I would be curious to understand if it’s actually making the whole OS read the inputs of that particular controller in a faster way.

As for disabling the desktop composition, it has been broken for quite a while on RA and I don’t think it ever worked on Windows versions higher than 7. Some users over at the Osu message boards have posted some interesting workarounds to temporarily suspend dwm.exe on Windows 10, including batch files that supposedly automate the process. I haven’t tested them yet since with Windows I’m always fearful that things would horribly break afterwards, but I’d be interested in exploring this further and shaving some more ms off.

Even though at this point it might seem like nitpicking and trying to achieve some mythical level of responsiveness, for certain competitive and arcade titles improving this aspect even by a slight extent is crucial…

[QUOTE=wasabi;53363]To reduce input lag, this is an important option: “disable desktop composition” on Windows. It’s extremely important to deactivate Windows Aero: http://www.humanbenchmark.com/tests/reactiontime Before: 240 ms with Aero; after disabling Aero: 180 ms!

And with Antimicro (https://github.com/AntiMicro/antimicro/releases) you can reduce input lag by setting the gamepad poll rate to 1 ms in option/setting (Antimicro must run in the background)…[/QUOTE]

Thanks a lot, man! I’ve tested this, and it had no or almost no impact on my living room emulation machine (Win10), but on my vert MAME cab (Win7) it takes shmup gaming to a new level. Antimicro detects the IPAC2 (the USB version, of course) out of the box! Looks like me and Battle Garegga messing around again… gosh, my nerves.

Thanks also to all involved in this thread who contributed tips & tricks! Sticky!!!

Battle Garegga… Now, that’s a game that really tests one’s reflexes!

Yes, it’s possible: assign a gamepad button with turbo to “keyboardtest”, start RetroArch windowed and deactivate “pause when menu activated” in RetroArch. You can then check that Antimicro is working.

It is the gamepad that is modified. You do not even need to emulate keys for the trick to work; just run Antimicro in the background with the 1 ms setting. Normally all USB devices are polled every 8 ms; you can also see that the keyboard is not affected by the trick if you press a key. This trick works like mouse gaming software, asking the CPU to poll the device more often; it just demands a little more CPU power. Finally, the effect is clearly visible if it gains you a frame. It may have no impact if, for example, you are at 26 ms, because 26 − 8 = 18 ms is still more than one frame, so in that situation you gain nothing. But if you start at 20 ms, you drop to 12 ms, below one frame, and gain a frame (1 frame = 16.67 ms).
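The frame arithmetic above can be sketched like this. It assumes, as a simplification, that a given latency "costs" ceil(latency / 16.67 ms) frames at 60 Hz. The helper names are hypothetical, and the 8 ms saving is simply the figure used in this post.

```python
import math

FRAME_MS = 1000 / 60  # one 60 Hz frame, about 16.67 ms


def frames_needed(latency_ms: float, frame_ms: float = FRAME_MS) -> int:
    """Number of whole frames a latency spills into (simplified model)."""
    return math.ceil(latency_ms / frame_ms)


def saves_a_frame(latency_ms: float, saving_ms: float, frame_ms: float = FRAME_MS) -> bool:
    """True if cutting saving_ms off latency_ms crosses a frame boundary."""
    return frames_needed(latency_ms - saving_ms, frame_ms) < frames_needed(latency_ms, frame_ms)


print(saves_a_frame(26, 8))  # False: 26 ms and 18 ms both land on frame 2
print(saves_a_frame(20, 8))  # True: 20 ms is frame 2, 12 ms is frame 1
```

In other words, a faster poll rate only pays off visibly when it pushes the total latency across a frame boundary.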

[QUOTE=Geomancer;53538] As for disabling the desktop composition, it has been broken for quite a while on RA and I don’t think it ever worked on Windows versions higher than 7. Some users over at the Osu message boards have posted some interesting workarounds to temporarily suspend dwm.exe on Windows 10, including batch files that supposedly automate the process. I haven’t tested them yet since with Windows I’m always fearful that things would horribly break afterwards, but I’d be interested in exploring this further and shaving some more ms off. [/QUOTE]

Okay, thanks. So in that case it’s better to look elsewhere; that’s one of the reasons I didn’t want to switch to Windows 8 or 10.

[QUOTE=sterbehilfe;53625]Thanks a lot, man! I’ve tested this, and it had no or almost no impact on my living room emulation machine (Win10), but on my vert MAME cab (Win7) it takes shmup gaming to a new level. Antimicro detects the IPAC2 (the USB version, of course) out of the box! Looks like me and Battle Garegga messing around again… gosh, my nerves.

Thanks also to all involved in this thread who contributed tips & tricks! Sticky!!![/QUOTE]

I am delighted to be able to help you (and thanks to the developer of Antimicro). With all these tricks, we now have a way to get the lowest latency possible. The games have a new flavor.

EDIT: OK, I have new information. I found a site that explains very well how it works: https://ahtn.github.io/scan-rate-estimator/

You can also test your controller on the same principle as “keyboardtest”. Also, JoyToKey can do the same thing as Antimicro (I prefer Antimicro, but the alternatives are good too). To activate it, go to setting/preference/internal/processing speed and choose 16x.

Here is what JoyToKey says about it: “By processing the input more frequently (by a factor of 2-16), latency will be shortened and very fast automatic repeat will become possible (max 60-500 times per second). However, CPU load will become much heavier, so please use with caution.”

After several searches and tests, the gain is definitely 4 ms (at 1000 Hz), rather than 8 ms (see the keyboard test link above for an explanation). That’s already good: it shortens the delay and can be enough to gain a frame. One last thing: I get no improvement with PS3 controllers; I cannot go below 8 ms. That is surely a technical limitation of the PS3 gamepad, as there is no problem with PS4 and Xbox 360 gamepads. I think it’s important to make this clear.
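A rough model that is consistent with the ~4 ms figure: if a button press lands at a random moment between two polls, it waits half a polling interval on average and a full interval in the worst case. The sketch below assumes 125 Hz (8 ms) as the default USB poll rate, as stated in the thread, and 1000 Hz for the 1 ms setting; it is an idealized model that ignores everything else in the input chain, and the function name is hypothetical.

```python
def polling_delay_ms(rate_hz: float) -> tuple[float, float]:
    """Return (average, worst-case) delay added by polling at rate_hz.

    Assumes presses arrive uniformly at random between polls, so on
    average an input waits half a polling interval.
    """
    interval_ms = 1000 / rate_hz
    return interval_ms / 2, interval_ms


avg_125, worst_125 = polling_delay_ms(125)     # default USB rate: 8 ms interval
avg_1000, worst_1000 = polling_delay_ms(1000)  # 1 ms interval

print(f"average: {avg_125} ms -> {avg_1000} ms")         # average: 4.0 ms -> 0.5 ms
print(f"worst case: {worst_125} ms -> {worst_1000} ms")  # worst case: 8.0 ms -> 1.0 ms
```

Under this model the average wait drops by 3.5 ms (close to the ~4 ms above), while the worst case drops by 7 ms.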

Happy new year!

I was testing it today, and I’m very surprised that in my case the lag is shortened. I’m using a PS3 controller through a USB Bluetooth dongle, and it works perfectly so far.

Arch Linux + RetroArch 1.5.1 in KMS mode + kernel-zen 4.10.4

bsnes-mercury-accuracy: 2 frames

I compiled Retroarch and its libretro cores with special flags + kernel zen.

Settings:

- VSync = on

- VSync swap interval = 1

- HardGPUSync = off

- Framedelay = 0

I’m finally back with results of an input lag test I promised to do long ago: A real SNES on a CRT.

This is of course interesting, since it provides a baseline to compare emulators against. It’s also interesting because many people are of the opinion that the SNES on a CRT provides close to zero input lag (or at least next frame latency).

The test setup

- SNES (PAL) connected to TV via RF output

- Original SNES controller rigged with an LED connected to the B button (same kind of mod as my previous tests)

- Super Mario World 2: Yoshi’s Island

- Panasonic TX-28LD2E

- iPhone SE filming at 720p and 240fps

The actual test was carried out as previous tests, i.e. filming the CRT and the LED together while jumping repeatedly with Yoshi. The test was carried out at the very beginning of the intro stage. The video was then manually analyzed, counting the number of frames between the LED lighting up and the character on screen responding.

Since this is a PAL console, the frame rate is 50 Hz instead of the 60 Hz used by NTSC. All results below have been converted to frames, making them universal. Of course, the PAL console will always have higher input lag in absolute terms, since one frame takes 20 ms instead of 16.7 ms on an NTSC console.
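A minimal sketch of the unit conversions involved. It assumes the raw measurement is a count of 240 fps camera frames (the post doesn’t spell out exactly how the count was converted to console frames), and the function names and the 24-frame example are hypothetical.

```python
CAMERA_FPS = 240  # iPhone SE slow-motion mode used in the test


def camera_frames_to_ms(camera_frames: int, camera_fps: float = CAMERA_FPS) -> float:
    """Convert a count of video frames into milliseconds."""
    return camera_frames * 1000 / camera_fps


def ms_to_console_frames(ms: float, refresh_hz: float) -> float:
    """Express a delay in console frames (50 Hz PAL, roughly 60 Hz NTSC)."""
    return ms * refresh_hz / 1000


lag_ms = camera_frames_to_ms(24)          # 24 camera frames = 100 ms
print(ms_to_console_frames(lag_ms, 50))   # 5.0 PAL frames (20 ms each)
print(ms_to_console_frames(lag_ms, 60))   # 6.0 frames at 60 Hz (~16.7 ms each)
```

At 240 fps, one PAL frame spans 4.8 camera frames, which is why the camera can resolve well below a single console frame.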

Hypothesis

Input lag when emulating the SNES varies from game to game. When emulating, most games seem to respond to input on either the second or third frame after applying the input. My hypothesis has long been that a real SNES would behave the same. My go-to game for testing the SNES is Yoshi’s Island and when emulating, this game responds to input on the third frame. My test scene is the beginning of the intro level, where Mario and Yoshi are located about 70% down from the top of the screen. This gives an expected input lag of:

- 0.5 frames on average from applying the input until it is read in the vblank interval

- 2 frames where nothing happens

- 0.7 frames until the electron beam reaches Mario+Yoshi, scanning out the third frame after reading the input

= 3.2 frames of input lag (53 ms on an NTSC console and 64 ms on a PAL console)
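The hypothesis can be written as a small additive model. This is a sketch of the reasoning above, not a general SNES timing model: it assumes input arrives uniformly within a frame (hence the 0.5), that the game reacts on a fixed frame after the read, and that the beam position is given as a fraction of the screen height. The function name is hypothetical, and NTSC is treated as a nominal 60 Hz.

```python
def expected_lag_frames(response_frame: int, beam_fraction: float) -> float:
    """Expected input lag in frames under the hypothesis above.

    response_frame: the frame after the input is read on which the game reacts
    beam_fraction: how far down the screen the character is (0 = top, 1 = bottom)
    """
    wait_for_read = 0.5               # average wait until the vblank read
    idle_frames = response_frame - 1  # frames where nothing visible happens
    return wait_for_read + idle_frames + beam_fraction


lag = expected_lag_frames(response_frame=3, beam_fraction=0.7)
print(round(lag, 1))           # 3.2
print(round(lag * 1000 / 60))  # 53 (ms at a nominal 60 Hz)
print(round(lag * 20))         # 64 (ms at 50 Hz)
```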

The results

For comparison, I’ve included my dedicated RetroArch box as well, which consists of:

- HP Z24i IPS LCD monitor

- Asrock J4205-ITX (Pentium J4205)

- 4GB DDR3-1866

- Integrated Intel HD graphics

- RetroLink SNES replica controller (USB) rigged with an LED connected to the B button

- Ubuntu 16.10

- RetroArch 1.5.0 in KMS mode

- Snes9x2010

- Settings (which affect input lag): video_max_swapchain_images=2, video_frame_delay=8

- Vsync enabled

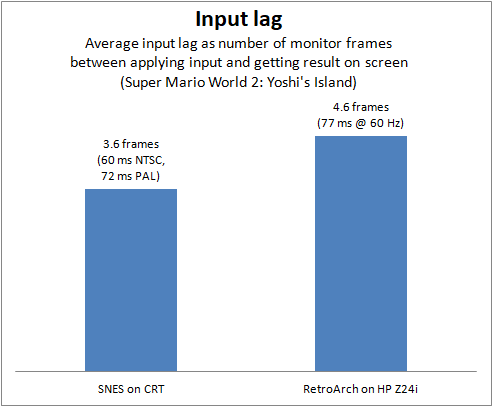

Below is a chart showing average input lag over 25 attempts:

So, there you have it. 3.6 frames is 60 ms on an NTSC console and 72 ms on a PAL console. 4.6 frames is 77 ms on the RetroArch setup. The test confirmed my hypothesis (more or less). This also means that achieving input lag on an emulator box (with an LCD monitor) similar to the real thing is quite possible. A one-frame difference is minuscule and unlikely to be felt or to otherwise have a noticeable adverse effect on gameplay.

Additional notes

It’s interesting that the input lag on the real SNES is actually slightly worse than I expected, by around 0.4 frames. This difference seems consistent. However, I did notice something which might cause this effect. When analyzing the video, it was easy to determine where the electron beam was when the LED lit up. I assumed that the controller state was read the next time the electron beam reached the bottom of the screen (vblank) and then counted how many SNES frames it took after that vblank until the character on screen jumped.

The fastest result I recorded was that the character responded on the third frame (as expected). Full statistics:

Out of 25 jumps:

- 19 responded on frame 3

- 6 responded on frame 4

As you can see, approximately 1/4 of the attempts resulted in one frame of additional lag before the character moved. My hypothesis on this is that it has something to do with the controller itself and how quickly it latches the input from the button. It seems the additional lag was more likely when the input came close to vblank, indicating that this could be caused by the controller not registering the input in time for vblank when the console reads it. I don’t know enough about the workings of the SNES controller to say for sure, though, so at the moment it’s just a theory.
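The jump statistics can be summarized with a few lines of arithmetic. On their own, the 6 late responses out of 25 add 6/25 = 0.24 frames to the average, compared with always responding on frame 3. This is just bookkeeping on the reported counts; it says nothing about what causes the late responses.

```python
from collections import Counter

# Frame on which the character responded, per jump (counts from the post)
responses = Counter({3: 19, 4: 6})

total = sum(responses.values())
late_share = responses[4] / total
mean_frame = sum(frame * n for frame, n in responses.items()) / total

print(total)       # 25
print(late_share)  # 0.24
print(mean_frame)  # 3.24
```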

Conclusion

I hope you enjoyed this post. Hopefully it will also put an end to people thinking that the SNES had no input lag at all (or next-frame latency). Keep in mind, though, that some games seem to react faster (or slower). For example, Super Metroid reacts one frame faster than Super Mario World and Super Mario World 2.

Ahhh, great info! That’s pretty exciting that we’re only 1 frame behind hardware already.

Thanks for going through the trouble to run these tests.

Amazing! How about RetroArch on a CRT? You can do it using the composite out on the Raspberry Pi. Maybe that will even shave off the last frame.

Why choose SNES9x2010? Does the SNES core have much to do with latency?

Interesting. Did you use the PAL Yoshi’s Island ROM with RetroArch? Wouldn’t it have been better to use the bsnes core, since it has less latency?

Ideally, I’d like to test my J4205 system using a CRT monitor, but I don’t have access to one anymore. But a Raspberry Pi test on the TV could also be interesting.

I use it on my comparatively weak J4205 system because it offers enough compatibility and allows me to use some frame delay. There’s no difference in latency between snes9x2010, snes9x and any of the bsnes cores.

The results for the J4205 system were done previously (late last year) and I used the NTSC ROM. There’s no difference in latency between the bsnes cores and snes9x2010. Where did you hear that?

I believe composite out on a Pi adds some processing, but using the GPIO is better. Something like this: http://www.rgb-pi.com/ (there are a few different third-party solutions, and maybe one is better than the others)

Some discussion on this here: https://github.com/raspberrypi/firmware/issues/683

I’m not entirely sure where I got the idea that composite adds processing vs. GPIO; that is likely wrong. I suppose with the latency test setup you could find out.

Thanks again, Brunnis, for this test. I think I have to try a real PC instead of the Pi 3. On the Pi 3 it never felt really smooth to me, but maybe my TV has too much lag.

Do you recall what the situation was with Raspberry Pi input lag re: Dispmanx vs. GL(ES) vs. DRM?

I recall Dispmanx was previously a frame or so better than GL(ES), but then there was a DRM driver that may or may not be viable (I guess it needs the v4 KMS driver to be the default in Raspbian?). Plus, I see that the Dispmanx driver had some recent changes regarding buffering that could affect things.

If you still have a Pi setup, it might be worth retesting. It would be nice to have some sort of definitive answer.

BTW, thanks for all of this! Still the best input lag investigation around. There are so many myths around input lag and emulation.


The Dispmanx and DRM drivers are one frame faster than the default GLES driver on the Raspberry Pi. Those two drivers match the fastest drivers I’ve tested on the PC side. However, the PC can improve input lag by 1-2 additional frames, since it’s fast enough to use video_max_swapchain_images=2 (improves input lag by one frame) and video_frame_delay (improves input lag by 0-0.9 frames). In the case of my Pentium J4205 system, it’s fast enough to improve the input lag by ~1.5 frames over the RPi.
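The ~1.5-frame figure can be roughly reproduced with a simple additive model. It assumes, as described above, that video_frame_delay saves its value in milliseconds (the core runs that much later after vsync, so input is sampled later) and that video_max_swapchain_images=2 saves one whole frame. Plugging in the J4205 settings listed earlier (video_max_swapchain_images=2, video_frame_delay=8) gives about 1.48 frames. The function names are hypothetical; this is arithmetic, not RetroArch code.

```python
def frame_delay_gain(frame_delay_ms: float, refresh_hz: float = 60.0) -> float:
    """Frames saved by video_frame_delay: input is sampled frame_delay_ms later."""
    return frame_delay_ms * refresh_hz / 1000


def estimated_gain(frame_delay_ms: float, max_swapchain_images: int,
                   refresh_hz: float = 60.0) -> float:
    # Per the post, video_max_swapchain_images=2 saves one frame; treating
    # every other value as "no gain" is a simplification for this sketch.
    swapchain_gain = 1.0 if max_swapchain_images == 2 else 0.0
    return swapchain_gain + frame_delay_gain(frame_delay_ms, refresh_hz)


print(round(frame_delay_gain(15), 2))  # 0.9  (near the practical maximum)
print(round(estimated_gain(8, 2), 2))  # 1.48 (the J4205 settings)
```

The model also shows why frame delay tops out below one frame: at 60 Hz the whole frame is only ~16.7 ms, and the core still needs time to emulate it.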

The Dispmanx driver is slightly faster than the DRM driver (processing-wise), which means that in some situations the DRM driver will run at less than 60 FPS while the Dispmanx driver runs at full frame rate.

The recent changes that were made shouldn’t affect input lag. I can see that the Dispmanx driver was changed in February to disable triple buffering completely, for stability reasons. That was bad for performance, since it’s the same as using video_max_swapchain_images=2, and the RPi is too slow to handle it. I can see this was reverted on April 29, though, so the Dispmanx driver should now be back to how it worked previously (when I did my tests).

Here are approximate input lag results (based on previous testing), using my HP Z24i monitor, snes9x2010 and Yoshi’s Island:

- Raspberry Pi with default BCM (GLES) video driver: 7.2 frames

- Raspberry Pi with Dispmanx video driver: 6.2 frames

- Raspberry Pi with DRM video driver: 6.2 frames

The figures above can be improved in the case of the Dispmanx and DRM drivers, using video_max_swapchain_images=2 and/or video_frame_delay. Both are likely to cause performance issues, though. This is where more powerful x86 hardware comes in handy and it’s the reason I built my dedicated Pentium J4205 system for RetroArch. That’s still not a super-fast system, but it’s much faster than the RPi and it’s completely passive.

I was basing this on your first input lag investigation, where you say “However, testing on Windows suggests that bsnes-mercury-balanced is quicker than snes9x-next”. However, I didn’t look closely enough at your updated input lag investigation, where you improved your test methodology, and I can see that in the new tests, input lag for Yoshi’s Island with Snes9x-next and bsnes-mercury-balanced is basically the same.

Note that with the way the Dispmanx driver works now, this no longer holds true. “Triple” buffering is really just double buffering: the third buffer is never used, so video_max_swapchain_images=2 is equivalent to video_max_swapchain_images=3 performance-wise.

I’m not sure why it allocates the useless third buffer; I just went in and fixed the race conditions so I could turn on the “triple” (actually double) buffering support again, since after the revert Dispmanx was unusably slow.

In the end, the dispmanx driver is quite wonky but it works well enough for low latency 8 bit era console emulation, which is really all I personally use my RPI3 for. I was tempted to do a full rewrite of the driver, including text rendering support for retroarch messages, but I’m not sure it’s really worth the time.

For anything 16/32 bit, folks should really just get a low end intel. The RPI3 is certainly cheap, but it provides a compromised experience for anything other than 8 bit console emulation.

EDIT: To be clear, when I say compromised, I’m referring to input lag. If you don’t care/notice input lag, the RPI3 can perform quite well.

EDIT 2: I went ahead and fixed the useless 3rd buffer thing and restored “max_swapchain_images” to the way it (correctly) worked originally: https://github.com/andrewlxer/RetroArch/commit/d0b54f97aa8ca30e0fbf0b2f7f71eff89f100d83

I’ll submit upstream after some stability testing.