I’m finally back with results of an input lag test I promised to do long ago: A real SNES on a CRT.

This is of course interesting, since it provides a baseline to compare emulators against. It’s also interesting because many people are of the opinion that the SNES on a CRT provides close to zero input lag (or at least next frame latency).

The test setup

- SNES (PAL) connected to TV via RF output

- Original SNES controller rigged with an LED connected to the B button (same kind of mod as my previous tests)

- Super Mario World 2: Yoshi’s Island

- Panasonic TX-28LD2E

- iPhone SE filming at 720p and 240fps

The actual test was carried out the same way as my previous tests, i.e. by filming the CRT and the LED together while jumping repeatedly with Yoshi at the very beginning of the intro stage. The video was then analyzed manually, counting the number of camera frames between the LED lighting up and the character on screen responding.

Since this is a PAL console, the framerate is 50 Hz instead of the 60 Hz used by NTSC consoles. All results below have been converted to frames, making them universal. Of course, the PAL console will always have higher input lag in absolute terms, since one frame takes 20 ms instead of 16.7 ms on an NTSC console.
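For reference, the conversion between camera frames, console frames and milliseconds is simple arithmetic. Here is a minimal Python sketch of it. The helper names are made up for illustration, and the refresh rates are the nominal 50 Hz and 60 Hz figures used in this post:

```python
CAMERA_FPS = 240.0   # iPhone SE slow-motion capture rate
PAL_HZ = 50.0        # nominal PAL refresh rate
NTSC_HZ = 60.0       # nominal NTSC refresh rate (assumed to be an even 60 Hz)


def camera_frames_to_console_frames(camera_frames, console_hz=PAL_HZ):
    """Convert a count of 240 fps camera frames into console frames."""
    seconds = camera_frames / CAMERA_FPS
    return seconds * console_hz


def console_frames_to_ms(console_frames, console_hz):
    """Convert console frames into milliseconds at the given refresh rate."""
    return console_frames * 1000.0 / console_hz


# One camera frame is the smallest step the video can resolve.
resolution = camera_frames_to_console_frames(1)
print(f"timing resolution: {resolution:.2f} PAL frames per camera frame")
```

At 240 fps, each camera frame covers roughly a fifth of a PAL frame, which is the finest granularity the measurements in this post can have.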

Hypothesis

Input lag when emulating the SNES varies from game to game. When emulating, most games seem to respond to input on either the second or third frame after applying the input. My hypothesis has long been that a real SNES would behave the same. My go-to game for testing the SNES is Yoshi’s Island and when emulating, this game responds to input on the third frame. My test scene is the beginning of the intro level, where Mario and Yoshi are located about 70% down from the top of the screen. This gives an expected input lag of:

0.5 frames on average needed from applying input until reading it in the vblank interval

2 frames where nothing happens

0.7 frames until the electron beam reaches Mario+Yoshi, scanning out the third frame after reading the input

= 3.2 frames of input lag (53 ms on an NTSC console and 64 ms on a PAL console)
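The budget above can be written out as a small Python sketch. The function and parameter names are only illustrative, and NTSC is again treated as an even 60 Hz:

```python
def expected_lag_frames(wait_for_poll=0.5, idle_frames=2.0, scanout_fraction=0.7):
    """Sum the three parts of the expected input lag, in console frames.

    wait_for_poll:    average wait from button press until the vblank read
    idle_frames:      full frames in which nothing visible happens
    scanout_fraction: how far down the screen the character is (0.0 to 1.0)
    """
    return wait_for_poll + idle_frames + scanout_fraction


frames = expected_lag_frames()
for name, hz in (("NTSC", 60.0), ("PAL", 50.0)):
    ms = frames * 1000.0 / hz
    print(f"{name}: {frames:.1f} frames = {ms:.0f} ms")
```

Changing `scanout_fraction` shows how much the expected figure depends on where on the screen the character is: a character near the top of the screen would be drawn well over half a frame earlier than one near the bottom.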

The results

For comparison, I’ve included my dedicated RetroArch box as well, which consists of:

- HP Z24i IPS LCD monitor

- Asrock J4205-ITX (Pentium J4205)

- 4GB DDR3-1866

- Integrated Intel HD graphics

- RetroLink SNES replica controller (USB) rigged with an LED connected to the B button

- Ubuntu 16.10

- RetroArch 1.5.0 in KMS mode

- Snes9x2010

- Settings (which affect input lag): video_max_swapchain_images=2, video_frame_delay=8

- Vsync enabled

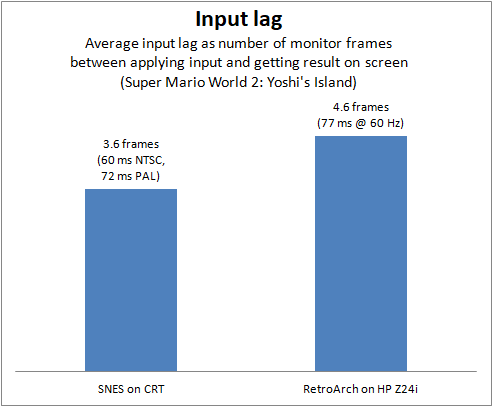

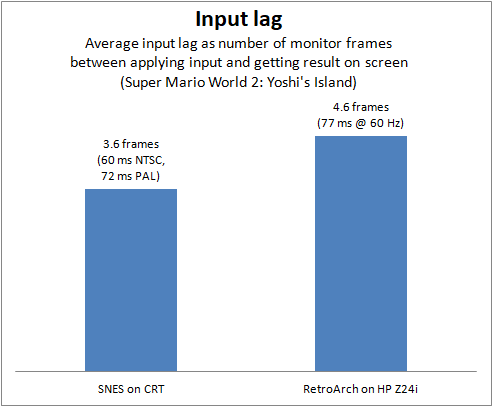

Below is a chart showing average input lag over 25 attempts:

So, there you have it. 3.6 frames is 60 ms on an NTSC console and 72 ms on a PAL console. 4.6 frames is 77 ms on the RetroArch setup (running at 60 Hz). The test confirmed my hypothesis (more or less). This also means that achieving input lag on an emulator box (with an LCD monitor) similar to that of the real thing is quite possible. A one-frame difference is minuscule and unlikely to be felt or otherwise have a noticeable adverse effect on gameplay.

Additional notes

It’s interesting that the input lag on the real SNES is actually slightly worse than I expected, by around 0.4 frames. This difference seems consistent. However, I did notice something which might cause this effect. When analyzing the video, it was easy to determine where the electron beam was when the LED lit up. I assumed that the controller state was read the next time the electron beam reached the bottom of the screen (vblank) and then counted how many SNES frames it took after that vblank until the character on screen jumped.
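To make the counting method concrete, here is a rough Python sketch of how the lag of a single jump breaks down under that assumption. It is a simplified model, not the exact procedure used on the video: the function name is hypothetical, the beam position is expressed as a fraction of the screen height, and the duration of the vblank interval itself is ignored.

```python
def lag_in_frames(beam_pos_at_press, response_frame, target_pos=0.7):
    """Estimate the total lag of one jump, in console frames.

    beam_pos_at_press: beam position when the LED lit up,
                       from 0.0 (top of screen) to 1.0 (bottom)
    response_frame:    1-based frame after the vblank read on which
                       the character visibly moved
    target_pos:        vertical position of the character (assumed 0.7)
    """
    if not 0.0 <= beam_pos_at_press <= 1.0:
        raise ValueError("beam position must be between 0.0 and 1.0")
    wait_for_vblank = 1.0 - beam_pos_at_press  # rest of the current scanout
    full_frames = response_frame - 1           # frames with no visible reaction
    return wait_for_vblank + full_frames + target_pos


# LED lights up mid-screen, character moves on frame 3 after the read.
print(lag_in_frames(0.5, 3))
# LED lights up close to vblank, character moves on frame 4.
print(lag_in_frames(0.9, 4))
```

With the beam at mid-screen and a response on frame 3, this model reproduces the 3.2 frames from the hypothesis.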

The fastest result I recorded was that the character responded on the third frame (as expected). Full statistics:

Out of 25 jumps:

- 19 responded on frame 3

- 6 responded on frame 4

As you can see, approximately 1/4 of the attempts resulted in one frame of additional lag before the character moved. My hypothesis on this is that it has something to do with the controller itself and how quickly it latches the input from the button. It seems the additional lag was more likely when the input came close to vblank, indicating that this could be caused by the controller not registering the input in time for vblank when the console reads it. I don’t know enough about the workings of the SNES controller to say for sure, though, so at the moment it’s just a theory.
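The tally above is easy to check with a few lines of Python. The list is rebuilt from the counts in this post rather than from the raw per-jump data, and the variable names are only illustrative:

```python
from collections import Counter

# Response frame for each jump, rebuilt from the tally:
# 19 jumps responded on frame 3, 6 jumps on frame 4.
responses = [3] * 19 + [4] * 6

counts = Counter(responses)
total = len(responses)

late_share = counts[4] / total                 # share of jumps with one extra frame
mean_response_frame = sum(responses) / total   # average frame of the visible response

print(f"late jumps: {counts[4]}/{total} = {late_share:.0%}")
print(f"mean response frame: {mean_response_frame:.2f}")
```

Note that the mean response frame only counts whole frames after the vblank read. It does not include the wait before the read or the scanout time down to the character, so it is not directly comparable to the average lag figures.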

Conclusion

I hope you enjoyed this post. Hopefully it will also put an end to people thinking that the SNES had no input lag at all (or next frame latency). Keep in mind, though, that some games react faster and others slower. For example, Super Metroid reacts one frame faster than Super Mario World and Super Mario World 2.