I recently acquired a SNES Classic Mini, mainly to get the controllers. I figured it could be interesting to use them on my other systems via the Raphnet low-latency classic controller to USB adapter (http://www.raphnet-tech.com/products/wusbmote_1player_adapter_v2/index.php). One thing that has bothered me for a while is that I haven’t been able to account for all the latency that I’ve measured in my tests so far. Subtracting all the known sources, there’s always been some lag left (around 0.7 frames). The sources could be:

- The HP Z24i display I’m using. Although it has been tested to have less than 1 ms input lag (yes, input lag, not response time or anything else), I have no way of confirming it.

- Operating system and driver overhead.

- Gamepad/controller.

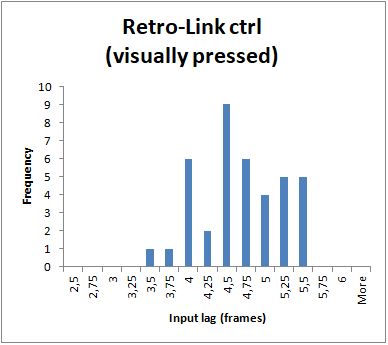

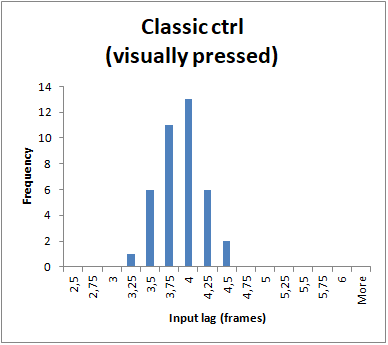

So, I got the adapters delivered a couple of weeks ago and have run a few tests. The results are intriguing. I’ll be comparing the results to my trusty (but crappy) LED-rigged Retro-Link controller that I’ve used for all my past tests. Since I have no intention of attacking the SNES Classic controllers with the soldering iron, I have not installed an LED on any of them. Instead, I’ve tested this controller by filming it from the side, in front of the screen, while pressing the jump button. To get a feeling for how much this affects the result compared to the LED method, I’ve chosen to test the Retro-Link controller in this way as well (in addition to using the LED). That way, we’ll be able to see how much the two methods differ. So, I’ll be reporting the following:

- Retro-Link controller: Time from LED lighting up until character reacts on screen

- Retro-Link controller: Time from button appears pressed down until character reacts on screen

- SNES Classic Mini controller: Time from button appears pressed down until character reacts on screen

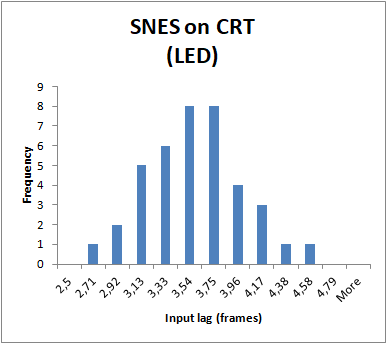

As a bonus, I’ll be including numbers for a real SNES on a CRT TV as well. I’ve shown results from this test previously, but I will include somewhat more detailed results today.

The Raphnet adapter

[Image courtesy of Raphnet Technologies]

Before we dive into the results, let’s take a quick look at the Raphnet Technologies Classic Controller to USB Adapter V2. This is a pretty serious adapter that is configurable via a Windows/Linux application and features upgradable firmware. One of the things that is configurable is how often the adapter polls the controller for input. Please note that this is not the same as the USB polling (more on that below). We have two components here: 1) How often the adapter polls the controller itself and 2) How often the computer polls the adapter (i.e. USB polling rate). On the single-player version of the adapter, the adapter can be set to poll the controller with a period as low as 1 ms (2 ms for the 2-player version).

As for the USB interface, one of the things that makes this adapter so good is that it is hard coded to use the fastest polling frequency the USB standard allows: 1000 Hz (as opposed to 125 Hz, which is the default used by pretty much all gamepads). This means that you don’t have to fiddle with operating system or driver hacks to use the faster polling rate. It just works. This fast polling rate more or less eliminates input lag caused by the USB interface, cutting the average input lag from 4 ms to 0.5 ms and the maximum from 8 ms to 1 ms.

If we combine the 1 ms USB polling interval with the 1 ms controller polling interval, we get a maximum lag of just 2 ms and a blistering average of 1 ms. [This actually depends on how these two poll events are aligned to each other, but those figures are worst case.]
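The polling figures above can be reproduced with a few lines of arithmetic. This is only a sketch: it assumes the button press lands at a uniformly random moment between two polls (so the average wait is half the interval), and that the two polling stages simply add up. The function name is made up for illustration.

```python
def poll_latency_ms(rate_hz: float) -> tuple[float, float]:
    """Return (average, worst-case) wait in ms until the next poll.

    Assumes the input event arrives at a uniformly random time
    within the polling interval.
    """
    interval_ms = 1000.0 / rate_hz
    return interval_ms / 2.0, interval_ms


usb_default = poll_latency_ms(125)   # typical gamepad: 8 ms interval
usb_fast = poll_latency_ms(1000)     # Raphnet adapter: 1 ms interval
controller = poll_latency_ms(1000)   # adapter polling the controller every 1 ms

# Worst-case combination of the two stages (adapter -> controller, PC -> adapter).
combined_avg = usb_fast[0] + controller[0]
combined_max = usb_fast[1] + controller[1]

print(f"USB 125 Hz:  avg {usb_default[0]} ms, max {usb_default[1]} ms")
print(f"USB 1000 Hz: avg {usb_fast[0]} ms, max {usb_fast[1]} ms")
print(f"Combined:    avg {combined_avg} ms, max {combined_max} ms")
```

With these assumptions the 125 Hz case gives 4 ms average and 8 ms maximum, the 1000 Hz case 0.5 ms and 1 ms, and the combined chain 1 ms and 2 ms.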

The hardware and software setup

I’ve used my iPhone 8 to record videos of the monitor and LEDs/controllers at 240 FPS. I’ve then counted the frames from the LED lighting up or button appearing pressed down until the character on screen reacts (jumps). The results presented further down are based on 39 samples for each test case. Below are screenshots of each test case. The test scene is the start of the very first level in Yoshi’s Island.
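Turning a count of camera frames into game frames or milliseconds is a plain unit conversion. A minimal sketch follows; the function names and the example count of 16 camera frames are hypothetical and not taken from the measurements.

```python
CAMERA_FPS = 240  # recording rate of the camera


def camera_frames_to_game_frames(camera_frames: int, game_fps: float) -> float:
    """Convert a count of camera frames into frames of the game being filmed."""
    return camera_frames * game_fps / CAMERA_FPS


def camera_frames_to_ms(camera_frames: int) -> float:
    """Convert a count of camera frames into milliseconds."""
    return camera_frames * 1000.0 / CAMERA_FPS


# Hypothetical sample: 16 camera frames between button press and on-screen jump.
sample = 16
print(camera_frames_to_game_frames(sample, 60))  # game frames at 60 FPS
print(round(camera_frames_to_ms(sample), 2))     # milliseconds

# Measurement resolution: one camera frame expressed in 60 FPS game frames.
resolution = camera_frames_to_game_frames(1, 60)
```

At 240 FPS against a 60 FPS game, one camera frame corresponds to 0.25 game frames (about 4.17 ms), so that is the finest step any single sample can resolve.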

Retro-Link controller:

SNES Classic controller:

Real SNES on CRT TV:

This time I used my Dell E5450 laptop for testing, instead of my 6700K desktop. This was out of convenience and I was not out to demonstrate absolute lowest lag anyway, but rather the difference between the Retro-Link and the Raphnet adapter + SNES Classic controller combo.

Dell E5450

- Core i5-5300U (Broadwell)

- 16 GB DDR3-1600 CL11 (1T)

- Windows 10 Version 1709 (OS version 16299.125)

- Intel GPU driver 20.19.15.4531 (with default settings)

- RetroArch nightly from November 12 2017

- HP Z24i 1920x1200 monitor, connected to the Dell laptop via DVI. Native resolution was used in all tests.

- Super Mario World 2: Yoshi’s Island (NTSC)

- snes9x2010

RetroArch settings

- Default, except:

- video_fullscreen = "true"

- video_windowed_fullscreen = "false"

- video_hard_sync = "true"

- video_frame_delay = "6"

- video_smooth = "false"

Raphnet Technologies ADAP-1XWUSBMOTE_V2

- Adapter configured to poll controller with 1 ms interval (fastest setting available)

And, just to be clear, vsync was enabled for all tests.

Finally, I’m including results from a real SNES as well. That setup consists of:

- SNES (PAL) connected to CRT TV (Panasonic TX-28LD2E) via RF output

- Original SNES controller rigged with an LED connected to the B button

- Super Mario World 2: Yoshi’s Island (PAL)

The results

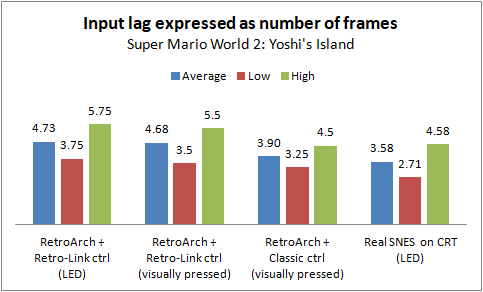

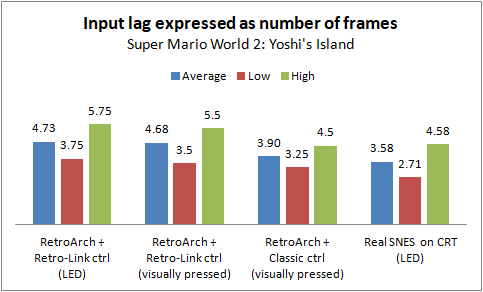

Without further ado, here are the results:

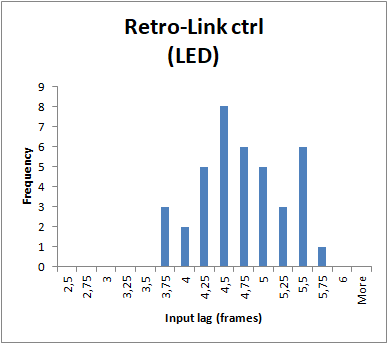

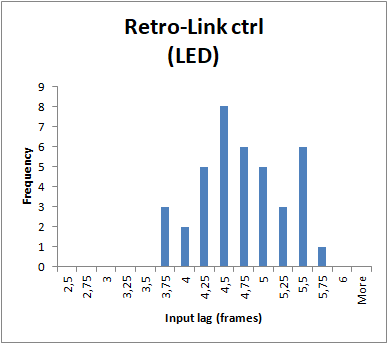

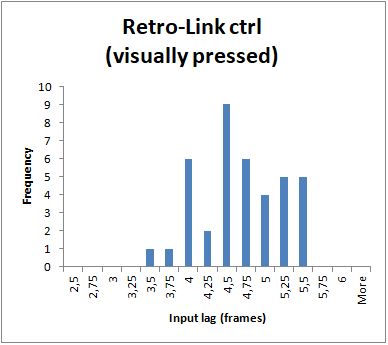

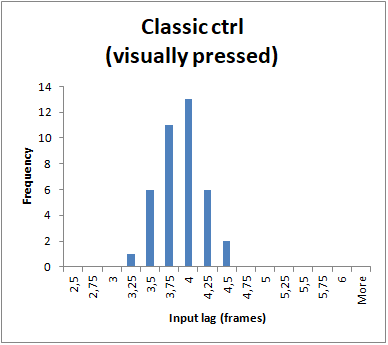

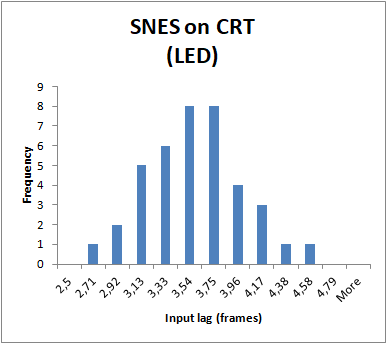

I’m also providing some charts showing the distribution of the samples for each test case:

(The reason for the “strange” looking values on the X axis of the last histogram above is that the SNES tested is a PAL console, so the ratio between the camera FPS (240) and console FPS (50) is different compared to the emulated test cases)
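To illustrate where those X-axis values come from, here is a small sketch that computes how much of a console frame one camera frame represents. The function name and the chosen range of camera-frame counts are just for illustration.

```python
from fractions import Fraction

CAMERA_FPS = 240


def step_in_console_frames(console_fps: int) -> Fraction:
    """Size of one camera frame, expressed in console frames."""
    return Fraction(console_fps, CAMERA_FPS)


ntsc_step = step_in_console_frames(60)  # emulated test cases (60 FPS)
pal_step = step_in_console_frames(50)   # real PAL SNES (50 FPS)

# Example tick values for a handful of camera-frame counts (arbitrary range).
ntsc_ticks = [float(n * ntsc_step) for n in range(14, 18)]
pal_ticks = [round(float(n * pal_step), 3) for n in range(14, 18)]

print(ntsc_step, ntsc_ticks)
print(pal_step, pal_ticks)
```

At 60 FPS each camera frame is exactly a quarter of a game frame, so the histogram bins fall on neat values. At 50 FPS the step is 5/24 of a frame, which gives the uneven-looking decimals.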

Result analysis

I don’t know how you feel, but I think these results are pretty awesome. I had suspected that the Retro-Link controller had some inherent lag, but this finally proves it. But let’s not get ahead of ourselves. First, let’s conclude that analyzing the lag by visually determining when the button is pressed seems to marginally understate the input lag. It only has a minuscule effect on the average (0.05 frames) but can affect the min/max numbers slightly more (0.25 frames). All in all, I’d still say that analyzing input lag this way is a decent method, although an LED is preferred.

With that out of the way, we can see that the Raphnet adapter + Classic controller shaves off 0.78 frames on average. We also see a clear tightening between the min/max numbers, going from a difference of 2 frames to just 1.25 frames. This is to be expected with the tighter polling.

Let’s take the measured average of 3.90 frames and subtract all the known sources of input lag. 3.90 frames is 65 ms at 60 FPS, so:

65 ms

- 0.5 ms (average until next controller poll)

- 0.5 ms (average until next USB poll)

- 8.33 ms (average until start of next frame)

- 50 ms (3 frames. Yoshi's Island has a built-in delay which means the result of an action is visible in the third frame.)

+ 6 ms (since we're using a frame delay of 6 in RetroArch)

- 11.67 ms (0.7 frames. This is how long it takes to scan out the image on the screen until it reaches the character's position in the bottom half of the screen.)

= 0 (!!!)

I was actually pretty shocked when I first did this calculation. It’s obviously a fluke that it pans out so perfectly, but even if the result had been off by a few ms in either direction, the conclusion would be the same: all delays of any significance have been accounted for.
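For anyone who wants to redo the subtraction with their own numbers, the budget above can be written out as a short script. This is just a sketch of the same arithmetic; the variable names are made up, and the values are the ones listed above.

```python
FRAME_MS = 1000.0 / 60.0  # one frame at 60 FPS, about 16.67 ms

measured_ms = 3.90 * FRAME_MS  # measured average, about 65 ms

# Known contributions in ms. The frame delay is negative because it
# postpones input polling within the frame and so reduces the lag.
budget = [
    ("average wait until next controller poll", 0.5),
    ("average wait until next USB poll", 0.5),
    ("average wait until start of next frame", FRAME_MS / 2),
    ("built-in delay in Yoshi's Island (3 frames)", 3 * FRAME_MS),
    ("RetroArch frame delay of 6", -6.0),
    ("scanout to the character's position (0.7 frames)", 0.7 * FRAME_MS),
]

accounted_ms = sum(ms for _, ms in budget)
unexplained_ms = measured_ms - accounted_ms

for name, ms in budget:
    print(f"{ms:8.2f} ms  {name}")
print(f"{measured_ms:8.2f} ms  measured")
print(f"{unexplained_ms:8.2f} ms  unexplained")
```

Swapping in a different measured average or frame delay shows right away how much lag would be left unexplained on another setup.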

With the above result, we can draw some conclusions:

- Contrary to popular belief, there doesn’t have to be any detrimental effect from using vsync. It doesn’t add any input lag on this test setup running RetroArch with these settings.

- The HP Z24i really does have negligible input lag, as once tested by Prad.de (they measured less than 1 ms). I have long believed this to be the case, but it’s nice to get strong evidence to support this. It’s also good to be able to show this, since some people, for some reason, still believe that LCD technology has this built-in input lag that cannot be eliminated. Some people have even made up stories about how HDMI itself adds input lag that, again, cannot be eliminated.

- Operating system and driver overhead is negligible. There’s obviously some time needed to process the input and make it available to the application, but it’s simply not significant enough to worry about. The results also don’t show any big fluctuations in input lag, proving that the operating system is able to poll and take care of the input in a fairly consistent manner.

These points go against a lot of the “common knowledge” that is repeated time and time again in input lag related discussions. My guess is that most of that “knowledge” is simply made up/assumed and the people that repeat this stuff haven’t really bothered to check if it’s actually true or of any significance.

So, what if we put the results into perspective and compare to a real SNES? First of all, the RetroArch results presented in this post could be further improved by simply increasing the frame delay setting. I used 6 because the laptop can’t handle any higher. My 6700K can handle a setting of 12. That would reduce the average by another ~0.36 frames, to just 3.54 frames. I don’t believe anyone has ever demonstrated input lag this low in SNES emulation previously.
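The estimate for a higher frame delay is simple to reproduce. The sketch below assumes that every extra millisecond of frame delay removes exactly one millisecond of input lag, which is the assumption behind the ~0.36 frames figure; the function name is hypothetical.

```python
def average_after_frame_delay(avg_frames: float,
                              old_delay_ms: float,
                              new_delay_ms: float,
                              fps: float = 60.0) -> float:
    """Estimate the average input lag (in frames) after changing frame delay.

    Assumes each extra ms of frame delay removes one ms of input lag.
    """
    frame_ms = 1000.0 / fps
    return avg_frames - (new_delay_ms - old_delay_ms) / frame_ms


# Measured 3.90 frames at frame delay 6; estimate for frame delay 12.
estimate = average_after_frame_delay(3.90, old_delay_ms=6, new_delay_ms=12)
print(round(estimate, 2))
```

Going from 6 to 12 saves 6 ms, which is 0.36 frames at 60 FPS, giving the 3.54 frames quoted above.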

What we see is that we can actually match the SNES’s average input lag, despite using an LCD monitor. Granted, when I’m analyzing the videos I stop counting as soon as I see a hint of the pixels changing, so you might want to add a few milliseconds to compensate for the pixel response time. While my monitor isn’t the fastest when it comes to response times, some LCD monitors can be pretty quick (I have not investigated the possibility of getting a monitor that has as low input lag as mine, but with faster response times, though).

The one area where the real SNES does have a small advantage is in minimum input lag. Theoretically, we could almost match this as well, but it would require us to max out the frame delay at 15 ms and that, in turn, would require a very fast computer. Not really practical. However, RetroArch with a frame delay of ~12 would be just 0.2 to 0.3 frames slower in minimum input lag and I’d go out on a limb and say that no one would notice the difference.

One caveat should be mentioned here, with regard to the tests of the actual SNES: The tested console is a PAL version. The input lag result presented earlier is reported as number of frames, with the assumption being that PAL and NTSC consoles would respond in the same number of frames. Obviously, the PAL version would respond slower in absolute terms, since it has a lower frame rate (each frame takes 20 ms, compared to 16.67 ms on the NTSC version). I have not seen anything to suggest that there would be any additional lag on a PAL console, other than that caused by the lower frame rate, but without testing an NTSC console myself I can’t guarantee that it would respond in the same number of frames. As I’ve mentioned in an earlier post, there is around 0.3 - 0.4 frames of input lag in the average result for the PAL SNES that I’ve been unable to account for. Maybe it’s there on the NTSC version as well and maybe not. Even if the NTSC console were to respond quicker than the PAL version, the window for improvement is just those 0.3 - 0.4 frames, so it wouldn’t fundamentally change things.

To round this post off, we can finally conclude that the old adage that “emulation is by definition laggy” isn’t really true. What is true is that emulation is often laggy to some degree, due to bad or incorrectly set up software, incorrectly chosen components (or component choices that were necessitated by other factors) or due to performance constraints, such as in the case of the Raspberry Pi and similar hardware. However, it’s good to see that it doesn’t have to be this way. It’s also good to see that there’s no exotic hardware or software needed. These tests have used a regular desktop monitor and laptop. Well, I guess the most exotic things here are the Raphnet adapter and the SNES Classic controller. However, Raphnet offer a version of their adapter for the original SNES controller, so that’s an easier/cheaper way for most to get this kind of fast input. It’s just a shame that manufacturers of USB controllers aren’t more serious and don’t at least offer the option of using a faster poll rate.

Thanks for reading!