That looks gorgeous!

Great to see frame time and deviation exposed so conveniently. I have a feeling I’ll be spending a lot more time playing with these stats than playing games.

Ah, possibly I misunderstood?

Is Frame Time supposed to display the time in ms that RetroArch spent to create the frame, or the time in ms that the frame spends on the screen?

The latest nightly on macOS seems to be showing time-on-screen: https://imgur.com/a/ngdYr

Just check the source code, I guess, to be absolutely sure. These values were previously reported at RetroArch exit if you invoked it from the command line; all I did was hook them up so they can be seen in-game.

I can clearly see that it’s not showing the time it took to create the frame.

The relevant times would be:

In case you’d like to improve its behavior through a pull request, here are some pointers:

gfx/video_driver.c (line 2399):

Here is where frame_time gets set.

Then, later on, we set video_info.frame_time here:

This is the value that gets used in the statistics.
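The two numbers being confused above can be told apart with a small sketch. This is not RetroArch's code: the function names are hypothetical, and timestamps are assumed to be microseconds from a monotonic clock. With VSYNC ON at 60Hz, the present-to-present interval stays near 16.7 ms no matter how quickly the frame was produced, which is why the stat looks like time-on-screen.

```c
#include <stdint.h>

/* Hypothetical helpers, not RetroArch's video_driver.c code.
 * All timestamps are microseconds from a monotonic clock. */

/* Interval between two successive presents: roughly how long the previous
 * frame stayed on screen. With VSYNC ON this tracks the refresh period. */
static int64_t frame_interval_us(int64_t prev_present_us, int64_t cur_present_us)
{
   return cur_present_us - prev_present_us;
}

/* Time spent producing one frame (emulation + rendering), measured before
 * any wait for VSYNC, so it reflects actual work rather than display pacing. */
static int64_t frame_work_us(int64_t work_start_us, int64_t work_end_us)
{
   return work_end_us - work_start_us;
}
```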

Good news! I found a way to do beam racing in a cross-platform manner (see video at bottom of post):

Two emulators have successfully implemented the BlurBusters lagless VSYNC ON experiment (via tearingless VSYNC OFF), so the approach has now been validated:

– Toni’s WinUAE now has real-time beam chasing: 40ms of input lag reduced to less than 5ms! http://eab.abime.net/showthread.php?t=88777&page=8

– Calamity’s experimental (unreleased) GroovyMAME patch, with same-frame lag:

Related developer-oriented forum thread (read all forum pages)

Toni made his beam racing compatible with GSYNC and FreeSync, with my help, via fast-beamracing. So you can have even less lag with VRR. VRR still scans top-to-bottom, just faster. So the emulator CPU runs ultra-fast (e.g. 4x faster) whenever a refresh cycle is scanning out (e.g. a 1/240sec top-to-bottom scanout of a “60Hz” refresh cycle). It’s like Einstein, where everything is relative: it’s still synchronizing 1:1 between emulated raster and real-world raster, just with faster scanouts followed by longer pauses between refresh cycles. As a result, if you combine VRR + fast-beamracing, you can have slightly less input lag than the original device being emulated. Beam racing can also be done on selected refresh cycles (e.g. every other refresh cycle at 120Hz), by surge-executing the emulator CPU in synchronization with a fast-scanning real-world raster.
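The speed-up and cycle-selection arithmetic above can be sketched as follows. This is a minimal illustration, not WinUAE's code; the function names are hypothetical, and the cycle selection assumes the display rate is an integer multiple of the emulated rate.

```c
#include <stdbool.h>

/* How much faster than real time the emulated CPU must run while racing a
 * scanout that takes scanout_s seconds, for an emulated refresh that lasts
 * emu_frame_s seconds. A 1/240 s scanout of a "60Hz" frame needs about 4x. */
static double surge_factor(double emu_frame_s, double scanout_s)
{
   return emu_frame_s / scanout_s;
}

/* On a fixed-Hz display running at an integer multiple of the emulated rate,
 * race only every k-th real refresh cycle (k = display_hz / emu_hz); the
 * cycles in between simply repeat the last completed frame.
 * Assumes display_hz is an integer multiple of emu_hz and emu_hz > 0. */
static bool race_this_cycle(unsigned long cycle_index,
                            unsigned display_hz, unsigned emu_hz)
{
   unsigned k = display_hz / emu_hz;
   if (k == 0)
      k = 1; /* fallback: treat every cycle as raceable */
   return cycle_index % k == 0;
}
```

At 120Hz with a 60Hz emulated machine, every other cycle is raced, and during that cycle the emulator surges at about 2x.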

For understanding the LCD scanout, see the GSYNC beam racing instructions (synchronizing raster scan lines to the real-world raster position on a GSYNC or FreeSync scanout): Page1, Page2. For practical reasons, though, it works best ONLY on 120Hz+ VRR displays due to an annoying graphics driver quirk. Toni of WinUAE asked me many questions, and I’ve successfully helped him implement beam racing with GSYNC/FreeSync for even less lag. That said, beam racing works on slow refresh cycles, fast refresh cycles, and variable refresh cycles: as long as there’s a refresh cycle that’s pretty close to the emulator interval, beam racing can be done on specific chosen refresh cycles. It’s basically catching the caboose of a passing train of a display scanout (or triggering your own scanout in the case of VRR), if you will.

And the synchronization between emulator raster and real-world raster doesn’t have to be perfect; it can be done at the frameslice level:

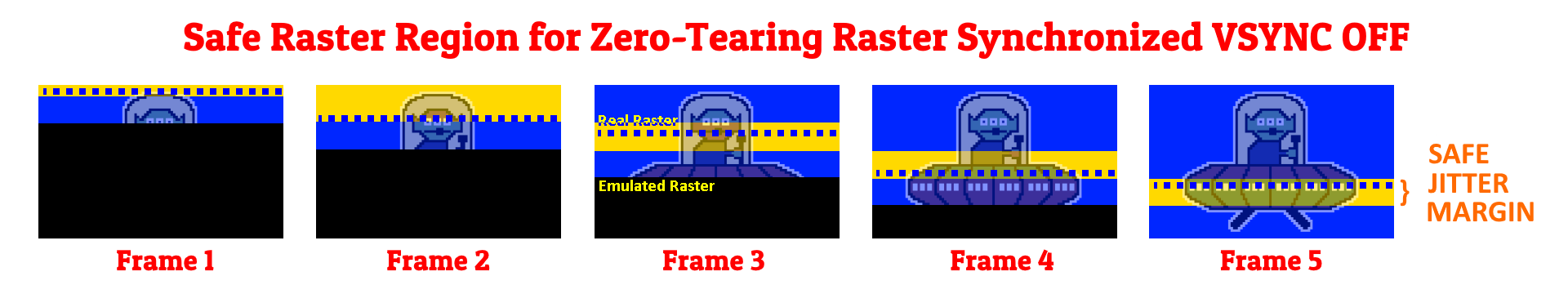

So this is a lagless VSYNC ON emulated via VSYNC OFF, with zero tearing: the tearing occurs on duplicate frameslices, and duplicates have no effect, so there’s no visible tearline. Voilà!
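The frameslice bookkeeping can be sketched in a few lines. This is an illustration under assumptions, not any emulator's actual code: the emulated frame is split into equal horizontal strips, each finished strip triggers a VSYNC OFF present, and the helper names are hypothetical.

```c
#include <stdbool.h>

/* Hypothetical frameslice bookkeeping. The emulated frame of emu_lines lines
 * is split into `slices` horizontal strips; each finished strip is presented
 * with VSYNC OFF. Provided the real raster is still above the new strip, the
 * tearline lands inside strips that are identical in the old and new buffers,
 * so it is invisible. */
static unsigned slice_of_line(unsigned line, unsigned emu_lines, unsigned slices)
{
   return line * slices / emu_lines;
}

/* True when `line` is the last emulated line of its slice: time to present. */
static bool slice_complete(unsigned line, unsigned emu_lines, unsigned slices)
{
   return line + 1 == emu_lines ||
          slice_of_line(line + 1, emu_lines, slices) !=
          slice_of_line(line, emu_lines, slices);
}
```

With 240 emulated lines and 4 slices, presents happen after lines 59, 119, 179 and 239.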

Toni said it was easier than expected to add beam-racing support to WinUAE that successfully synchronizes the emulator raster with the real-world raster. And apparently, higher Hz works just fine too (just selectively beam-race the appropriate refresh cycles in an accelerated surge cycle; it’s simply a faster top-to-bottom scanout), CPU and GPU performance permitting. I can do up to 7,000 frameslices per second, so my lag from emulator pixel render to photons hitting my eyes can be as little as 2/7000ths of a second, plus whatever the pixel response is (at least for bufferless gaming LCD monitors and for CRT displays). Buffered LCDs will add more lag but won’t interfere with beam racing the video signal, so how the display buffers frames is not the emulator author’s concern. Besides, most good desktop gaming LCDs no longer have buffer latency (at their highest Hz) and are capable of panel refresh synchronous to the signal scanout, with only GtG (pixel response) lag.
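The “2/7000ths of a second” figure equals two frameslice intervals; a tiny helper makes that arithmetic explicit for other slice rates. It only restates the post’s numbers, excludes pixel response (GtG), and the function names are illustrative.

```c
/* Arithmetic behind the "2/7000ths of a second" figure: it equals two
 * frameslice intervals. Pixel response (GtG) is not included. */
static double two_slice_lag_ms(double slices_per_second)
{
   return 2.0 * 1000.0 / slices_per_second;
}

/* Frameslices per refresh cycle, e.g. 7000 slices/s at 60Hz is ~116.7. */
static double slices_per_refresh(double slices_per_second, double refresh_hz)
{
   return slices_per_second / refresh_hz;
}
```

At 7,000 slices per second this gives about 0.286 ms, which is more than 100 slices per 60Hz refresh.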

Although RetroArch is currently fully frame-based, there’s no reason why it couldn’t (eventually, slowly, carefully) add support for optional raster hooks in the coming years.

But given the potential rearchitecting issues, I’d suggest waiting for other, simpler emulators to pave the way before inserting beam racing workflows into RetroArch. Let’s finish a cross-platform beam racing implementation first.

I’m achieving precise tearline positioning using only a microsecond clock offset from VSYNC timestamps, so I don’t need access to a raster register (that’s only cake frosting):

And that’s without access to a raster scan line register! Just generic VSYNC OFF plus precision clock-counter offsets from a VSYNC timestamp. (There are many ways to get a VSYNC timestamp on many platforms, even while running in VSYNC OFF mode.) VSYNC OFF tearlines are always raster-exact. You’re simply becoming a tearline jedi once you understand displays as well as some of us do.
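The timestamp-offset idea can be sketched as a raster estimate and its inverse. This is a minimal sketch under stated assumptions: a fixed refresh period, a VSYNC timestamp marking the start of line 0, and a known total line count including blanking (1125 is typical for 1080p timings). The names are hypothetical, and any constant present latency of a real driver would have to be calibrated separately.

```c
#include <stdint.h>

/* Sketch of raster estimation from a VSYNC timestamp, assuming a fixed refresh
 * period and that the timestamp marks the start of line 0. total_lines includes
 * the vertical blanking interval (e.g. 1125 for common 1080p timings), so a
 * result >= the visible height means the raster is currently in VBI. */
static unsigned estimate_raster_line(int64_t now_us, int64_t vsync_us,
                                     int64_t refresh_period_us,
                                     unsigned total_lines)
{
   int64_t elapsed = (now_us - vsync_us) % refresh_period_us;
   if (elapsed < 0)
      elapsed += refresh_period_us; /* now_us slightly before vsync_us */
   return (unsigned)(elapsed * total_lines / refresh_period_us);
}

/* Inverse: when to issue a VSYNC OFF present so the tearline lands near
 * `line`. Real drivers add a roughly constant present latency, which would
 * have to be calibrated and subtracted. */
static int64_t present_time_for_line(int64_t vsync_us, int64_t refresh_period_us,
                                     unsigned total_lines, unsigned line)
{
   return vsync_us + (int64_t)line * refresh_period_us / total_lines;
}
```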

Why, this is fantastic!

Don’t be surprised if you see it on Nvidia cards in a few months; unpatented good ideas are stolen swiftly.

They already use beam-racing with virtual reality.

What we’re doing is applying beam racing to emulator rasters in a practical way for the first time. Real-time synchronization between emulator raster and real raster for lagless emulator operation is now a magical reality. Toni, the WinUAE author, said it is the emulator holy grail! It’s worth the implementation difficulty, and it was easier for Toni than expected.

But virtual reality has already been doing it at the coarse strip level:

– Android beam racing for GearVR

https://www.imgtec.com/blog/reducing-latency-in-vr-by-using-single-buffered-strip-rendering/

– NVIDIA VRWorks front buffer rendering requires beam-racing to work

But what I’ve developed is a way to do this with emulators, and to do it reliably at the frameslice approximation level, using only VSYNC OFF (no front buffer needed, though one would help achieve single-scanline slices in the future). It works perfectly with zero tearing, with more frameslice strips than the 4-strip beam-racing VR renderers, and with a safety jitter margin so that raster-sync imperfections are completely invisible (until the error gets big, and even then it’s usually just a single-frame accidental appearance of tearing). Other than that, in normal operation it looks just like perfect VSYNC ON, as smooth as the original device, without the VSYNC ON lag. A lagless VSYNC ON.

That, indeedy, we can definitely, finally, reliably do, even in C# and script-like languages, and it can get practically line-exact if one decides to do optional hardware scanline polling. The emulator holy grail!!

We’re already skipping input lag caused by games’ internal logic in another thread, so we’re not easily impressed!

Joke aside, that’s interesting.

I think the beam racing implementation sounds more ideal for me as a CRT user, but both ideas are pretty freakin clever.

While it’s a boon for emulator users with CRTs, it’s just as lag-reducing on LCDs.

It’s compatible with any display (yes, even VRR, if you use the GSYNC+VSYNC OFF or FreeSync+VSYNC OFF simultaneous combo technique as linked). Output onto a display cable is, by necessity, a top-to-bottom raster serialization of pixels from a 2D plane (screen data); even DisplayPort micropackets are still raster-accurate serializations. Everything on any display cable is top-to-bottom. We’re just piggybacking on the fact that all video outputs on a graphics card still scan out top-to-bottom.

The only thing that really throws it off is a rotated display (e.g. left-to-right scanout), but if you’ve got a left-to-right scanning emulator (e.g. Galaxian or Pac-Man), then you can beam chase left-to-right scanouts too. To enable beam racing synchronization (sync between emu raster and real raster), the emulated and real-world scanouts must run in the same direction.

If you’ve ever used a Leo Bodnar Lag Tester, you know that it has three flashing squares (Top/Center/Bottom) to measure the lag of different parts of a display. It measures lag from VBLANK to the square, so the bottom square often has more lag, unless the display is strobed/pulsed (e.g. LightBoost, DLP, plasma), in which case the TOP/CENTER/BOTTOM squares are equally laggy.

The latency reduction offsets are similar regardless of what an LCD does: if an LCD (e.g. a fast TN LCD) measured 3ms/11ms/19ms TOP/CENTER/BOTTOM input lag on a Leo Bodnar Lag Tester, beam racing makes Top/Center/Bottom equally 3ms on many LCD panels, because you’ve bypassed the scanout latency by essentially streaming the rasters onto the cable in real time. On a CRT, the Leo Bodnar tester also measures more lag at the bottom edge, but the CRT is lagless if you do beam-chased output.

So what you see as “11ms” on DisplayLag.com (the CENTER square on the Leo Bodnar Lag Tester) will actually be “3ms” lag with the beam-racing method, because beam racing equalizes the entire display surface to match the input lag of the TOP-edge square. (see…bypassing scanout lag) The lowest value becomes the lag of the entire screen surface.

(Niggly bit for advanced readers: VSYNC OFF frameslices are their own mini lag-gradients, but a 1000-frameslice-per-second implementation will have only a 1/1000 s = 1ms lag gradient across each frameslice strip. The more frameslices per second, the tinier these mini lag-gradients become. Instead of one lag gradient for the whole display in the vertical dimension, the lag gradients shrink to individual frameslices, so each frameslice may have (example numbers only) 3.17ms-through-4.17ms lag, depending on which scanline inside the frameslice. This frameslice lag-gradient behavior was confirmed via an oscilloscope. These tiny lag gradients are much smaller than full-refresh-cycle lag gradients. Not relevant for most people here, even emulator developers, but I mention it for mathematical completeness’ sake.)
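The lag-gradient behaviour described above can be put into a simplified model. This is illustrative arithmetic, not measured data, and the names are hypothetical: base_ms stands for the display’s top-edge lag, and scanout_ms for the time to scan the visible area (about 16.0 ms for 1080 of 1125 lines at 60Hz). Plugging in 3 ms and 16 ms reproduces the 3/11/~19 ms TN example, while the sliced model stays within one slice interval of the top-edge value.

```c
/* Simplified model (illustrative, not measured data) of scanout lag at visible
 * line y of h lines. base_ms is the display's top-edge lag; scanout_ms is the
 * time to scan out the visible area (~16.0 ms for 1080 of 1125 lines at 60Hz). */

/* Full-framebuffer output: line y waits for the raster to travel down to it. */
static double fullframe_lag_ms(double base_ms, double scanout_ms,
                               unsigned y, unsigned h)
{
   return base_ms + scanout_ms * (double)y / (double)h;
}

/* Frameslice beam racing: the gradient restarts at the top of every slice,
 * so it never exceeds one slice interval. Assumes h is divisible by slices. */
static double sliced_lag_ms(double base_ms, double scanout_ms,
                            unsigned y, unsigned h, unsigned slices)
{
   unsigned lines_per_slice = h / slices;
   unsigned y_in_slice = y % lines_per_slice;
   return base_ms + (scanout_ms / slices) * (double)y_in_slice /
                    (double)lines_per_slice;
}
```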

Whatever the Leo Bodnar Lag Tester measures for the TOP square becomes the input lag of the MIDDLE and BOTTOM when you use beam-raced output. The lag is essentially equalized for the whole display surface, so there’s no additional bottom-edge lag with beam-raced output, even on LCDs. As many know, Blur Busters does latency tests, and some emu authors have now posted high-speed video proof in the forum thread, so it’s validated: real-time beam racing bypasses the mandatory scanout latency of full-framebuffer implementations.

So can we have a proof of concept implementation for this in RetroArch?

It can be done in somebody’s fork or whatever, just so long as people can play with it, experiment and then report back as to how well it’s working.

I’m happy to help any volunteer implement experimental beam racing in any emulator. Toni has been asking me many questions privately.

Currently, Calamity (GroovyMAME), Toni (WinUAE) and I are collaborating to refine this, and I’m writing an open source cross-platform beam racing demo (a Hello World type program for beam racing newbies). Back-and-forth discussion is currently occurring on pages 4-5 of this thread, though many more emails have been exchanged privately among us. If any coding volunteer wants to join in, we can help any coder become a Tearline Jedi too for their respective emulator work. If no coder resources are available, wait for a cross-platform beam racing helper framework to be created.

Is there anybody here who feels inclined to take this guy’s ideas and try to make a working proof of concept for RetroArch? If what he says above is to be believed, they’d be more than willing to provide feedback and help in that process.

This is a great idea! On a high level, the effect of this is the same as using Hard GPU Sync + a very high Frame Delay setting in RetroArch. However, using this method you’re moving from being focused on running the emulator as fast as possible to generate the whole frame (usually CPU limited) to being able to page flip as fast as possible (GPU limited).

I’m guessing this can be slightly tricky to implement in RetroArch, since it would require an API update in addition to core support. It really should be looked into, though.

It’s actually better than framedelay because it runs the video updates in sync with the real hardware. So for systems that rely on beam racing, such as the Atari 2600, it is able to show changes within the current frame, like the real hardware does. With framedelay, however high you set the value, this is never possible.

I’m concerned running constantly at 480 or 600fps will make a lot of coil whine and probably won’t be that good for your GPU.

It’s actually better than framedelay because it runs the video updates in sync with the real hardware. So for systems that rely on beam racing, such as the Atari 2600, it is able to show changes within the current frame, like the real hardware does. With framedelay, however high you set the value, this is never possible.

Yes, if the real system does input polling during scan-out, this method is superior. If input polling is done at the beginning of vblank, a very high frame delay will provide similar input lag performance.

I’m concerned running constantly at 480 or 600fps will make a lot of coil whine and probably won’t be that good for your GPU.

In terms of GPU load, there’s no inherent difference between a GPU-heavy 60fps and a GPU-light 600fps.

For example, 600fps in Quake uses less GPU horsepower than 60fps in Crysis 3.

Similarly, 600fps without HLSL in some of my installed emulators uses less GPU horsepower than 60fps with HLSL (depending on resolution and complexity).

That said, Toni is able to run HLSL shaders simultaneously with beam racing in WinUAE, albeit at only ~6-8 frameslices per refresh cycle on his graphics card, which is lower-powered than mine.

Running frame rates above the refresh rate is quite common in competitive gaming, and it does not tax a monitor any more. The same number of pixels per second goes out the video output; you’re just putting fresher frameslices onto the video output in real time, creating frameslice-based beam chasing.

Metaphorically, one is simply simulating front-buffer rendering via framesliced VSYNC OFF. Most of the waste is in gobbling up memory bandwidth flipping mostly-duplicate frames (with only one frameslice changed).
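A back-of-envelope sketch of that bandwidth waste, assuming (as a simplification) that every present redraws the whole back buffer even though only one slice changed. In exclusive fullscreen the flip itself may not copy anything, so treat these numbers as an upper-end estimate; the names are hypothetical.

```c
#include <stdint.h>

/* Back-of-envelope estimate, assuming each presented frameslice redraws the
 * whole w x h back buffer at bpp bytes per pixel even though only one slice
 * actually changed. */
static uint64_t bytes_per_second(uint32_t w, uint32_t h, uint32_t bpp,
                                 uint32_t presents_per_second)
{
   return (uint64_t)w * h * bpp * presents_per_second;
}

/* Fraction of those bytes that carry new content: one slice per present. */
static double useful_fraction(uint32_t slices_per_refresh)
{
   return 1.0 / (double)slices_per_refresh;
}
```

At 1920x1080, 4 bytes per pixel and 600 presents per second (10 slices per 60Hz refresh), that is 4,976,640,000 bytes/s, of which only a tenth is new content.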

I’m guessing this can be slightly tricky to implement in RetroArch, since it would require an API update in addition to core support. It really should be looked into, though.

Thinking architecturally:

Once front buffer rendering is made possible again by NVIDIA or AMD (they just added some relatively hidden APIs for it for VR, so it’s only a matter of time before one of us figures out how to send a special flag to enable that mode for emulator use), we can rasterplot pixels or scanlines directly into that front buffer instead! No page flipping, bufferless operation.

Then the slice approach can be skipped, and slices can become single scanlines. Darn-near line-exact beam racing, with the emulated raster only a couple of scanlines ahead of the real-world raster (on a scale-compensated basis), is possible on modern computers with that tight a margin (real-time priority, nothing else running). Ideally you want 0.5ms-1.0ms of jitter margin on most systems, but I’ve seen raster-exact VSYNC OFF tearlines, so beam racing with that tiny sliver of margin should be protected for as a future emulator path (in an access-to-front-buffer situation).

HLSL/shaders/etc. (curved scanlines, etc.) require some consideration: the real-world raster needs to stay above the topmost pixels of any curved or geometrically distorted HLSL scanlines. Other than that, beam racing is compatible with HLSL (with a fairly large graphics performance penalty). A simple scanline offset (or an offset in units such as 1000ths of screen height) easily compensates for HLSL/shader geometric distortions like simulated tube curvature, by giving a little more beam-chasing jitter margin between the real-world raster and the HLSL-fancied emulator raster (an emu-vs-real raster margin of roughly ~5% of screen height is usually sufficient).
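The margin reasoning in the last two paragraphs can be sketched as a safety check. This is a hypothetical illustration: the names are invented, the jitter allowance (0.5-1.0 ms) and shader allowance (~5% of screen height) come from the post, and both the real raster line and the slice top are assumed to already be expressed in real-display lines.

```c
#include <stdbool.h>

/* Hypothetical safety margin, in real-world scanlines, between the real raster
 * and the top of the next slice to present. It combines a timing-jitter
 * allowance (the post suggests 0.5-1.0 ms) with a shader allowance for curved
 * or distorted scanlines (the post suggests roughly 5% of screen height). */
static unsigned margin_lines(double jitter_ms, double refresh_ms,
                             unsigned total_lines, double shader_fraction,
                             unsigned visible_lines)
{
   double jitter_lines = jitter_ms / refresh_ms * (double)total_lines;
   double shader_lines = shader_fraction * (double)visible_lines;
   return (unsigned)(jitter_lines + shader_lines + 0.5); /* round to nearest */
}

/* Scale an emulated line number to the real display's visible lines. */
static unsigned scale_line(unsigned emu_line, unsigned emu_lines,
                           unsigned real_visible_lines)
{
   return (unsigned)((unsigned long long)emu_line * real_visible_lines / emu_lines);
}

/* Safe to present a new slice only if the real raster is still at least
 * `margin` lines above the slice's top edge (both in real-display lines). */
static bool safe_to_present(unsigned slice_top_line, unsigned real_line,
                            unsigned margin)
{
   return real_line + margin <= slice_top_line;
}
```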

Long term, I suspect the recommended coding methodology (for willing emu authors) is an optional once-per-raster-line callback hook of some sort in future line-exact or cycle-exact emulators, which transmits one scanline of pixels to the graphics renderer routine, effectively calling an outside function with the currently partially-completed frame buffer. Such a theoretical outside function can then do what it wants with the incomplete framebuffer, such as [a] copy the completed scanline directly to the front buffer, [b] OR intermittently “buffer” up that scanline (or do nothing, if we’re just given access to the emulator module’s own frame buffer) and decide to send a frameslice for tearingless VSYNC OFF beam racing at frameslice granularity, [c] OR essentially do nothing until the frame is complete and then present the full buffer, as in the traditional full-framebuffer low-lag methods (currently done). Any of [a], [b] or [c] can do its own appropriate pauses/sleeps/busywaits to synchronize (whether for a framedelay system or a raster beam racing system), including surge-executions. So it could be compatible with all possible current and future synchronization methods, including beam racing and front buffer rendering. Theoretically this could further improve emulation accuracy! Beam racing improves game preservation by becoming ever more faithful to the original behaviours of original machines (original lag, original beam racing, original real-time rasters delivered with much more identical realtimeness), so this architectural “thought exercise” is worthwhile for figuring out the easiest way to proceed (from a coding POV).
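The [a]/[b]/[c] hook idea can be sketched as a callback that the core invokes after each emulated line. This is NOT an existing libretro API: the typedef, enum, struct and functions are all hypothetical, and the pacing (sleeps/busy-waits against the real raster) is only marked by comments. The sketch just counts what each strategy would do per frame.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical per-scanline hook; this is NOT an existing libretro API. The
 * core calls it after finishing each emulated line, passing its partially
 * completed framebuffer. */
typedef void (*raster_line_cb)(void *user, const uint32_t *fb, unsigned width,
                               unsigned height, unsigned line);

enum present_mode {
   MODE_FRONT_BUFFER, /* [a] copy each finished line to the front buffer */
   MODE_FRAMESLICE,   /* [b] present whenever a slice is finished */
   MODE_FULL_FRAME    /* [c] present once the whole frame is finished */
};

struct frontend {
   enum present_mode mode;
   unsigned slices;
   unsigned events; /* line copies ([a]) or presents ([b], [c]) issued */
};

static void on_line(void *user, const uint32_t *fb, unsigned width,
                    unsigned height, unsigned line)
{
   struct frontend *fe = user;
   (void)fb;
   (void)width; /* a real frontend would copy or upload pixels from fb here */

   switch (fe->mode) {
   case MODE_FRONT_BUFFER:
      fe->events++; /* one line blitted straight to the front buffer */
      break;
   case MODE_FRAMESLICE:
      /* Crossing into the next slice (or finishing the last line) ends a
       * slice. Pacing against the real raster would happen here. */
      if ((line + 1) * fe->slices / height != line * fe->slices / height)
         fe->events++;
      break;
   case MODE_FULL_FRAME:
      if (line + 1 == height)
         fe->events++; /* traditional full-framebuffer present */
      break;
   }
}

/* Stand-in for a core's frame loop: emulate one line, then call the hook. */
static void emulate_frame(raster_line_cb cb, void *user, const uint32_t *fb,
                          unsigned width, unsigned height)
{
   for (unsigned line = 0; line < height; line++) {
      /* ... emulate one scanline into fb here ... */
      cb(user, fb, width, height, line);
   }
}

/* Convenience wrapper: run one frame and report how many events occurred. */
static unsigned events_for_frame(enum present_mode mode, unsigned slices,
                                 unsigned width, unsigned height)
{
   struct frontend fe = { mode, slices, 0 };
   emulate_frame(on_line, &fe, NULL, width, height);
   return fe.events;
}
```

The design point is that the core stays unaware of the strategy: the same per-line callback supports front-buffer, frameslice and full-frame presentation.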

For some emulators it’s super-easy to do (as Toni found out, it was easier than expected for his WinUAE), but for other emulators it is hard. It takes someone like me, who understands universal display mechanics (the high-Hz world, the VRR world, the scanout latency behaviours), to teach emulator authors how to do a cross-platform universal beam racer, so that’s what I’m currently doing, on an initial basis, for the first willing emu authors. (Not everyone understands how to beam-race a variable refresh rate display, for example!) I’ve notified a few others, who are watching to see how cross-platform and easy to use it is before implementing it in their emulators.

Ideally, emulator modules need sufficient discovery data added to them, in addition to other attributes like resolution: (A) Is this emulation module a raster-based system (TRUE/FALSE)? and (B) Scanout direction (UP/DOWN/LEFT/RIGHT attribute). Platforms have screen rotation detection APIs, and native orientation is top-to-bottom (with a few minor exceptions like the reverse scanout on the OnePlus 5, but there are already VR APIs to detect that too, for the beam-chased VR being done on Android GearVR). But we at least need that emulator module info so we can check that the scan directions are equal before enabling beam racing on rotatable screens (only one orientation will be beam racing compatible when beam racing on a tablet or phone; YES, beam racing actually works on phones too if you have access to either VSYNC OFF or the front buffer). Some emulator modules provide this info, but not all of them. You only want to enable beam chasing if (A) and (B) are compatible (e.g. HLE-type emulators will have A = false). If you’re rotating a computer monitor for a MAME-type arcade cabinet build, and its left-to-right scanout runs in the same direction as the game’s, then (B) is true. But if the scan directions are different, (B) would be false.
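The (A)/(B) discovery check can be sketched as follows. The struct and functions are hypothetical (libretro cores do not all expose such metadata today), directions are expressed as the way the beam moves across the image as the viewer sees it, and the display is assumed to natively scan top-to-bottom before rotation.

```c
#include <stdbool.h>

/* Direction the beam moves across the image, as seen by the viewer. */
enum scan_dir { SCAN_DOWN, SCAN_LEFT, SCAN_UP, SCAN_RIGHT };

/* Hypothetical per-core metadata for (A) and the emulated half of (B);
 * libretro cores do not currently all expose this. */
struct core_raster_info {
   bool raster_based;      /* (A): false for e.g. HLE-type emulators */
   enum scan_dir emu_dir;  /* scan direction of the emulated machine */
};

/* Physical scan direction of a panel that natively scans top-to-bottom,
 * after rotating it clockwise by quarter_turns_cw * 90 degrees. Rotating 90
 * degrees clockwise moves the panel's top edge to the right, so the beam
 * then travels right-to-left (SCAN_LEFT). */
static enum scan_dir display_dir_for_rotation(unsigned quarter_turns_cw)
{
   return (enum scan_dir)(quarter_turns_cw % 4);
}

/* Enable beam racing only if (A) holds and (B) the directions match. */
static bool beam_racing_allowed(struct core_raster_info core,
                                enum scan_dir display_dir)
{
   return core.raster_based && core.emu_dir == display_dir;
}
```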

Long term, HLSL/fuzzy-scanline/shader renderers may need to be optimized to render the screen partially (at least at a coarse frame-strip level; even 4 strips per refresh cycle can give up to a 75% lag reduction), to gain more frame rate or to become compatible with future front-buffer rendering. WinUAE currently does a full-screen shader rerender AFAIK, which is inefficient, but it can technically be optimized to become frameslice compatible.

The base stuff can be quite easy (once you figure things out), but thinking architecturally long-term (e.g. making sure it’s compatible with future front buffer rendering, optimizing HLSL/shader/fuzzyline renderers for segmented or strip operation, making the beam racing algorithm VRR compatible, making sure it’s fast/slow-scanout compatible, making sure it’s screen-rotation compatible, etc.) can get a little daunting. That’s where my help has been huge so far. “The devil is in the details.”
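The “up to 75% with 4 strips” figure follows if the worst-case wait for the raster shrinks from one full scanout to one strip’s worth; this reading, and the function name, are assumptions for illustration.

```c
/* Upper bound on the reduction of scanout-related lag when each refresh is
 * rendered in n strips: the worst-case wait for the raster shrinks from one
 * full scanout to 1/n of it. */
static double max_scanout_lag_reduction(unsigned n_strips)
{
   return 1.0 - 1.0 / (double)n_strips;
}
```

Four strips give 0.75, one strip gives no reduction, and more strips approach (but never reach) 100%.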

It’s actually better than framedelay because it runs the video updates in sync with the real hardware. So for systems that rely on beam racing, such as the Atari 2600, it is able to show changes within the current frame, like the real hardware does. With framedelay, however high you set the value, this is never possible.

Yes, this is correct. The impressive framedelay techniques were a big help, but they’re not the final input-lag holy grail: nothing gets better, more faithful or more original than raster synchronization. It reproduces the original machine’s latency, no matter where in the refresh cycle the input read occurred.

If a game reads input mid-frame or near the bottom of the frame, and updates the screen based on that just before the raster draws it, full-framebuffer emulators still add scanout lag, as the whole frame waits to be displayed on the screen. Beam chasing avoids that, since input reads can now be done in real time while the current frame is being scanned out.

Also, depending on how it’s configured, beam racing can actually require less CPU performance than the surge-execution method: you only need to execute at real-time speed at 60Hz, for 1/60sec scanouts, to get the original lagless feel, even for mid-screen input reads. The only condition is streaming the scanlines out in real time (either via VSYNC OFF or via the front buffer). This is an important consideration for advanced cycle-exact emulators like higan: being CPU-hungry, they are ideal candidates for latency reduction via beam racing. The same goes for underpowered platforms like the Raspberry Pi and microcontrollers, or emulator-based Ben Heck-style homebrews (or whatever potato you try to run emulator code on).

After reading this, if you decide there’s no shame in waiting a year for other emulators to get mature beam racing, no problem! That said, some of it is actually fun programming; it brings back the “raster interrupt” days in a way, if you ever enjoyed programming like that as some of us did! So if one of you decides to take a first shot at a RetroArch experimental branch, beginning with a few easily-compatible emulator modules, come join our discussions. Our little (but probably growing) circle will be glad to teach you how to become a Tearline Jedi: the art of raster-exact VSYNC OFF tearline control and disappearance, which unlocks lag-reducing beam racing skills so you can begin experimenting. If you prefer private email, say hello to [email protected]

Hi, I’m looking at this topic with a lot of interest. Concerning DirectX 11, it is actually less resource-intensive than OpenGL and is therefore to be preferred on Windows. With desktop composition disabled (absolutely necessary with DirectX), it is equivalent to OpenGL with Hard GPU Sync set to 0. I’m really hoping for an implementation of Hard GPU Sync with DirectX 11 to see what happens.