Oh ok it’s not that bad if that’s with SMW which isn’t the most responsive.

About the 16ms difference, yes, your brain corrects things a bit, but at a certain point (once you leave your comfort range) it makes a difference, especially with certain kinds of games.

If you’re into shmups or FPS games and have played them for a while, you begin to notice the difference and get better results at lower lag levels.

I recently acquired a SNES Classic Mini, mainly to get the controllers. I figured it could be interesting to use them on my other systems via the Raphnet low-latency classic controller to USB adapter (http://www.raphnet-tech.com/products/wusbmote_1player_adapter_v2/index.php). One thing that has bothered me for a while is that I haven’t been able to account for all the latency that I’ve measured in my tests so far. Subtracting all the known sources, there’s always been some lag left (around 0.7 frames). The sources could be:

- The HP Z24i display I’m using. Although it has been tested to have less than 1 ms input lag (yes, input lag, not response time or anything else), I have no way of confirming it.

- Operating system and driver overhead.

- Gamepad/controller.

So, I got the adapters delivered a couple of weeks ago and have run a few tests. The results are intriguing. I’ll be comparing the results to my trusty (but crappy) LED-rigged Retro-Link controller that I’ve used for all my past tests. Since I have no intention of attacking the SNES Classic controllers with the soldering iron, I have not installed an LED on any of them. Instead, I’ve tested this controller by filming it from the side, in front of the screen, while pressing the jump button. To get a feeling for how much this affects the result compared to the LED method, I’ve chosen to test the Retro-Link controller in this way as well (in addition to using the LED). That way, we’ll be able to see how much the two methods differ. So, I’ll be reporting the following:

- Retro-Link controller: Time from LED lighting up until character reacts on screen

- Retro-Link controller: Time from button appears pressed down until character reacts on screen

- SNES Classic Mini controller: Time from button appears pressed down until character reacts on screen

As a bonus, I’ll be including numbers for a real SNES on a CRT TV as well. I’ve shown results from this test previously, but I will include a bit more detailed results today.

The Raphnet adapter

[Image courtesy of Raphnet Technologies]

Before we dive into the results, let’s take a quick look at the Raphnet Technologies Classic Controller to USB Adapter V2. This is a pretty serious adapter that is configurable via a Windows/Linux application and features upgradable firmware. One of the things that is configurable is how often the adapter polls the controller for input. Please note that this is not the same as the USB polling (more on that below). We have two components here: 1) How often the adapter polls the controller itself and 2) How often the computer polls the adapter (i.e. USB polling rate). On the single player version of the adapter, the adapter can be set to poll the controller with a period as low as 1 ms (2 ms for the 2-player version).

As for the USB interface, one of the things that makes this adapter so good is that it is hard coded to use the fastest polling frequency the USB standard allows: 1000 Hz (as opposed to 125 Hz, which is the default used by pretty much all gamepads). This means that you don’t have to fiddle with operating system or driver hacks to use the faster polling rate. It just works. This fast polling rate more or less eliminates input lag caused by the USB interface, cutting the average input lag from 4 ms to 0.5 ms and the maximum from 8 ms to 1 ms.

If we combine the 1 ms USB polling interval with the 1 ms controller polling interval, we get a maximum lag of just 2 ms and a blistering average of 1 ms. [This actually depends on how these two poll events are aligned to each other, but those figures are worst case.]
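The polling figures above can be reproduced with a few lines of arithmetic. This is only a sketch: it assumes a button press arrives at a uniformly random moment between two polls, so the average wait is half the polling period and the maximum is one full period. The function name is made up for illustration.

```python
def poll_latency_ms(rate_hz):
    """Return (average, maximum) wait in ms until the next poll.

    Assumption: the press lands at a uniformly random time between polls.
    """
    period_ms = 1000.0 / rate_hz
    return period_ms / 2, period_ms


usb_default = poll_latency_ms(125)    # typical gamepad: (4.0, 8.0)
usb_fast = poll_latency_ms(1000)      # Raphnet adapter: (0.5, 1.0)
controller = poll_latency_ms(1000)    # adapter polling the controller every 1 ms

# Worst case: the two polls are unaligned, so the waits simply add up.
combined_avg = usb_fast[0] + controller[0]
combined_max = usb_fast[1] + controller[1]

print("USB 125 Hz :", usb_default)
print("USB 1000 Hz:", usb_fast)
print("Combined   : avg %.1f ms, max %.1f ms" % (combined_avg, combined_max))
```

If the two poll events happen to be aligned, the combined figures would be lower than this simple sum.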

The hardware and software setup

I’ve used my iPhone 8 to record videos of the monitor and LEDs/controllers at 240 FPS. I’ve then counted the frames from the LED lighting up or button appearing pressed down until the character on screen reacts (jumps). The results presented further down are based on 39 samples for each test case. Below are screenshots of each test case. The test scene is the starting of the very first level in Yoshi’s Island.

Retro-Link controller:

SNES Classic controller:

Real SNES on CRT TV:

This time I used my Dell E5450 laptop for testing, instead of my 6700K desktop. This was out of convenience and I was not out to demonstrate absolute lowest lag anyway, but rather the difference between the Retro-Link and the Raphnet adapter + SNES Classic controller combo.

Dell E5450

- Core i5-5300U (Broadwell)

- 16 GB DDR3-1600 CL11 (1T)

- Windows 10 Version 1709 (OS version 16299.125)

- Intel GPU driver 20.19.15.4531 (with default settings)

- RetroArch nightly from November 12 2017

- HP Z24i 1920x1200 monitor, connected to the Dell laptop via DVI. Native resolution was used in all tests.

- Super Mario World 2: Yoshi’s Island (NTSC)

- snes9x2010

RetroArch settings

- Default, except:

- video_fullscreen = "true"

- video_windowed_fullscreen = "false"

- video_hard_sync = "true"

- video_frame_delay = "6"

- video_smooth = "false"

Raphnet Technologies ADAP-1XWUSBMOTE_V2

- Adapter configured to poll controller with 1 ms interval (fastest setting available)

And, just to be clear, vsync was enabled for all tests.

Finally, I’m including results from a real SNES as well. That setup consists of:

- SNES (PAL) connected to CRT TV (Panasonic TX-28LD2E) via RF output

- Original SNES controller rigged with an LED connected to the B button

- Super Mario World 2: Yoshi’s Island (PAL)

The results

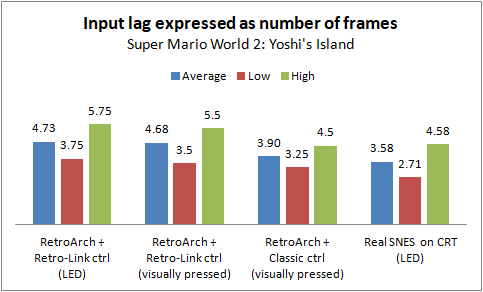

Without further ado, here are the results:

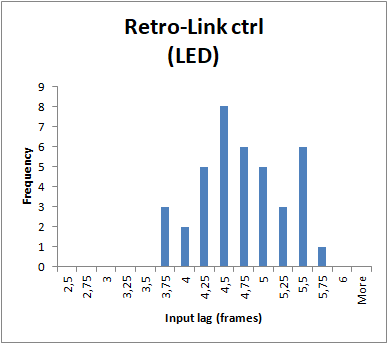

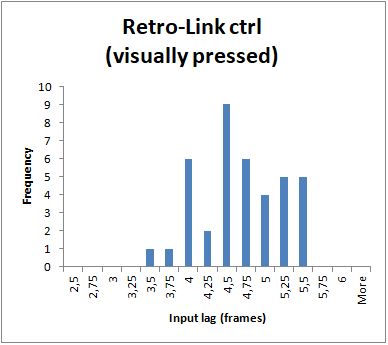

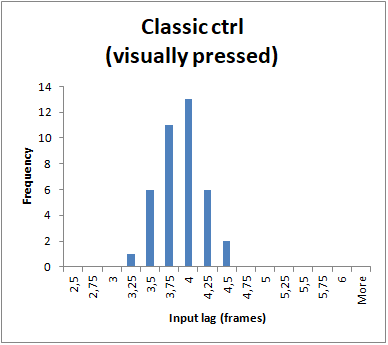

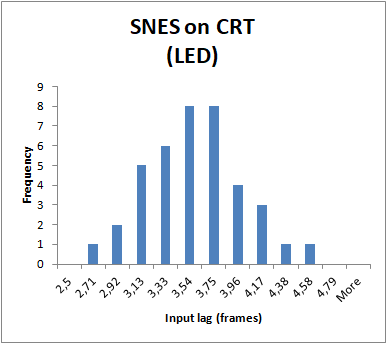

I’m also providing some charts showing the distribution of the samples for each test case:

(The reason for the “strange” looking values on the X axis of the last histogram above is that the SNES tested is a PAL console, so the ratio between the camera FPS (240) and console FPS (50) is different compared to the emulated test cases)
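To illustrate why the X axis steps differ, here is a small sketch of the conversion from counted camera frames to console frames. The 240 FPS camera rate and the 60/50 FPS console rates come from the post; the function name is just for illustration.

```python
CAMERA_FPS = 240  # slow-motion capture rate used for all tests


def camera_to_console_frames(camera_frames, console_fps):
    """Convert a count of camera frames into console frames."""
    return camera_frames * console_fps / CAMERA_FPS


# One camera frame corresponds to a different fraction of a console frame
# depending on the console's frame rate.
step_60 = camera_to_console_frames(1, 60)   # 0.25 console frames
step_50 = camera_to_console_frames(1, 50)   # about 0.208 console frames

print("Step at 60 FPS: %.4f frames" % step_60)
print("Step at 50 FPS: %.4f frames" % step_50)
```

So the emulated (60 FPS) results land on multiples of 0.25 frames, while the PAL console's results land on multiples of roughly 0.208 frames.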

Result analysis

I don’t know how you feel, but I think these results are pretty awesome. I had suspected that the Retro-Link controller had some inherent lag, but this finally proves it. But let’s not get ahead of ourselves. First, let’s conclude that analyzing the lag by visually determining when the button is pressed seems to marginally understate the input lag. It only has a minuscule effect on the average (0.05 frames) but can affect the min/max numbers slightly more (0.25 frames). All in all, I’d still say that analyzing input lag this way is a decent method, although an LED is preferred.

With that out of the way, we can see that the Raphnet adapter + Classic controller shaves off 0.78 frames on average. We also see a clear tightening between the min/max numbers, going from a difference of 2 frames to just 1.25 frames. This is to be expected with the tighter polling.

Let’s take the measured average of 3.90 frames and subtract all the known sources of input lag. 3.90 frames is 65 ms at 60 FPS, so:

65 ms

- 0.5 ms (average until next controller poll)

- 0.5 ms (average until next USB poll)

- 8.33 ms (average until start of next frame)

- 50 ms (3 frames. Yoshi's Island has a built in delay which means the result of an action is visible in the third frame.)

+ 6 ms (since we're using a frame delay of 6 in RetroArch)

- 11.67 ms (0.7 frames. This is how long it takes to scan out the image on the screen until it reaches the character's position in the bottom half of the screen.)

= 0 (!!!)

I was actually pretty shocked when I first did this calculation. It’s obviously a fluke that it pans out so perfectly, but even if the result would have been plus/minus a few ms, the conclusion is the same: all delays of any significance have been accounted for.
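For anyone who wants to redo the subtraction with their own numbers, the budget can be written out as a short script. All values are the ones listed in the calculation; the only assumption is that the measured 3.90 frames is rounded to 65 ms, as in the post.

```python
FRAME_MS = 1000.0 / 60  # one frame at 60 FPS

measured_ms = 65.0  # 3.90 frames at 60 FPS, rounded as in the post

# Positive values are sources of lag; the frame delay setting removes lag,
# so it enters the budget with a negative sign.
budget = [
    ("average wait until next controller poll", 0.5),
    ("average wait until next USB poll", 0.5),
    ("average wait until start of next frame", FRAME_MS / 2),
    ("Yoshi's Island built-in delay (3 frames)", 3 * FRAME_MS),
    ("RetroArch frame delay of 6", -6.0),
    ("scan-out to character position (0.7 frames)", 0.7 * FRAME_MS),
]

accounted_ms = sum(ms for _, ms in budget)
unaccounted_ms = measured_ms - accounted_ms

for name, ms in budget:
    print("%-45s %7.2f ms" % (name, ms))
print("%-45s %7.2f ms" % ("unaccounted", unaccounted_ms))
```

Swapping in a different measured average or frame delay shows directly how much lag would be left unexplained on another setup.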

With the above result, we can draw some conclusions:

- Contrary to popular belief, there doesn’t have to be any detrimental effect from using vsync. It doesn’t add any input lag on this test setup running RetroArch with these settings.

- The HP Z24i really does have negligible input lag, as once tested by Prad.de (they measured less than 1 ms). I have long believed this to be the case, but it’s nice to get strong evidence to support this. It’s also good to be able to show this, since some people, for some reason, still believe that LCD technology has this built-in input lag that cannot be eliminated. Some people have even made up stories about how HDMI itself adds input lag that, again, cannot be eliminated.

- Operating system and driver overhead is negligible. There’s obviously some time needed to process the input and make it available to the application, but it’s simply not significant enough to worry about. The results also don’t show any big fluctuations in input lag, proving that the operating system is able to poll and take care of the input in a fairly consistent manner.

These points go against a lot of the “common knowledge” that is repeated time and time again in input lag related discussions. My guess is that most of that “knowledge” is simply made up/assumed and the people that repeat this stuff haven’t really bothered to check if it’s actually true or of any significance.

So, what if we put the results into perspective and compare to a real SNES? First of all, the RetroArch results presented in this post could be further improved by simply increasing the frame delay setting. I used 6 because the laptop can’t handle any higher. My 6700K can handle a setting of 12. That would reduce the average by another ~0.36 frames, to just 3.54 frames. I don’t believe anyone has ever demonstrated input lag this low in SNES emulation previously.
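The effect of a higher frame delay can be estimated as below. This sketch assumes that every extra millisecond of frame delay removes exactly one millisecond of input lag, which is how the ~0.36 frame figure above is derived; the function names are made up for illustration.

```python
FRAME_MS = 1000.0 / 60  # one frame at 60 FPS


def lag_after_frame_delay(avg_frames, old_delay_ms, new_delay_ms):
    """Estimate average lag (in frames) after changing the frame delay.

    Assumption: extra frame delay translates 1:1 into reduced input lag.
    """
    return avg_frames - (new_delay_ms - old_delay_ms) / FRAME_MS


def emulation_budget_ms(delay_ms):
    """Time left per frame for the emulator to produce the frame."""
    return FRAME_MS - delay_ms


new_avg = lag_after_frame_delay(3.90, old_delay_ms=6, new_delay_ms=12)
print("Estimated average at frame delay 12: %.2f frames" % new_avg)
print("Time left to emulate a frame at delay 6 : %.2f ms" % emulation_budget_ms(6))
print("Time left to emulate a frame at delay 12: %.2f ms" % emulation_budget_ms(12))
```

The second function shows why higher settings need a faster machine: at a frame delay of 12, the core has less than 5 ms to emulate and render each frame.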

What we see is that we can actually match the SNES’s average input lag, despite using an LCD monitor. Granted, when I’m analyzing the videos I stop counting as soon as I see a hint of the pixels changing, so you might want to add a few milliseconds to compensate for the pixel response time. While my monitor isn’t the fastest when it comes to response times, some LCD monitors can be pretty quick (I have not investigated the possibility of getting a monitor that has as low input lag as mine, but with faster response times, though).

The one area where the real SNES does have a small advantage is in minimum input lag. Theoretically, we could almost match this as well, but it would require us to max out the frame delay at 15 ms and that, in turn, would require a very fast computer. Not really practical. However, RetroArch with a frame delay of ~12 would just be 0.2 to 0.3 frames slower in minimum input lag and I’d go out on a limb and say that no one would notice the difference.

One caveat should be mentioned here, in regards to the tests of the actual SNES: The tested console is a PAL version. The input lag result presented earlier is reported as number of frames, with the assumption being that PAL and NTSC consoles would respond in the same number of frames. Obviously, the PAL version would respond slower in absolute terms, since it has a lower frame rate (each frame takes 20 ms, compared to 16.67 ms on the NTSC version). I have not seen anything to suggest that there would be any additional lag on a PAL console, other than that caused by the lower frame rate, but without testing an NTSC console myself I can’t guarantee that it would respond in the same number of frames. As I’ve mentioned in an earlier post, there is around 0.3 - 0.4 frames of input lag in the average result for the PAL SNES that I’ve been unable to account for. Maybe it’s there on the NTSC version as well and maybe not. Even if the NTSC console were to respond quicker than the PAL version, the window for improvement is just those 0.3 - 0.4 frames, so it wouldn’t fundamentally change things.

To round this post off, we can finally conclude that the old adage that “emulation is by definition laggy” isn’t really true. What is true is that emulation is often laggy to some degree, due to bad or incorrectly set up software, incorrectly chosen components (or component choices that were necessitated by other factors) or due to performance constraints, such as in the case of the Raspberry Pi and similar hardware. However, it’s good to see that it doesn’t have to be this way. It’s also good to see that there’s no exotic hardware or software needed. These tests have used a regular desktop monitor and laptop. Well, I guess the most exotic things here are the Raphnet adapter and the SNES Classic controller. However, Raphnet offer a version of their adapter for the original SNES controller, so that’s an easier/cheaper way for most to get this kind of fast input. It’s just a shame that manufacturers of USB controllers aren’t more serious and at least offer the ability of using a faster poll rate.

Thanks for reading!

You hit the nail on the head here. Great investigation and really encouraging results!

Thanks!

Yep, to be honest it’s pretty maddening reading discussions on this subject on places like Reddit, NeoGAF, etc. I try not to get involved in those anymore, since it’s a losing battle trying to fight ignorance that way. Hopefully, this whole thread has at least added some substance to the collective knowledge anyway.

In a way, my post above feels sort of like the culmination of almost two years of obsessing over this stuff. I personally feel I now have the knowledge I set out to find. It started with some pretty rudimentary tests and a thread over at Byuu’s forum. I re-read my post over at Byuu’s a while ago and realized that I managed to answer all of those questions (and then some) pretty much on my own. It was also interesting to see that despite the questions being pretty clear and valid, I got all sorts of over-complicated answers and borderline irrelevant questions. Elitism? Incomplete knowledge on the subject? Who knows, but for all the frustration it brought at the time, I believe it was a valuable experience in the end.

With all the knowledge gathered over these years, what I’d really like to do now is start over, crazy as it may sound. The way it’s presented now, it’s all just a bit too fragmented. I’d like to put together an article that incorporates all the findings, presents fresh test data in a concise way and collects all the conclusions in an easy-to-read manner. This kind of article would take a lot of planning and require a huge amount of time and for that reason it will have to remain a dream, at least for a long time to come. Due to my current life situation, I can’t afford to spend any more time on this, at least over the next couple of years. Sometimes, I wish I had the same amount of spare time that I did 10 years ago, but then I wouldn’t have all the other great stuff in my life. These days, if I do get an hour or so of spare time (which is surprisingly rare), I try to spend it on actually playing some of the old classics - with low input lag!

It would be awesome if someone stepped in and continued the work though…

Hi @Brunnis,

We use the Leo Bodnar device to check input latency on monitors if you haven’t heard of it:

http://www.leobodnar.com/shop/index.php?main_page=product_info&cPath=89&products_id=212

I believe www.displaylag.com also uses it for their tests.

Thanks

Hi @Brunnis

You might also find the following article about input lag testing useful: http://www.tftcentral.co.uk/articles/input_lag.htm

@Brunnis Nice work, I would have loved to see the results for the SNES classic itself though!

I bought one for the controllers as well (to use with Retroarch Wii U and PC), but I’ve found that I really like having a dedicated SNES box that turns on almost instantly. So I find I’m using it more these days (strictly with Nintendo’s Canoe emulator) but I’ve been wondering about the input lag, although it does “feel” ok to me.

It’s not scientific at all, but I get similar results from the 240p test suite’s manual lag test on my SNES Mini and Nvidia Shield ATV.

I also tried passing a bunch of canoe options that specifically mention affecting latency and saw no improvement in performance vs the default options.

Great work Brunnis and I really appreciate all the work you’ve compiled up here. So in the end do you feel it’s better to have lower input lag or accuracy? I know it’s a subjective question but since you’ve dedicated so much time I figured you’d have some sort of valid opinion.

Also, which cores would you recommend for those willing to sacrifice accuracy for speed? Perhaps we should make a list so everyone can make that decision themselves. That list, with all the relevant lag settings, should be stickied in this forum to help newer people understand exactly what these settings do and what combination would work best for their setup.

@Brunnis: Did you test the current RetroArch on the Raspberry Pi 3 using the GL driver with the new working max_swapchain=2 setting? If I am right, we should have the same low-latency input that we have on the dispmanx driver with max_swapchain=2… but now on the GL driver!

Also, did you do input latency tests on the Snes Mini itself? How good is it?

Yeah, I’ve looked at it and did consider getting one, but decided I don’t want to spend that money right now. Also, would be nice if the device didn’t have hard coded resolution, but rather could default to using native resolution of the connected display.

Thanks for the link, but I believe I read that article back in 2016.

I only emulate NES and SNES right now. NES is pretty trivial to emulate and I just use Nestopia and have been happy with that. The hardware I use for my main emulation needs isn’t very powerful, so for SNES I’m best off using snes9x/snes9x2010. That means I give up some accuracy, but as long as I don’t notice any obvious glitches, I tend not to worry too much about that. I definitely understand those that strive to go the other way, though. When you do have the hardware for it, such as a high-end i7, the difference (input lag-wise) between using bsnes-balanced and snes9x/snes9x2010 is just 4-8 ms of frame delay, which isn’t exactly huge.

I’m probably not the best to give such recommendations, given my pretty limited emulation needs. I also think it might be hard giving general recommendations. I’d personally use the most accurate core my system can handle, unless it’s disproportionately demanding. For example, if one core provides good accuracy while allowing a frame delay of 12, while another is ever so slightly more accurate and allows no use of frame delay, I’d choose the former.

Not yet! I’ll try to get that done as well. I’ve not forgotten about it.

I didn’t. I’m guessing that the SNES Mini won’t output DVI via a HDMI to DVI cable, so that excludes using my HP Z24i. I’ll need to use a different display and I’ll also need to test RetroArch at 720p on that same display. I have a small Samsung 1080p LCD TV that I can use for this. If I test RetroArch with the same system and settings as I used in my previous post, we can get the input lag difference between the HP Z24i and the Samsung. Then we can subtract that from the SNES Mini figure to get a feeling for how it would perform on a really fast display.

Don’t know when I’ll have time for such a test, though…

I should mention that I have read the small amount of info there is regarding input lag on the SNES Mini. My current guess, based on what I’ve seen so far, is that they may have been able to get it down to around 4.2 - 4.4 frames for the test case I’m using (i.e. Yoshi’s Island). They’ve probably optimized their emulator to be able to generate the frame and push it to the GPU within a single frame period, i.e. similar to hard GPU sync or max_swapchain_images = 2. Coupled with fast input handling, that’s where I believe they would end up. It’s still not as good as can be accomplished with RetroArch (the difference being mainly the possibility of also using frame delay), but it’s really good. All speculation for now, of course, but would be interesting to run some tests to see if it’s correct or not.

I’m happy to say that with tips in this thread, a CRT, and a heap of stubbornness, I’ve achieved real provable next-frame response time — sub-16ms lag!

First, my testing procedure:

RetroArch on Linux in KMS mode

CRT (Sony Trinitron)

iPhone with 240fps slow-mo video capture

Here’s a simple video showing my methodology and results: https://www.youtube.com/watch?v=lBwLSPbHWoc

What’s great about using a CRT for these slow-mo tests is you can see the scanline move down the tube, and know exactly when a frame begins and ends. No need for fractional frame analysis and averaging. At 240fps, you get four captured frames per TV frame. Obviously, the faster slow-mo camera you have, the better. But iPhones and other smartphones do just nicely.

Like @Brunnis said (at some point), it’s unnecessary to hack together wires and LEDs to controllers for personal testing. Deliberately/flamboyantly pressing a button on the controller while simply holding it in front of the TV is enough. At 240fps, there’s only about a single slow-mo video frame (or 4ms) of ambiguity as to whether a button is hit or not.

I’ve always used Super Mario Bros. on the NES as my casual lag litmus test, and let my muscle memory be the judge. It seemed logical to use for my slow-mo tests too. I have a real NES (well, an AV-modded Famicom) for comparison using the same CRT.

Early in the process, I discovered two things:

1: On real hardware, Mario has a one-frame lag! I feel completely betrayed all these years having never known this! The soonest you’ll see an input response (jumping, etc) is greater than 16ms after your input.

2: RetroArch’s Nestopia core seems to have an additional frame of lag unaccounted for, bringing the total minimum lag for a Mario jump to over 32ms. QuickNES does not have this additional frame of lag.

With these two findings, I decided to find another game to test. I chose Pitfall! for the Atari 2600, using the Stella core.

With Pitfall, I witnessed a response on the very next frame. In the video linked above, you can clearly see me hit the button near the end of one frame, and on the next, Harry jumps! Essentially no way to improve compared to original hardware. Pack it up. We’re done here.

This method of testing has worked really well for me, and was not very difficult at all. With a video app that allows for frame-by-frame movement (on macOS, I just use QuickTime Player), counting frames is easy. A CRT is of course not required, but watching the beam race down the tube lets you know exactly when a new frame is coming. And hopefully, knowing that Stella can react with zero lag will help others test their own equipment much more easily.

Eliminating lag has been a crusade of mine for years. It had been distracting enough that merely enjoying old games was nearly impossible. Maybe now I can play my old games without “worry”.

Extra stuff:

There’s been talk about digital-to-analog converters (HDMI to VGA, or DP to VGA) being a source of display lag. Yeah sure, if you want to be pedantic (we all do), every signal transformation TECHNICALLY produces additional lag, but in most cases, the lag is beyond minuscule. Less than a single millisecond.

Think about it: There’s not much hardware in most cheap converters. Each and every frame of 1080p video contains over 2 million pixels, which is roughly 6MB of data at 24 bits per pixel. If the adapter has sizable lag, where is it going to PUT those frames it’s supposedly holding onto?
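To put rough numbers on that argument, here is a back-of-the-envelope sketch. It assumes uncompressed 24-bit colour at 1080p and 60 Hz and ignores blanking intervals; the function names are made up for illustration.

```python
def frame_bytes(width, height, bytes_per_pixel=3):
    """Size of one uncompressed frame (assumes 24-bit colour by default)."""
    return width * height * bytes_per_pixel


def buffer_bytes_for_lag(lag_ms, width=1920, height=1080, fps=60):
    """Memory a converter would need to hold back `lag_ms` worth of video.

    Assumption: active pixel data only, blanking intervals ignored.
    """
    return frame_bytes(width, height) * fps * lag_ms / 1000


one_frame = frame_bytes(1920, 1080)
one_ms = buffer_bytes_for_lag(1.0)

print("One 1080p frame : %.1f MB" % (one_frame / 1e6))
print("1 ms of video   : %.0f KB" % (one_ms / 1e3))
```

Under these assumptions, delaying the signal by even a single millisecond means buffering a few hundred kilobytes, and a full frame of lag needs several megabytes of memory.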

Full specs:

CPU: i5-5675C

Graphics: Intel Iris 6200

OS: Ubuntu 17.04

Latest RetroArch Nightly

Display: Random DisplayPort to VGA adapter

Extron VSC 500 (not required, but makes my VGA 240p signal look nicer)

VGA to circuit feeding CRT jungle chip

Sony Trinitron TV

Linux Settings:

Custom 1920x240p EDID via DisplayPort

RetroArch launched in KMS mode through basic command-line (not GUI)

Linux RetroArch settings:

VSync On

Maximum Swapchain Images: 2

Frame Delay: as high as you can go without stuttering. 15 works for me for 8-bit systems

Integer Scaling

No filters

Input device: Super Famicom controller

Dual SNES controller to USB adapter - V2, configured to 1ms of lag. Website specs say 2ms minimum, but hey, 1ms was an option in the controls, and Linux reports 1ms poll time…

Beautiful! Thanks for sharing your video verification!

That’s interesting about Nestopia. We’ll have to poke around and see if we can figure out what’s up there.

All updated NES cores (Nestopia, Fceumm, Mesen, QuickNES) react in 2 frames internally here.

Mario checks input at the end of the frame, near scanline 257 (or was it 247?). Even if you press jump in time for that, he won’t jump the next frame, but will jump the one after that. So, on a CRT, if Mario is at the very top of the screen, you might see him jump ~20ms later, but at the bottom of the screen like he typically is, it’ll probably be at least ~30ms until the CRT updates Mario’s sprite. On an emulator (or using anything that doesn’t update the picture in real time like a CRT), this means that the minimum lag will always be at least 2 whole frames, as far as I know.
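The ~20ms and ~30ms figures can be roughly sanity-checked with scanline arithmetic. This is only a sketch under stated assumptions: an NTSC NES with 262 scanlines per frame at about 60.0988 Hz, input polled at scanline 257 (the figure quoted above, which may be off), and the response appearing two frames after the one in which the poll happened. The sprite scanlines used (32 and 200) are hypothetical positions chosen for illustration.

```python
SCANLINES_PER_FRAME = 262   # NTSC NES
FRAME_RATE_HZ = 60.0988     # NTSC NES frame rate
LINE_MS = 1000.0 / (FRAME_RATE_HZ * SCANLINES_PER_FRAME)


def ms_until_visible(poll_scanline, sprite_scanline, frames_skipped=1):
    """Time from the input poll until the beam reaches the sprite.

    frames_skipped is the number of whole frames drawn between the frame
    in which the poll happens and the frame that shows the response.
    """
    lines = (
        (SCANLINES_PER_FRAME - poll_scanline)     # rest of the polling frame
        + frames_skipped * SCANLINES_PER_FRAME    # frame(s) with no response
        + sprite_scanline                         # beam travel to the sprite
    )
    return lines * LINE_MS


near_top = ms_until_visible(257, sprite_scanline=32)      # hypothetical position
near_bottom = ms_until_visible(257, sprite_scanline=200)  # hypothetical position

print("Sprite near top of screen   : %.1f ms" % near_top)
print("Sprite near bottom of screen: %.1f ms" % near_bottom)
```

These are best-case numbers for a press that lands just before the poll; a press that arrives right after the poll waits almost a full extra frame.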

I’m uploading my raw footage of CRT tests for Super Mario Bros, Real NES vs. QuickNES vs. Nestopia, if anyone’s interested in studying further. When this post is an hour old, it should be ready for viewing here: https://www.youtube.com/watch?v=x2Y9orESg8A

I’m going to sleep now, so hopefully the upload doesn’t fail.

FWIW, I noticed the additional lag in Nestopia on earlier LCD screen tests as well, using a different computer running Ubuntu 17.10.

The Advanced Test core should also show next frame response with the latency test, if you want to take any possible latency from games out of equation and test the latency of RetroArch itself.

This is great work, guys. I’ll be switching over to QuickNES from now on. A nice breakdown of each core would be great to have. MAME vs FBA would be a great one to try out.

Ever since I tried RetroArch for the first time, there was no doubt in my mind that it was the future. It overcame the crippling input lag that plagues many standalone emulators, and the future looks bright, as this will only get better as computer hardware gets faster and cheaper. Someday we’ll be able to use a frame delay of 15 on the BSNES accuracy core. Although, with the exception of only a handful of games, I’m pretty hard pressed to find inaccuracies in the games that I play between BSNES balanced and SNES9X.

QuickNES is amazing! However it is very bare bones. It doesn’t run any Japanese titles or even have a turbo button function lol. Nestopia may have more input lag, but it has the ability to do so much more. The input latency on Nestopia is already so incredibly low that I don’t feel held back at all, and I’m on an LCD TV. QuickNES will be my go to for American releases for sure. Nestopia will be for everything else.

As always amazing work Libretro!

I tested SMB a bit with Hard GPU Sync 0 frame + the frame delay setting.

It runs fine with Frame delay on 13 with QuickNES and Fceumm, 12 Nestopia, 10 for Mesen.

I have to go into the Nvidia control panel settings and set power management to “prefer max perf”, or the GPU will regularly throttle, making the game slow down.

With that on, I could keep a shader on without problem (crt-geom).

(i5-3570k@4GHz, win7 x64, Geforce 770.)