I’m not interested in nostalgia or some dubious notion of “authenticity.” I’m interested in what actually improves the objective picture quality. If someone had offered me a high-end RGB monitor in 1991, or if I had been able to afford one, it would have been a no-brainer to go for the RGB CRT; refusing it would have been slightly nuts. Objective picture quality > nostalgia. As for half of the screen being pure black… well, that’s what a CRT screen does. A CRT screen is mostly black space, with the image made up of brightly colored dots.

Also, look at the RGB monitor used on the Toys R Us SNES display unit. Flat screen, check. RGB, check. Sharper than any consumer TV, check. This was in the early 90s.

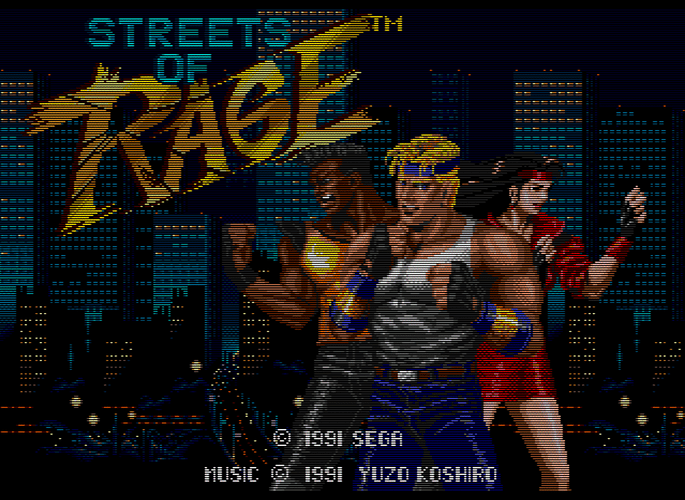

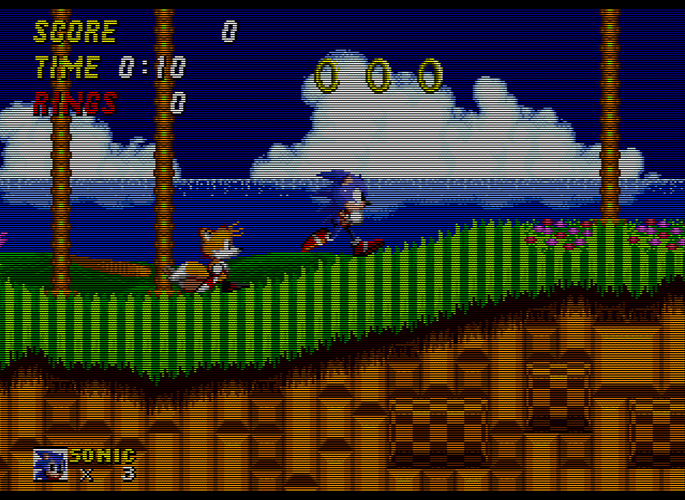

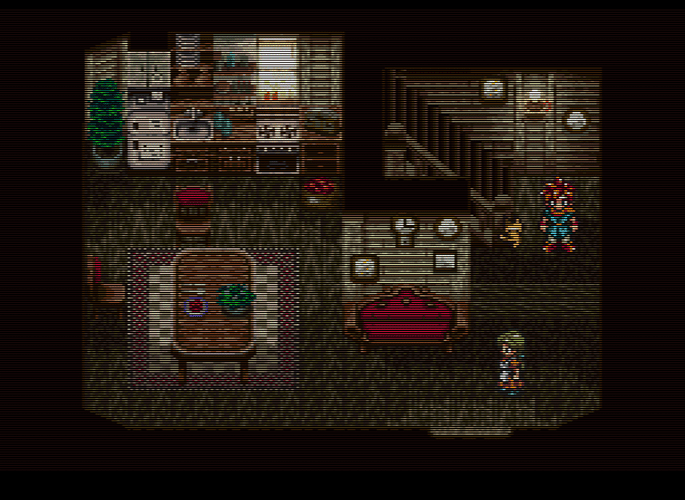

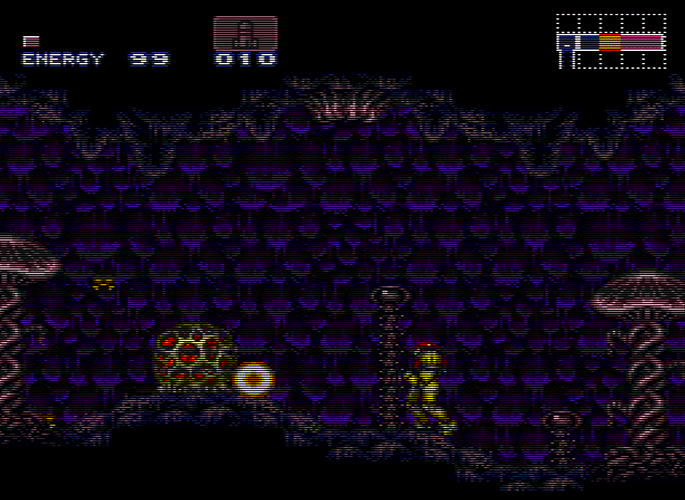

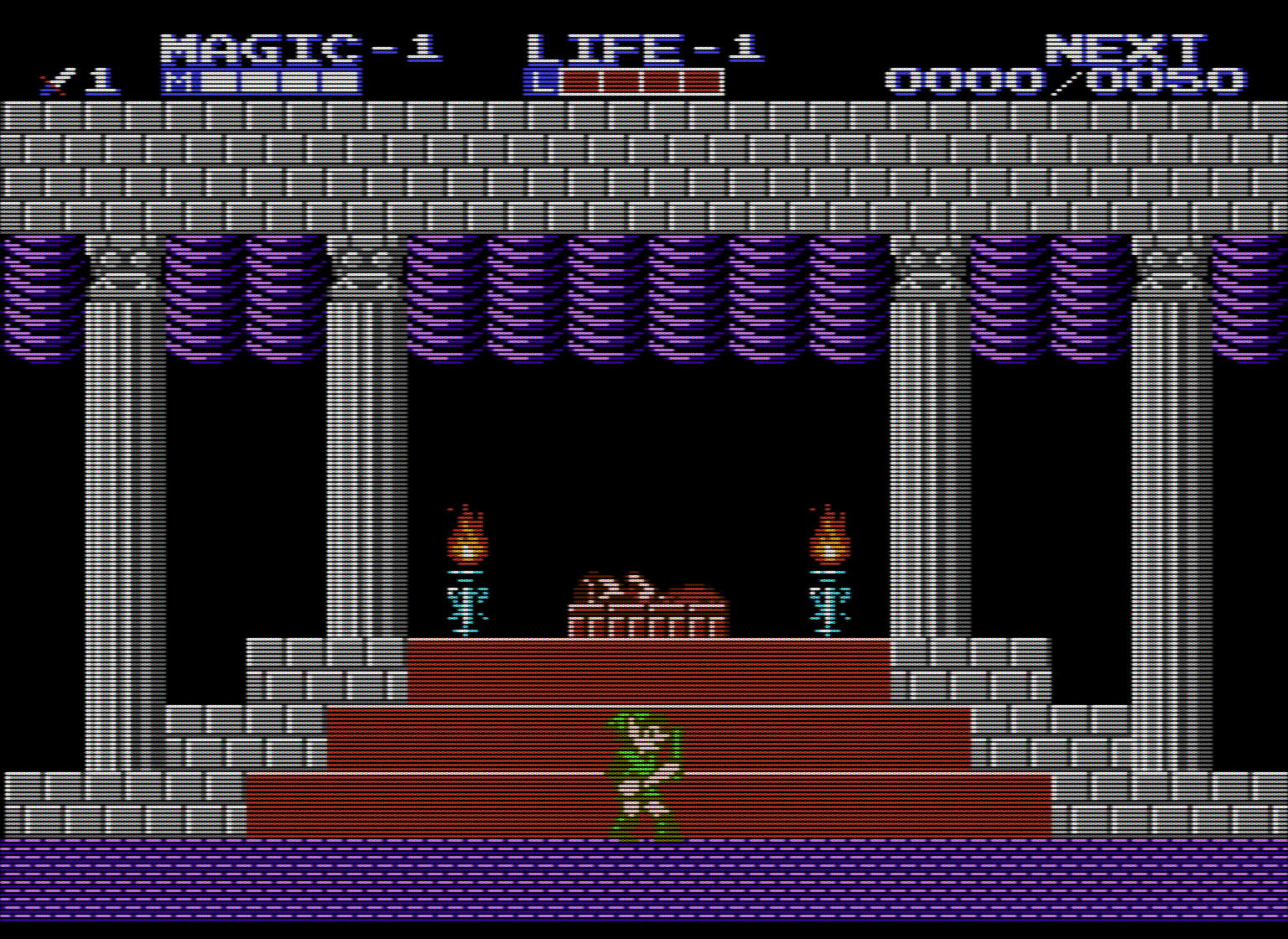

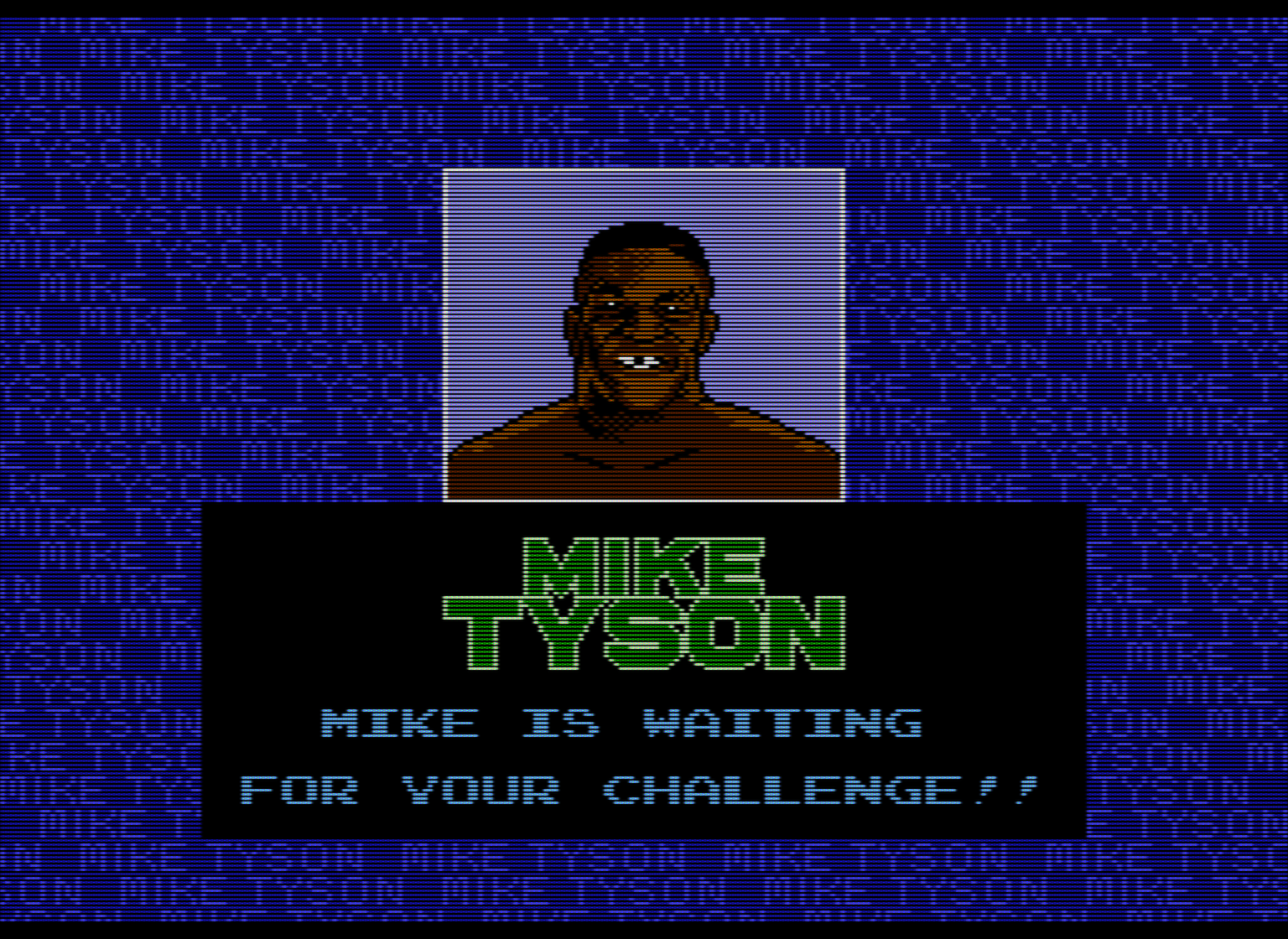

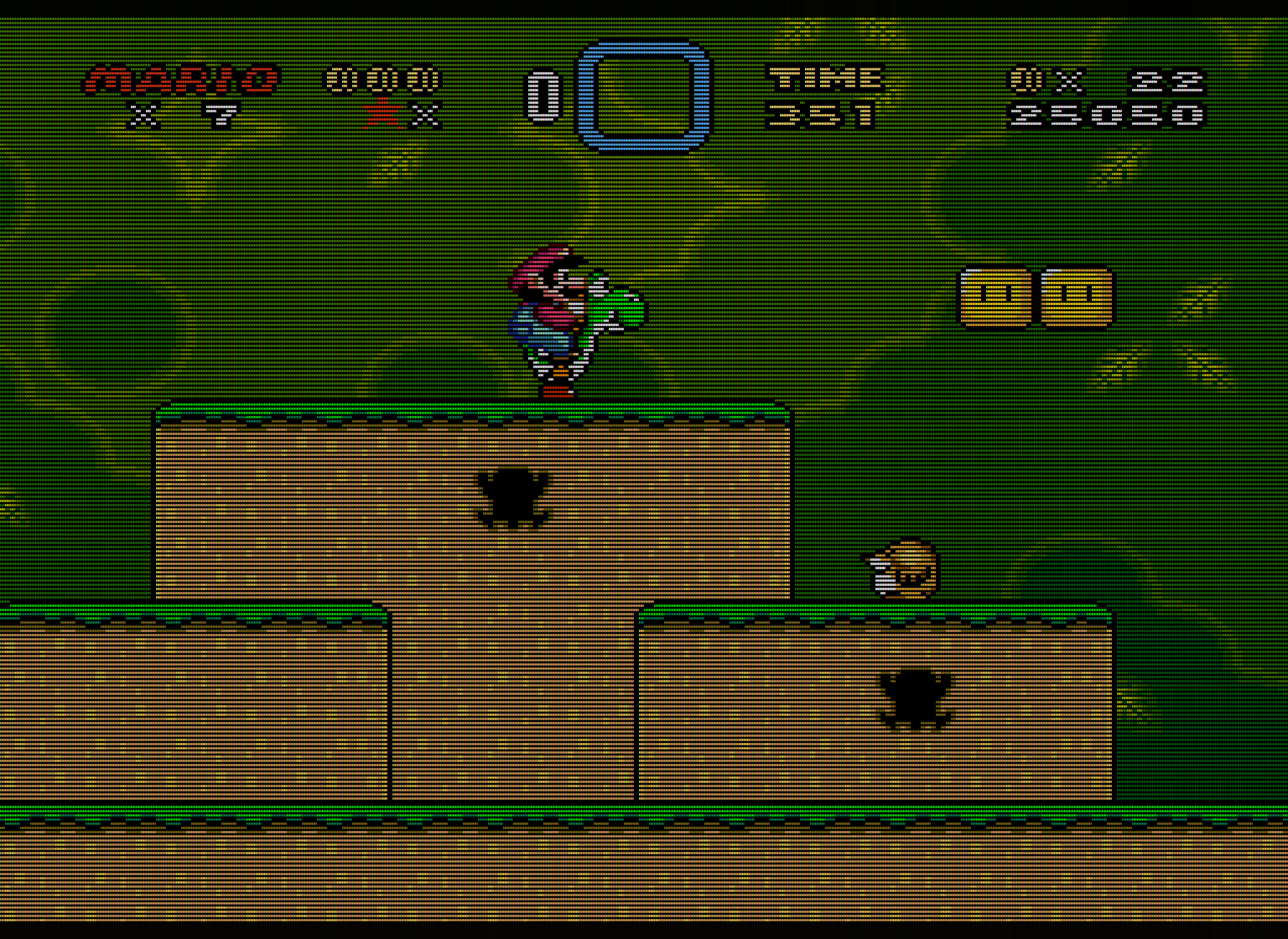

The scanlines and shadow mask are essential to recreating the native display environment for 240p content. Without scanlines, you’re looking at an image that is visually identical to a line-doubled image. Without the phosphor structure and lower TVL of the mask, the image is far too smooth (which may be counter-intuitive). The scanlines and mask are both essential for reducing the high-frequency content, and they make the image objectively much easier on the eyes. This relies on a well-known feature of the human visual system that is also exploited in pointillist painting. 240p content was never meant to be displayed as raw pixels; it’s like nails on a chalkboard for your eyes.
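To make the scanline and mask idea concrete, here is a minimal sketch in plain Python. It is not the shader used for the images above; the function name `apply_crt_mask`, the integer `scale`, and both strength parameters are hypothetical, and it assumes a simple RGB stripe (aperture grille style) mask with one channel per output column.

```python
def apply_crt_mask(image, scale=4, scanline_strength=0.5, mask_strength=0.5):
    """Upscale a low-res RGB image, then overlay scanlines and an RGB stripe mask.

    image: list of rows, each row a list of (r, g, b) floats in 0..1.
    scale: integer upscale factor (assumed for simplicity).
    """
    bright_rows = scale - scale // 2  # upper part of each source line stays lit
    out = []
    for row in image:
        for sub_y in range(scale):
            # The lower part of each source line is dimmed to form the scanline gap.
            line_gain = 1.0 if sub_y < bright_rows else 1.0 - scanline_strength
            out_row = []
            for x_src, (r, g, b) in enumerate(row):
                for sub_x in range(scale):
                    x = x_src * scale + sub_x
                    keep = x % 3  # column 0 keeps red, 1 keeps green, 2 keeps blue
                    pixel = tuple(
                        c * line_gain * (1.0 if ch == keep else 1.0 - mask_strength)
                        for ch, c in enumerate((r, g, b))
                    )
                    out_row.append(pixel)
            out.append(out_row)
    return out
```

With both strengths at 1.0, a white source line turns into a row of pure red, green, and blue stripes followed by a fully black gap, which is the "mostly black space with brightly colored dots" structure described earlier.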

The image I posted immediately above is actually a nearly perfect recreation of a 360 TVL aperture grille CRT without any of the artifacts that people sought to eliminate on their CRTs. For reference, a 20" TV from the 80s or 90s was around 300 TVL. The only thing lacking is the mask strength, which can’t be increased any further without compromising too much on brightness. This actually looks more like a 20" Trinitron than any shader I’ve seen at 1080p.
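The trade-off between mask strength and brightness can be put into rough numbers. The sketch below is only an estimate under stated assumptions: a flat white image, linear light (no gamma), an RGB stripe mask where each column keeps one channel and dims the other two, and scanline gaps covering a fixed fraction of each line. The function name and parameters are hypothetical.

```python
def brightness_retained(mask_strength, scanline_strength=0.0, dark_fraction=0.5):
    """Fraction of average light output left after mask and scanlines.

    mask_strength: 0.0 (no mask) to 1.0 (other two channels fully off).
    scanline_strength: 0.0 (no scanlines) to 1.0 (gaps fully black).
    dark_fraction: share of each source line covered by the gap (assumed 0.5).
    """
    # One channel stays at full level, the other two are scaled down.
    mask_gain = (1.0 + 2.0 * (1.0 - mask_strength)) / 3.0
    # Only the gap portion of each line is dimmed.
    scan_gain = 1.0 - dark_fraction * scanline_strength
    return mask_gain * scan_gain
```

Under these assumptions, a full-strength mask alone leaves about a third of the light, and adding full-strength scanlines leaves about a sixth, so the display would need roughly six times the headroom to look as bright as the unmasked image.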

At 1080p, you can’t add too much glow/bleed/blending without significantly reducing the emulated TVL, which winds up making the image look blurrier than any well-functioning consumer CRT ever was. At 720p you can just forget about mask emulation.
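A back-of-the-envelope calculation shows why output resolution limits the emulated TVL. This sketch assumes square pixels, a mask built from 3-pixel-wide RGB triads, and that each triad resolves at most one line; none of these are stated in the post, and real TVL measurements are less tidy. Since TVL counts lines across a width equal to the picture height, only the vertical resolution matters here.

```python
def emulated_tvl(vertical_res, triad_width_px=3):
    """Rough upper bound on emulated TVL for a given output resolution.

    vertical_res: output height in pixels (equals the pixel count across a
                  width of one picture height when pixels are square).
    triad_width_px: pixels per RGB triad in the mask (assumed 3).
    """
    return vertical_res / triad_width_px


for height in (720, 1080, 2160):
    print(height, emulated_tvl(height))
```

Under these assumptions, 1080p tops out at 360 TVL, which lines up with the 360 TVL figure mentioned earlier, while 720p lands at 240 TVL, below the roughly 300 TVL of a typical 20" set. Any added blur pushes the effective number lower still.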

I think a lot of people are misidentifying what it is about a CRT screen that makes the image look better. CRTs weren’t blurry; they were lower resolution. A lower resolution image is not the same thing as a blurred image. People are mistakenly conflating blur with smooth appearance. A smooth-looking image is not the same thing as a blurry image, and an image on a CRT appeared smooth for reasons that have almost nothing to do with blur.

At 4K and higher resolutions we can have more accurate blending (really, 8K is needed to be truly accurate), but it becomes moot with HDR-capable displays. With HDR you can create actual glow and blending by cranking up the brightness and getting the individual emulated phosphors in the RGB mask to glow as brightly as the real thing on a CRT.

EDIT: I just realized this effect could be good for emulating old computer stuff that wasn’t 240p and didn’t have scanlines, so I suppose it’s best to leave it in there.

The LCD backlight should be set to 100% and all the room lights should be turned off.