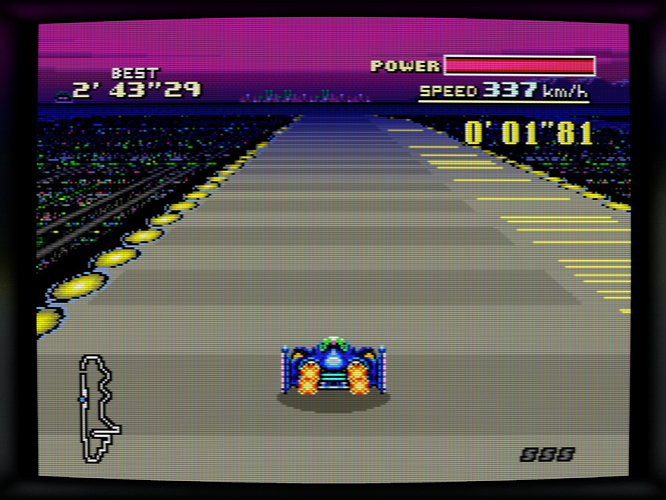

The bezel shader consumes more GPU power than the entire rest of the shader chain combined, so if you have performance issues, start there.

The next heaviest shader is scanline-advanced, largely because of anisotropic filtering for masks and geometry.

Each shader has a bypass parameter that will eliminate most processing for that shader stage. I made it for debugging and fun, but it is also handy for finding out where the performance bottlenecks are.
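One way to use the bypass parameters for profiling, as a rough sketch: note the average frame time with the full chain, then again with each stage bypassed in turn, and treat the difference as that stage's cost. The helper `stage_costs`, the stage list, and all the timings below are made-up placeholders for illustration, not measurements.

```python
def stage_costs(full_ms, bypassed_ms):
    """Estimate each stage's cost as the frame time saved by bypassing it.

    full_ms: average frame time (ms) with every stage active.
    bypassed_ms: maps stage name -> average frame time (ms) with only
                 that stage bypassed.
    Returns (stage, saved_ms) pairs, heaviest stage first.
    """
    costs = {stage: full_ms - ms for stage, ms in bypassed_ms.items()}
    return sorted(costs.items(), key=lambda item: item[1], reverse=True)


# Placeholder numbers for illustration only (not real measurements).
example = stage_costs(
    10.0,
    {"bezel": 4.0, "scanline-advanced": 7.0, "composite": 9.0},
)
for stage, saved in example:
    print(f"{stage}: ~{saved:.1f} ms")
```

Since bypass only removes most of a stage's processing, numbers obtained this way slightly underestimate each stage's true cost.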

While I’m developing this on a modern rig, I have a laptop with an iGPU that I’ll use to develop the scanline-basic shader. Originally scanline-basic was just a scanline shader without geometry or phosphor simulation (and it is the one, ported to GLSL, that I still use to this day for 86Box). I’m not sure what scope the basic shader will expand to cover; probably a simplified composite/S-video chain (e.g. notch only and a single YUV filter stage). Work on that probably won’t happen for a while though.

The plan right now is to make presets for all the major consoles from NES to N64. This will cover the majority use case of RetroArch and give enough of a sample to work through a lot of bugs. (I seem to have already fixed the scaling issue we discovered, so with the next release you can use an arbitrary scaling factor like 3x instead of 4x and get some performance back.)
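For a rough sense of what dropping from 4x to 3x buys, here is a quick back-of-the-envelope calculation. It assumes the shader cost scales roughly with the number of output pixels, which is a simplification and not something I've measured, and it uses the NES's 256x240 frame as the example source size. `pixel_ratio` is just an illustrative helper.

```python
def pixel_ratio(scale_a, scale_b, width=256, height=240):
    """Ratio of output pixels at scale_a versus scale_b for one source frame."""
    pixels_a = (width * scale_a) * (height * scale_a)
    pixels_b = (width * scale_b) * (height * scale_b)
    return pixels_a / pixels_b


# 3x -> 768x720, 4x -> 1024x960
ratio = pixel_ratio(3, 4)
print(f"3x shades {ratio:.4f} of the pixels that 4x does")
```

Under that assumption, 3x shades 9/16 of the pixels that 4x does, so a bit under half the per-pixel work goes away.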

Most of the screenshots of the composite/S-video shader you see, the ones from before I did the bezel, use a single-pass composite filter. This was a clever implementation, but I soon ran into limitations given the degree of analog modeling I wanted. Still, I think the single-pass shader gives good results, and I may salvage it for scanline-basic if it does indeed perform better than the two-pass method.