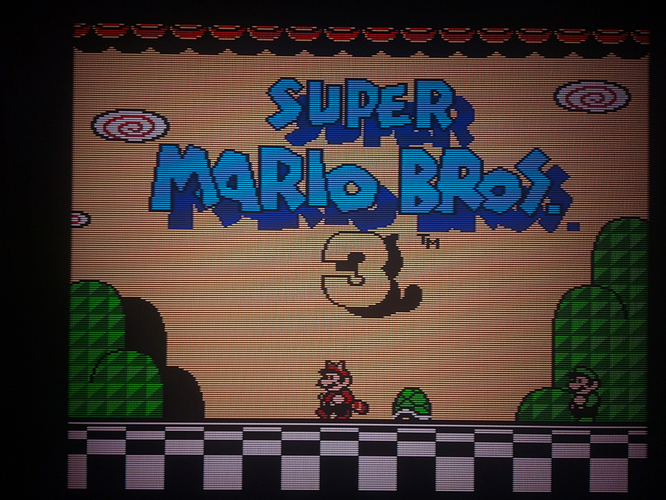

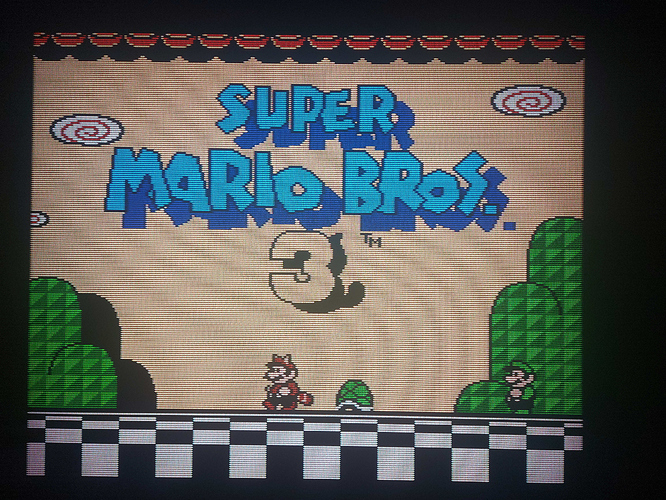

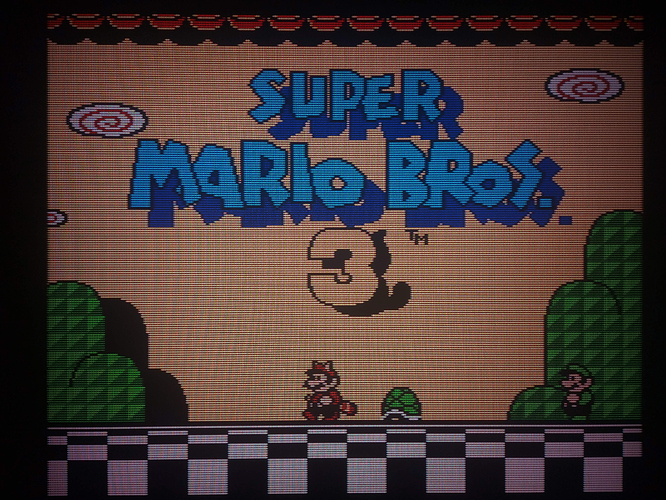

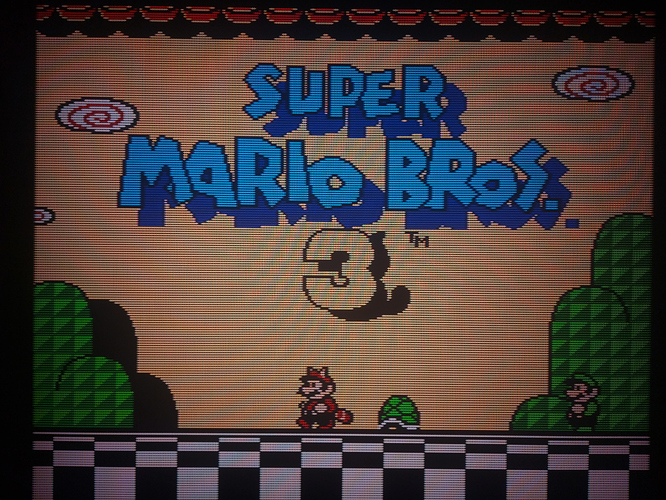

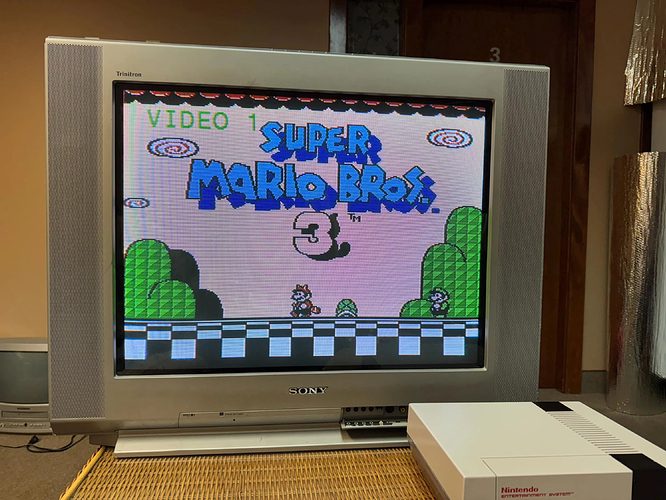

Now that we have several very talented people working on highly accurate NTSC emulation, it might be a good time to talk about sharpening circuits.

Currently, we have several options for emulating a composite video signal, and now we even have people working on console-specific outputs, comb filters, and such. To my knowledge, no one has yet attempted to digitize the sharpening circuits that TVs use to boost high-frequency content. This is basically what the user is controlling with the “sharpness” setting on a CRT.

Here’s what ChatGPT gave me… I have no idea if it’s useful.

Are we already doing this? Can the accuracy be improved by looking at the data sheets for actual TV circuits?

Replicating a 1980s CRT sharpening circuit in a shader involves simulating an analog video peaking filter using digital signal processing techniques. The basic principle is to detect and amplify high-frequency signal changes, which correspond to sharp edges in the video image.

The analog circuit: A peaking filter

In 1980s consumer CRTs, the “sharpness” control adjusted a peaking filter in the video amplifier. The circuit boosts the high-frequency components of the video signal, which carry fine detail and edges. A typical peaking filter behaves like a second-order bandpass boost added on top of the original signal. Because the analog signal is a one-dimensional waveform running along each scanline, this kind of peaking acts in the horizontal direction. The closest digital equivalent is a convolution with a small kernel, which a pixel shader can do with a few texture lookups around the current fragment.
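A one-dimensional, horizontal-only kernel is one way to sketch this kind of peaking. The fragment shader below is a minimal sketch, not a model of any particular TV circuit. It assumes desktop GLSL 3.30 and reuses the `inputTexture` and `texCoord` names from this post. The `sharpness` and `tapSpacing` uniforms are hypothetical parameters introduced only for illustration.

```glsl
#version 330 core

// Hypothetical uniforms: these names are illustrative, not from a real TV or shader preset.
uniform sampler2D inputTexture;
uniform float sharpness;   // 0.0 = no peaking; larger values boost edges more
uniform float tapSpacing;  // distance between taps in texels (1.0 = adjacent pixels)

in vec2 texCoord;
out vec4 fragColor;

void main()
{
    // Horizontal tap offset in normalized texture coordinates.
    float dx = tapSpacing / float(textureSize(inputTexture, 0).x);

    vec3 center = texture(inputTexture, texCoord).rgb;
    vec3 left   = texture(inputTexture, texCoord - vec2(dx, 0.0)).rgb;
    vec3 right  = texture(inputTexture, texCoord + vec2(dx, 0.0)).rgb;

    // 1D kernel [-k, 1 + 2k, -k] with k = sharpness.
    // The weights sum to 1, so flat areas are unchanged. The gain rises with
    // frequency and reaches 1 + 4k for detail whose period is two tap spacings.
    vec3 peaked = (1.0 + 2.0 * sharpness) * center - sharpness * (left + right);

    // No clamping here, so overshoot survives if the render target is floating point.
    fragColor = vec4(peaked, 1.0);
}
```

Widening `tapSpacing` moves the boosted band toward lower frequencies, which is a rough stand-in for tuning the center frequency of the analog filter. Whether that matches a given set would need checking against its data sheet.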

Shader implementation steps

- Sample neighboring pixels: In the fragment shader, sample the texture at the current pixel’s coordinate and at several neighboring pixels. The number of samples and their offsets determine the filter’s “radius” and strength.

```glsl
// Get current pixel color
// (texture() replaces texture2D() in GLSL versions that provide textureSize())
vec4 color = texture(inputTexture, texCoord);

// Size of one texel in normalized coordinates
// (textureSize returns integers, so convert before dividing)
vec2 texel = 1.0 / vec2(textureSize(inputTexture, 0));
float dx = texel.x;
float dy = texel.y;

// Sample the four direct neighbors
vec4 neighbor1 = texture(inputTexture, texCoord + vec2(dx, 0.0));
vec4 neighbor2 = texture(inputTexture, texCoord - vec2(dx, 0.0));
vec4 neighbor3 = texture(inputTexture, texCoord + vec2(0.0, dy));
vec4 neighbor4 = texture(inputTexture, texCoord - vec2(0.0, dy));
```

- Apply a convolution kernel: The core of the sharpening circuit simulation is a convolution operation. A common choice is a simple Laplacian-style sharpening kernel, a close relative of the unsharp mask. With four neighbors, its weights are:

  - Original pixel * (1 + 4 * weight)

  - Each neighboring pixel * (-weight)

The weights sum to 1, so flat areas keep their brightness. For a basic cross-shaped filter, the kernel can be applied like this:

```glsl
// Sharpening kernel weights
float weight = 1.0;                       // Adjustable "sharpness"
float centerWeight = 1.0 + 4.0 * weight;  // 5.0 when weight is 1.0
float edgeWeight = -weight;               // -1.0 when weight is 1.0

// Apply the kernel
vec4 sharpenedColor = centerWeight * color + edgeWeight * (neighbor1 + neighbor2 + neighbor3 + neighbor4);
```

- Handle signal “ringing”: A side effect of aggressive analog peaking filters was “ringing”, an over-emphasis that created a halo or double-edge effect around sharp transitions. To replicate this, you can:

  - Avoid clamping: Allow the result of the sharpening kernel to go outside the normal [0, 1] range, which produces noticeable bright halos. This needs a floating-point render target, since values written to a normalized framebuffer are clamped anyway.

  - Implement a “soft clip”: Simulate the analog electronics by gradually compressing the sharpened value back toward the normal range, roughly as an analog stage saturates.

```glsl
// A simple way to introduce bloom from over-emphasized signals
vec4 finalColor = vec4(sharpenedColor.rgb, 1.0);

// Use a separate bloom pass after this step, or mix in some over-bright pixels
// for an easy simulation of the halo effect.
vec3 overbright = max(vec3(0.0), finalColor.rgb - vec3(1.0));        // portion above 1.0
finalColor.rgb = min(vec3(1.0), finalColor.rgb) + overbright * 0.5;  // Example bloom
```
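The “soft clip” option is described above but not shown in code. Here is a minimal sketch of one way to do it. The `softClip` helper and its `knee` parameter are hypothetical, and the exponential curve is just a convenient smooth shape, not something taken from a real circuit.

```glsl
// Hypothetical helper: compresses values above `knee` so they approach 1.0
// smoothly instead of being cut off. `knee` must be below 1.0.
vec3 softClip(vec3 x, float knee)
{
    vec3 over = max(x - knee, 0.0);   // amount above the knee (0 below it)
    float range = 1.0 - knee;         // headroom between the knee and 1.0

    // Exponential roll-off: equals `knee` at the knee, with slope 1 there,
    // and approaches 1.0 as the input grows.
    vec3 compressed = knee + range * (1.0 - exp(-over / range));

    // Below the knee `compressed` is larger than x, so min() keeps x unchanged.
    // Undershoot below 0.0 is simply hard-clipped in this sketch.
    return max(min(x, compressed), vec3(0.0));
}
```

With the variables from the snippets in this post, it could be applied as `finalColor.rgb = softClip(sharpenedColor.rgb, 0.8);`, where 0.8 is an arbitrary starting value to tune by eye.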

Horizontal Filter Parameters
