It does indeed, but… well, who played the MegaDrive on hi-res computer monitors in 1991? More than half of the screen is pure black. If sharpness is the target, well, you said it yourself: a 1080p LCD will be sharper. Why then bother with black scanlines and shadowmasks?

I’m not interested in nostalgia or some dubious notion of “authenticity.” I’m interested in what actually improves the objective picture quality. If someone had offered me a high-end RGB monitor in 1991, or if I had been able to afford one, going for the RGB CRT would have been a no-brainer; refusing it would have been slightly nuts. Objective picture quality > nostalgia. As far as half of the screen being pure black goes… well, that’s what a CRT screen does. A CRT screen consists mostly of black space, with the image made up of brightly colored dots.

Also, look at that RGB monitor used on the Toys R Us SNES display unit. Flat screen, check. RGB, check. Sharper than any consumer TV, check. This is in the early 90s.

The scanlines and shadowmask are essential to recreating the native display environment for 240p content. Without scanlines, you’re looking at an image that is visually identical to a line-doubled image. Without the phosphor structure and lower TVL of the mask, the image is far too smooth (which may seem counter-intuitive). The scanlines and mask are both essential for reducing the high-frequency content, and they make the image much easier on the eyes, objectively. This relies on a well-known feature of the human visual system, the eye blending closely spaced dots of color, which is also what pointillist painting exploits. 240p content was never meant to be displayed as raw pixels; it’s like nails on a chalkboard for your eyes.
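To make the line-doubling comparison concrete, here’s a minimal Python sketch. It assumes a clean 4x integer scale (240 lines to 960 output rows) and uses made-up per-row weights for the scanline; real shaders use smoother beam profiles, so treat the numbers as illustrative only.

```python
# Minimal sketch: line doubling vs. a crude scanline pattern for 240p content.
# The 4x scale and the per-row weights are illustrative assumptions, not the
# beam profile of any particular shader.

SCALE = 4  # output rows per source line (240 * 4 = 960 rows)

# Line doubling (here, quadrupling): every output row repeats the source line.
LINE_DOUBLED = [1.0] * SCALE

# Crude scanline: dimmer edges, a bright core, and a dark gap between lines.
SCANLINE = [0.6, 1.0, 0.6, 0.0]


def render_column(source: list[float], weights: list[float]) -> list[float]:
    """Expand one column of source pixel values into weighted output rows."""
    return [value * w for value in source for w in weights]


def mean(values: list[float]) -> float:
    return sum(values) / len(values)


if __name__ == "__main__":
    white = [1.0] * 240  # a solid white column of 240p content
    doubled = render_column(white, LINE_DOUBLED)
    scanned = render_column(white, SCANLINE)
    print(len(doubled), mean(doubled))  # 960 rows, no dark gaps
    print(len(scanned), round(mean(scanned), 3))  # 960 rows, every 4th row black
```

With these hypothetical weights, a solid white column keeps full brightness when line-doubled but averages about 0.55 with scanlines, since every fourth output row is black.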

The image I posted immediately above is actually a nearly perfect recreation of a 360 TVL aperture grille CRT without any of the artifacts that people sought to eliminate on their CRTs. For reference, a 20" TV from the 80s or 90s was around 300 TVL. The only thing lacking is the mask strength, which can’t be increased any further without compromising too much on brightness. This actually looks more like a 20" Trinitron than any shader I’ve seen at 1080p.

At 1080p, you can’t add too much glow/bleed/blending without significantly reducing the emulated TVL, which winds up making the image look blurrier than any well-functioning consumer CRT ever was. At 720p you can just forget about mask emulation.

I think a lot of people are misidentifying what it is about a CRT screen that makes the image look better. CRTs weren’t blurry; they were lower resolution. A lower resolution image is not the same thing as a blurred image. People are mistakenly conflating blur with smooth appearance. A smooth-looking image is not the same thing as a blurry image, and an image on a CRT appeared smooth for reasons that have almost nothing to do with blur.

At 4K and higher resolutions we can have more accurate blending (really, 8K is needed to be truly accurate), but it becomes moot with HDR-capable displays. With HDR you can create actual glow and blending by cranking up the brightness and getting the individual emulated phosphors in the RGB mask to glow as brightly as the real thing on a CRT.

Those two shouldn’t matter; you can just ignore those.

Of course the scanlines and mask are essential; I never said or even implied that 240p content should be displayed raw (nails on chalkboard indeed!), but picture quality is both objective and subjective. And I think that the screen you posted, as accurate as it might be in, let’s say, scientific terms, is too sharp and dark. I wouldn’t want to play games that way. I’m also a huge fan of CRT shaders, and to me something like this looks much nicer. But to each their own, I suppose : )

Objectively, that shot you posted is actually far blurrier than any consumer CRT using a decent video signal. Subjectively, it looks nothing like any CRT I’ve seen in person. That much blur causes the eye to constantly strain to find the edges of objects, and would give me a headache after just a few minutes. I can’t stand bilinear filtering or artificial blur. CRTs weren’t blurry like that; I’ve owned several over the years. I also have 20/20 vision, for reference. Also, the pincushion distortion stuff is really weird; that’s the kind of thing you would typically try to eliminate via the service menu. Again, though, I’m not really motivated by nostalgia.

You suggest that the shot I posted is too dark - did you try adjusting your backlight to 100%, as I suggested? On my display (an ASUS VG248QE, for reference), doing so results in a peak brightness of around 200 cd/m2, which should provide plenty of “punch” in a room with a few lights turned on. Most CRTs had a maximum brightness that is slightly lower than this. This should also mitigate the sharpness when viewed at a normal viewing distance, since the human eye will blend things together when the emulated phosphors are objectively bright enough. Any LED-lit display should be capable of attaining adequate brightness with these settings.

Regarding sharpness, here’s the problem: at 1080p, you can only go up to 360 TVL, with each “phosphor” being one pixel wide on the LCD. The “glow” of a phosphor on a CRT is narrower than an individual phosphor when viewed in extreme close-up. So we can’t actually add much (if any) glow at 1080p without either overly blurring the image or reducing the TVL. However, the next-lowest TVL we can emulate at 1080p is 180 TVL, which is far lower than even the cheapest consumer CRTs. The solution is to create actual glow by cranking up the display backlight to 100% in order to get the emulated “phosphors” to output as much light as the real thing on an actual CRT.
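The TVL figures above follow from simple arithmetic: TVL counts lines across a width equal to the picture height, so with three stripes (R, G, B) per triad, an emulated grille gets output height / (3 × stripe width in pixels). A quick Python sketch, assuming 4:3 content that fills the full screen height and whole-pixel stripes:

```python
def emulated_tvl(output_height_px: int, stripe_width_px: int, stripes_per_triad: int = 3) -> float:
    """TVL of an emulated aperture grille with whole-pixel R, G, B stripes.

    Assumes the 4:3 image fills the full output height, so one picture height
    of width contains output_height_px LCD pixels.
    """
    return output_height_px / (stripes_per_triad * stripe_width_px)


if __name__ == "__main__":
    for height in (720, 1080, 2160):
        one_px, two_px = (emulated_tvl(height, w) for w in (1, 2))
        print(f"{height}p: 1-px stripes -> {one_px:.0f} TVL, 2-px stripes -> {two_px:.0f} TVL")
```

This reproduces the 360 and 180 TVL figures for 1080p. At 720p, even 1-pixel stripes only reach 240 TVL, below the roughly 300 TVL of a typical 20" set, while 4K allows 720 or 360 TVL, which is where the extra headroom for blending comes from.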

Furthermore, “glow” isn’t something that (just) happens directly on a CRT screen. It’s something that occurs somewhere between the surface of the screen and the eye of the observer. To have “accurate” glow, we need to replicate what a CRT screen is actually doing. A CRT is basically a very large amount of black space (more than half of the screen), with a bunch of very brightly colored tiny dots making up the image (or tiny slivers on an aperture grille). In terms of how a CRT screen actually works, I don’t see much room for improvement in the settings I posted. The mask strength could be improved, but only with a brighter display than the one I currently have.
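To put rough numbers on “mostly black space”: if every LCD pixel in the emulated grille lights only one of R, G or B, a white field averages 1/3 of the panel’s white luminance (the luminance weights of R, G and B sum to one), and scanlines take away another chunk. This is back-of-the-envelope arithmetic; the 50% scanline factor is an assumption, so substitute your own measurements.

```python
def average_white_luminance(peak_cd_m2: float, mask_fill: float, scanline_fill: float) -> float:
    """Screen-averaged luminance of a full-white field after mask and scanlines.

    mask_fill and scanline_fill are the fractions of light each pattern passes,
    averaged over the screen (hypothetical inputs for illustration).
    """
    return peak_cd_m2 * mask_fill * scanline_fill


if __name__ == "__main__":
    # Idealized RGB grille: each pixel shows one channel at full, others off,
    # so a triad averages 1/3 of white. Scanlines assumed to pass 50%.
    for peak in (200.0, 400.0):
        avg = average_white_luminance(peak, mask_fill=1 / 3, scanline_fill=0.5)
        print(f"panel white {peak:.0f} cd/m2 -> average about {avg:.0f} cd/m2")
```

Under these assumptions the average lands at about a sixth of the panel’s full-white level, which is why maxing out the backlight matters so much.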

I calibrate TVs as a hobby and occasionally as a side job. Very often, a calibrated image appears too dark to someone who is used to viewing their TV on “torch mode,” with the contrast and brightness set too high. The calibrated image shows more detail and is easier on the eyes. Sometimes it takes a few days before someone adjusts to the calibrated settings. Sometimes they just never see the benefit. A person might subjectively prefer an image that is objectively worse in terms of visible detail and eye strain. Even after being shown test patterns which prove the objective picture quality of the calibrated image is superior, they still don’t think it looks good for some subjective, non-scientific reason. In such a case, the problem doesn’t lie with the image, but with the visual-cognitive system of the observer.

I read the whole thing, I really appreciate your technical insight and I wish I could see your screen in person. No need to get defensive.

I’m not a screen calibrator, but I always work, play and see films on Spyder-calibrated screens, because what I am is a photographer and a videographer. So no, I’m not half blind as you seem to suggest. Again, videogaming is about as scientific as it is artistic. I admire your scientific approach (I really do), and I think there’s a lot of interesting stuff to learn from people like you. It’s just that from an artistic point of view, those ‘superior’ settings get in the way.

Do you play modern games like that? Do you watch films like that? Good for you if that’s the case, but I wouldn’t, for obvious reasons that can also be applied to retro gaming.

I didn’t mean to come off as defensive and I apologize if that’s the case.

It’s just that I’m not sure what you’re objecting to in the image I provided, unless you don’t like it for some mysterious subjective reason which is inherently difficult to articulate. Not much to talk about if that’s the case. You mentioned brightness earlier but unless you’re used to blasting your eyes with too much brightness and contrast, a peak brightness of 200 cd/m2 should be plenty bright in a room with average lighting conditions.

I’m not sure what you’re asking - I always watch films and play modern games on screens calibrated to the sRGB standard because it’s easier on the eyes and provides more accurate color and more detail, and is closest to what the creators intended you to see. Are you saying that you don’t watch movies or play games on a calibrated screen? Of course, I wouldn’t use the posted settings for modern games, if that’s what you mean. If you’ve found something you’re satisfied with, good for you, but I personally can’t understand why people would want to add so much artificial blur to their games. Blur isn’t why 240p content looks good on a CRT, IMO.

Here’s an image with a bit more blending/bloom between the scanlines, which may be more to your liking. Again, this will most likely require you to max out your display backlight to get an adequately bright image. There’s no alteration of the colors and no blur or other artifacts added to “enhance” the picture. The scanlines here are a bit less than 1:1, which is probably closer to what you prefer, and the RGB mask strength has been increased somewhat. Actually, I think I might prefer this to my original shot.

I adjusted “maskDark” to 0.20 and “maskLight” to 2.00; this leads to a bit of clipping but it’s not too terrible and shouldn’t be noticeable when playing a game. I then changed “mask/scanline fade” to 0.60.
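For anyone wondering why a maskLight of 2.00 clips: as I understand it, the aperture-grille mode of Timothy Lottes’ CRT shader (where the maskDark/maskLight names come from) starts every channel at maskDark, sets one channel per pixel column to maskLight, and multiplies the color by that. The Python sketch below models only that step, assumes the shader in use here works the same way, and ignores “mask/scanline fade” since I don’t know its exact math.

```python
MASK_DARK = 0.20
MASK_LIGHT = 2.00


def grille_mask(x: int, mask_dark: float = MASK_DARK, mask_light: float = MASK_LIGHT) -> list[float]:
    """Per-channel multipliers for output pixel column x (R, G, B stripes in turn)."""
    mask = [mask_dark] * 3
    mask[x % 3] = mask_light  # column 0 -> R, 1 -> G, 2 -> B
    return mask


def apply_mask(rgb: tuple[float, float, float], x: int) -> tuple[float, ...]:
    """Multiply a linear RGB value by the mask, then clip to the displayable 0..1 range."""
    return tuple(min(1.0, max(0.0, c * m)) for c, m in zip(rgb, grille_mask(x)))


def triad_average(rgb: tuple[float, float, float]) -> tuple[float, ...]:
    """Per-channel output averaged over one three-column triad."""
    columns = [apply_mask(rgb, x) for x in range(3)]
    return tuple(sum(col[i] for col in columns) / 3 for i in range(3))


if __name__ == "__main__":
    # Without clipping, each channel would average (2.0 + 0.2 + 0.2) / 3 = 0.8x input.
    for level in (0.25, 0.5, 0.75, 1.0):
        print(level, round(triad_average((level, level, level))[0], 3))
```

In this model, inputs up to 0.5 simply scale by 0.8, but above 0.5 the bright stripe saturates, so 0.75 and 1.0 end up only about 0.03 apart (roughly 0.433 vs 0.467). That highlight compression is the “bit of clipping”, and since it only affects the brightest values it fits with it being hard to notice in a game.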

Here’s a comparison shot. Which one is the CRT and which one is the shader? Well, it’s pretty obvious due to the scaling artifacts that are currently unavoidable with PSX emulators, but if you ignore those, the two images are very close. Also bear in mind that the image of the CRT is overbloomed and the CRT would exhibit less bloom/glow when seen in person; a lot of that is added by the camera (scanlines should still be clearly visible over white on a properly calibrated screen), and I’m not confident that the CRT has been calibrated to the right colorspace. It’s also worth remembering that things look different in person than they do in photos. It’s really kind of amazing how cranking up the backlight on an LCD using these settings can approximate the glow and halation seen on a CRT, but that’s almost completely lost in the photo.

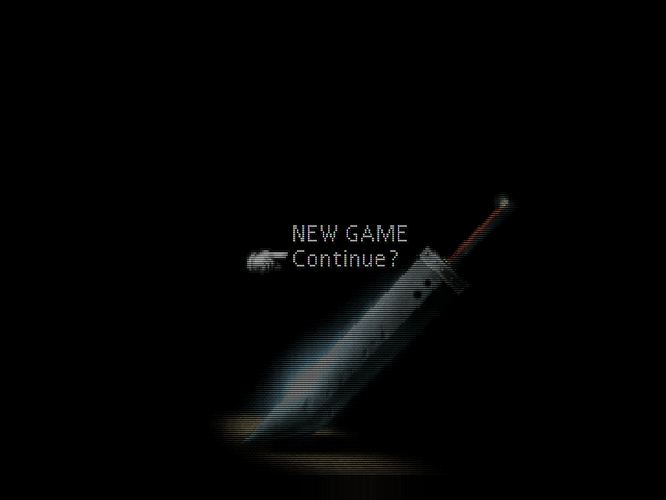

Here’s a GPU screenshot of the first image that does a better job of showing the mask and scanlines:

Does RetroArch support HDR? I would have imagined that RetroArch runs as SDR content, and therefore even on an HDR-capable display it would not take advantage of HDR peak brightness levels.

When this does become possible I agree that phosphor emulation will be very close to real phosphors.

AFAIK, you don’t need to actually call on HDR, you just need to max out the display backlight on an HDR-capable display. Just to meet the HDR spec, an HDR-capable display should have enough spare brightness to accomplish accurate phosphor emulation.

I’m not sure if it’s just a matter of maxing the backlight. As far as I recall, for Windows at least to output in HDR to an HDR-capable display it needs to be actually running an HDR app or game. Otherwise the display will simply detect an SDR signal and the peak brightness will be limited accordingly.

TBH, I’m not 100% sure. It’s my understanding that you don’t even need to use HDR at all to accomplish what we’re talking about. Also, I’m pretty sure Windows actually has an option to manually enable HDR and has its own brightness adjustment that can then be applied to whatever application you’re running.

That is impressive, yes… but see the difference in sharpness between those shots? The CRT is way softer. Look at the diagonal line in the capital N. You can almost see raw pixels there. Regarding brightness, my other ‘complaint’, I suppose you have got a blazing, modern, HDR capable LCD. That’s why I said I would love to see things running on your screen with my own eyes.

I mentioned these points already but they bear repeating.

- The CRT photo, because it’s a photo of a CRT, is going to naturally show more bloom than what is actually there in person. That’s just pretty much inevitable given the nature of how CRTs and cameras work. It’s not a very good photo TBH; I kind of regret posting it. The CRT is not calibrated correctly and/or the camera is adding its own bloom.

- Both images that I posted, the GPU screenshot and the emulated shot, are going to show less bloom and glow than what is actually there in person with the LCD’s backlight cranked up, and again, the camera is doing its own thing to the image. See that halation, though? That’s real.

Didn’t mention this earlier, but it’s also two completely different mask structures - the CRT is a slotmask, while the emulated images are simulating an aperture grille; aperture grilles are inherently sharper…

Also, no idea what kind of signal the CRT is being fed: RGB, composite, or whatever.

As far as the display I’m using, I mentioned it earlier - it’s an ASUS VG248QE. Not HDR capable. It’s on the brighter end of LED-lit LCDs, but it’s not really exceptional. The max advertised brightness of this display is 350 cd/m2, but you can push it above 400 cd/m2 if you max out both contrast and brightness.

A lot of these settings are display-dependent because displays vary in their peak brightness and motion clarity. Another reason I can’t stand blur is that the LCD already adds blur when things are in motion, and adding blur on top of this just completely wrecks the picture quality when things are in motion. Due to differences in individual displays, we can’t effectively share settings, but we can share what methods we used to arrive at our particular settings.
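To put a number on the motion-blur point: on a sample-and-hold LCD, each frame stays lit for the whole refresh, and while your eye tracks a moving object the held frame smears across roughly speed × hold time pixels. This is the usual persistence approximation, not something measured in this thread; it ignores pixel response time, so a real LCD does somewhat worse, and any blur the shader adds stacks on top.

```python
def sample_and_hold_blur_px(speed_px_per_s: float, refresh_hz: float, persistence: float = 1.0) -> float:
    """Approximate eye-tracking motion blur width in pixels.

    persistence is the fraction of each refresh the frame stays lit
    (1.0 = full sample-and-hold LCD; lower values approximate short-persistence
    displays such as a CRT). Pixel response time is ignored.
    """
    hold_time_s = persistence / refresh_hz
    return speed_px_per_s * hold_time_s


if __name__ == "__main__":
    speed = 960.0  # example scroll speed in pixels per second
    print(sample_and_hold_blur_px(speed, 60.0))  # full persistence at 60 Hz
    print(sample_and_hold_blur_px(speed, 60.0, persistence=0.25))  # lit 25% of each refresh
```

At 960 px/s and 60 Hz that works out to about 16 px of smear at full persistence, versus about 4 px if the image is only lit for a quarter of each refresh.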

Good work!

So guys, where can I now find the optimized (or best) version of zfast_crt+dotmask.glslp, and could someone give me advice on how to use it on my RPi?

Go to the very top of the thread and about 6-8 posts down Hunterk has the code posted for zfast_crt+dotmask.

Unfortunately, adding the dotmask makes it a bit too slow to run full speed on the RPi (to my knowledge). That’s why the original zfast crt shader just has the one mask effect.

Would it be possible to have a slang version of zfast_crt+dotmask.glslp, please?

Same here!


Did I never post that?

I could’ve sworn I slapped together a build for that.

It does now!