I think that’s a side effect of the camera’s dynamic range rather than an actual behavior. That is, I believe it’s just bloom/flare-out in the photo.

Well, maybe the CRT brightness is leaking over the mask, if that’s possible.

You could probably mimic the effect by modulating the mask strength with the inverse of the luminance, but this monkeys with the black level in a way that I don’t love.
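Here is a minimal sketch of the inverse-luminance idea. It assumes linear RGB in [0, 1], Rec. 709 luminance weights, and a simple blend between the unmasked and fully masked color. The function names are hypothetical, and this is not how any particular shader implements it:

```python
def luminance(rgb):
    """Rec. 709 relative luminance of a linear RGB triple in [0, 1]."""
    r, g, b = rgb
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def apply_mask(rgb, mask_rgb, base_strength):
    """Blend a pixel toward its masked value.

    mask_rgb: the mask's per-channel weight at this position, e.g. (1, 0, 0)
              for a red stripe (hypothetical representation).
    base_strength: 0 = no mask, 1 = full mask.
    """
    # Inverse-luminance modulation: bright pixels get a weaker mask.
    strength = base_strength * (1.0 - luminance(rgb))
    return tuple(c * ((1.0 - strength) + strength * m) for c, m in zip(rgb, mask_rgb))


print(apply_mask((1.0, 1.0, 1.0), (1.0, 0.0, 0.0), 1.0))  # white: mask almost gone
print(apply_mask((0.2, 0.2, 0.2), (1.0, 0.0, 0.0), 1.0))  # dark grey: mostly masked
```

In this toy model, dark pixels keep nearly the full mask while near-white pixels lose most of it, which is where a "leaking over the mask" look would come from.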

OTOH, this also happens with luma-linked beam width, but you and guest have handled that admirably, so maybe this isn’t that big of a deal.

We need to make sure we’re comparing apples to apples, I think.

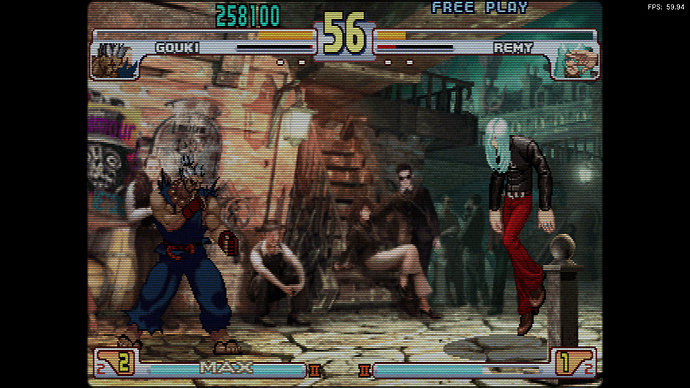

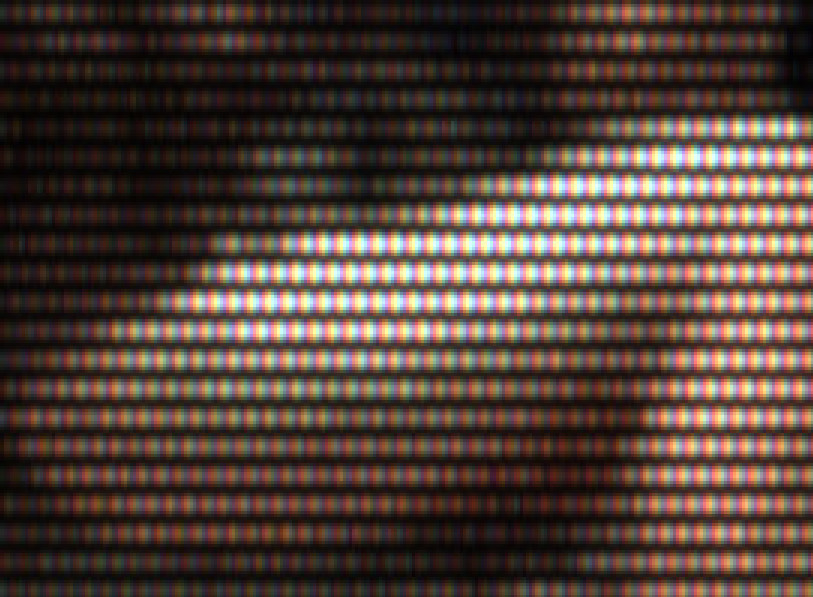

For the comparison to be useful, we need a photo of the aperture grille CRT with the individual phosphors in focus (i.e., able to see the RGB stripes). Same thing with the shader: it should be a photo of the actual LCD screen (preferably a 4K one), with the emulated phosphors in focus. Check out the Megatron shader thread for some excellent side-by-side comparisons.

CRT displays, IMO, had different chromatic/lighting properties. A good example: a flat display running a shader at full mask strength needs at least 2x the nits to properly ‘emulate’ a CRT TV at 1x nits. The images above might have been taken at about the same brightness.

Are you saying you need (for example) 200 nits + full mask strength to equal a 100-nit CRT? That sounds right for the 2px masks, which result in a 50% reduction in brightness. For the 3px masks I think you need 3x the nits, and for slot masks it’s even worse.
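The arithmetic behind those multipliers can be sketched as follows, assuming a full-strength mask is a binary pattern of per-pixel (R, G, B) weights and the input is full white. The 2px and 3px layouts are the usual magenta/green and RGB patterns; the slot mask is a hypothetical toy layout, not any real shader's pattern:

```python
from fractions import Fraction

# Each mask is a list of rows; each row lists per-pixel (R, G, B) weights
# for one full-strength period (1 = channel passes, 0 = channel blocked).
MAGENTA_GREEN_2PX = [[(1, 0, 1), (0, 1, 0)]]
RGB_3PX = [[(1, 0, 0), (0, 1, 0), (0, 0, 1)]]
# Hypothetical toy slot mask: RGB triads with a black gap row every fourth row.
TOY_SLOT = [RGB_3PX[0]] * 3 + [[(0, 0, 0)] * 3]


def transmission(mask):
    """Fraction of a full-white signal that survives the mask."""
    passed = sum(sum(px) for row in mask for px in row)
    total = sum(3 * len(row) for row in mask)
    return Fraction(passed, total)


def required_panel_nits(crt_nits, mask):
    """Full-white panel brightness needed to match crt_nits after masking."""
    return crt_nits / transmission(mask)


for name, mask in [("2px", MAGENTA_GREEN_2PX), ("3px", RGB_3PX), ("toy slot", TOY_SLOT)]:
    print(name, float(required_panel_nits(100, mask)))
```

For a 100-nit CRT target this gives 200 nits for the 2px mask, 300 for the 3px mask, and 400 for the toy slot mask, matching the 2x/3x/"even worse" progression.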

I started experimenting with a -50 mask value in crt-guest-advanced and I am happy with the results. Try it and see if this is something that interests you. With HDR it looks super bright at 200 paper white (800 peak white).

I wrote/meant that you need at least a ‘2x nits’ modern display, but you are quite right that the brightness requirement keeps increasing from there.

I’m not sure about these nit comparisons, as a CRT is a pulse display and an LCD is sample-and-hold. One has a very intense pulse of nits followed by a steep fall-off, while the other constantly emits photons at a relatively steady rate. They’re very different graphs.

Sure, you can average out the pulse, but I’m not sure our brains interpret it like that. I think that intense pulse is perceived as many more nits in total than the same light spread out over a longer period of time with a lower peak.

That’s the temporal dimension alone; then take into account the differences in the spatial dimension.
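To put the averaging argument in numbers, here is a sketch that models each phosphor flash as a single exponential decay per frame. The peak and decay constant are made-up illustrative values, not measurements, and real phosphor decay is more complex than one exponential:

```python
import math


def average_luminance(peak_nits, decay_s, frame_s):
    """Time-averaged luminance of one exponentially decaying flash per frame.

    Integrates peak * exp(-t / decay) over one frame and divides by the
    frame time: peak * decay / frame * (1 - exp(-frame / decay)).
    """
    return peak_nits * decay_s / frame_s * (1.0 - math.exp(-frame_s / decay_s))


# Illustrative, made-up values: 10,000-nit peak, 0.1 ms decay, 60 Hz refresh.
peak = 10_000.0
avg = average_luminance(peak, decay_s=1e-4, frame_s=1 / 60)
print(f"average ~ {avg:.1f} nits, peak/average ~ {peak / avg:.0f}x")
```

With these hypothetical values the time average is around 60 nits, so a sample-and-hold display matching that average would have a peak more than 100x lower, which is exactly the gap the perception question is about.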

From my limited testing with an HDR600 monitor, it gets reasonably close in brightness to a 2730 PVM. Certainly not quite as bright, but I think a modern QD-OLED or LCD will surpass it with 100% mask and a 4px mask. Whether a colorimeter agrees with that over the whole screen is a different matter.

What it won’t do is mimic the pulse, though, which is really the next step, and one that is starting to be taken with backlight strobing, as I’ve said (and bored you with) elsewhere.


Something is fundamentally different from the way the light comes off a real shadow mask and how it comes off of these mask shaders. I have always ended up turning masks off because it looks too artificial. The effect isn’t convincing.

Maybe HDR is the solution, but even at high brightness something looks off about the mask effects. It looks ‘pixelly’ I guess.

I once tried preblending the mask colors, but it didn’t look good. Instead, I wonder whether taking existing masks and substituting white for green would have a better effect. I can’t remember if there are subpixel masks that do that.

A fundamental problem for masks and scanlines is currently panel response times combined with sustained brightness levels. Sometimes one must invest in a proper display: the best bet is OLED, and the second-best bet is a 180Hz or 240Hz display for sub-frame effects. I’m still a bit cautious about OLEDs displaying masked content for very long periods; it needs some shifting mechanism over time to avoid burn-in. Otherwise, though, OLEDs can offer the best CRT emulation experience.
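Sub-frame effects come down to a simple duty-cycle calculation. A sketch, assuming a display refresh that is an integer multiple of the content rate and one or more fully lit sub-frames followed by black ones; the function names are hypothetical:

```python
def subframe_schedule(display_hz, content_hz, lit_subframes=1):
    """One True/False entry per display refresh within a content frame:
    True = sub-frame shows the image, False = black sub-frame."""
    if display_hz % content_hz:
        raise ValueError("display rate must be an integer multiple of the content rate")
    n = display_hz // content_hz
    if not 1 <= lit_subframes <= n:
        raise ValueError("lit_subframes must be between 1 and the sub-frame count")
    return [i < lit_subframes for i in range(n)]


def duty_cycle(schedule):
    """Fraction of time the image is actually lit."""
    return sum(schedule) / len(schedule)


def lit_nits_needed(target_avg_nits, schedule):
    """Brightness each lit sub-frame needs so the time average hits the target."""
    return target_avg_nits / duty_cycle(schedule)


print(subframe_schedule(240, 60), lit_nits_needed(100, subframe_schedule(240, 60)))
print(subframe_schedule(180, 60), lit_nits_needed(100, subframe_schedule(180, 60)))
```

At 240 Hz with one lit sub-frame out of four, the lit sub-frame has to be 4x as bright to keep the same average, which is why sustained brightness and response time matter so much for these effects.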

Add a notch of mask mitigation and a proper viewing distance, and the experience should be very nice.
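One rough way to reason about viewing distance is to find where one mask period shrinks below normal visual acuity, taken here as about 1 arcminute. That threshold is a simplifying assumption (acuity varies by person and by contrast), and the 27-inch 4K panel is a hypothetical example:

```python
import math


def pixel_pitch_mm(diagonal_in, width_px, height_px):
    """Pixel pitch of a panel with square pixels, from its diagonal and resolution."""
    return diagonal_in * 25.4 / math.hypot(width_px, height_px)


def distance_for_acuity_mm(period_mm, acuity_arcmin=1.0):
    """Viewing distance at which one mask period subtends `acuity_arcmin`."""
    return period_mm / math.tan(math.radians(acuity_arcmin / 60.0))


# Hypothetical example: 27-inch 4K panel, 3px RGB mask.
pitch = pixel_pitch_mm(27, 3840, 2160)
print(f"{distance_for_acuity_mm(3 * pitch) / 1000:.2f} m")
```

For this example the distance comes out at roughly 1.6 m; sitting much closer makes the individual mask stripes resolvable, which is one plausible contributor to the "pixelly" look discussed below.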

Believe me: the normalized sum of mask weights over the entire mask effect width (i.e. 3 pixels for RGB) should be (1.0, 1.0, 1.0) or very close to it, or there will be noticeable tinting.
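A quick way to check a mask for tinting is to sum each channel across one period and normalize. The normalization isn't specified above; this sketch assumes dividing by the largest channel sum, and the helper names and the tolerance are hypothetical:

```python
def normalized_mask_sum(mask_row):
    """Per-channel sum of mask weights over one period, divided by the largest
    channel sum. (1.0, 1.0, 1.0) means no channel is favored, i.e. no tint."""
    sums = [sum(px[c] for px in mask_row) for c in range(3)]
    peak = max(sums)
    if peak == 0:
        raise ValueError("mask blocks every channel")
    return tuple(s / peak for s in sums)


def is_tint_neutral(mask_row, tolerance=0.02):
    """Tolerance is an arbitrary assumption for 'very close to (1, 1, 1)'."""
    return all(abs(s - 1.0) <= tolerance for s in normalized_mask_sum(mask_row))


RGB = [(1, 0, 0), (0, 1, 0), (0, 0, 1)]
GREEN_AS_WHITE = [(1, 0, 0), (1, 1, 1), (0, 0, 1)]  # the "substitute green with white" idea
print(normalized_mask_sum(RGB), is_tint_neutral(RGB))
print(normalized_mask_sum(GREEN_AS_WHITE), is_tint_neutral(GREEN_AS_WHITE))
```

A plain RGB stripe mask comes out neutral, while swapping green for white gives (1.0, 0.5, 1.0), i.e. a magenta cast unless the weights are rebalanced.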

This is a pretty old thread.

Yes, you need around 250 nits on full white with shaders applied for it to feel like a CRT, in my experience.

For a while, 100-200 nits with shaders was deemed “adequate,” but my recent experiments with HDR indicate that we really need more than this. 100 nits on an LCD isn’t the same as 100 nits on a CRT; the CRT phosphor gets to something like 10,000 nits for a few nanoseconds.

I think the solution to this is just more brightness. The phosphors are still red, green, and blue in the CRT photo; if the camera settings weren’t overexposing things, we might see them appearing as red, green, and blue.

Similarly, we can get the same overexposed effect with the LCD/shader combination if we overexpose the shot via the camera settings.
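The overexposure effect is easy to model with a toy camera: each sensor channel picks up a little of the other channels' light, gets scaled by exposure, and clips at 1.0. The crosstalk value is a made-up assumption standing in for overlapping color filters, not a measured sensor property:

```python
def capture(rgb_light, exposure, crosstalk=0.1):
    """Toy camera model. Each sensor channel also picks up `crosstalk` times the
    light in the other two channels (hypothetical value), is scaled by exposure,
    then clips at 1.0 (sensor saturation)."""
    r, g, b = rgb_light
    mixed = (r + crosstalk * (g + b), g + crosstalk * (r + b), b + crosstalk * (r + g))
    return tuple(min(1.0, exposure * c) for c in mixed)


red_phosphor = (1.0, 0.0, 0.0)
print(capture(red_phosphor, exposure=1.0))   # still reads as red
print(capture(red_phosphor, exposure=20.0))  # every channel clips: reads as white
```

At normal exposure the red phosphor records as (1.0, 0.1, 0.1); at 20x exposure every channel clips and it records as white, whether the light came from a CRT or an LCD running a mask shader.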

This is something easy to experiment with and try out for yourself.

Also, FWIW, local dimming has led me back to no mask / light mask setups, so everything just comes full circle.

Instead of asking “is this adequately bright?” I’ve been asking “is this too bright?”

A MiniLED LCD is still a backlit display and depends on brightness for its contrast ratio.

I agree with @anikom15 regarding the pixelly look of masks on digital displays. It always bothered me a little, but I assumed there wasn’t much to be done about it, and given how good CRT shaders look in general, I moved on.

And I always admired @Nesguy’s commendable quest to make 100% masks look as realistic as possible, even as we argued endlessly about whether they were too dark. These days I know a little more, understand his methodology better, and see great potential in it.

By the way, one day, while experimenting with masks (specifically, trying to make them look more organic and round), I got this effect. I know it’s completely unrealistic, but I find it pretty cool, so I saved the preset. What do you guys think? I call it the “egg mask.”

lmao no way, I don’t believe it!

I wonder how the underlying LCD pixel layout affects things. The other parts of shaders do a lot of work to smooth out and soften the image, and in a way the masks take things a step back, put it against a grid, and somehow it leads to that pixelly look. I don’t know why. Perhaps our brains are aware of the underlying grid in some way and we perceive it as sharper than what we get from a real CRT.

I know what you mean; I think it’s more of an issue with slot mask setups. Aperture grille is already kind of pixelated (basically the same structure that modern LCDs use), so either it’s not as affected by the subpixel structure or I just expect it to look that way.

I adjust the NTSC Resolution Scale and Sharpness to fine-tune the overall phosphor and image finish.

In other cases, I additionally use upscaling shaders like XBR-LEVEL-2 and tweak them so that they don’t remove all of the aliasing and “retroness” of the image.

We have to understand that CRT phosphors are shaped slightly differently from LCD/OLED subpixels, so that’s a different foundation on which we are building our image.

CRT Royale has a uniqueness about its look that is a little less digital in my opinion. This is probably because it includes dust/noise/degradation that is present in real CRT phosphors.

OLED’s glass panels, near-infinite viewing angles, and inky colours (in addition to its blacks) help bolster its impact, but it does fall short in several areas as well, and those are just as important.

I struggled with getting red to look red at lower saturation levels.

So a lot might be overlooked when it comes to those displays.

Perhaps we could somehow apply antialiasing and/or interpolation to the masks themselves? I actually asked about that recently, here
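One way to try antialiasing a mask is to treat it as a continuous pattern and box-filter it over each output pixel by supersampling. This is a sketch of that general technique for a vertical RGB stripe mask, not an existing shader's implementation; the names and the sample count are assumptions:

```python
def stripe_channel(x, period):
    """Channel (0 = R, 1 = G, 2 = B) a continuous RGB stripe mask shows at x."""
    return int((x % period) / period * 3) % 3


def mask_pixel(i, period, samples=16):
    """Box-filtered mask weights for output pixel i, which covers [i, i + 1):
    average the continuous mask over `samples` evenly spaced points."""
    acc = [0.0, 0.0, 0.0]
    for s in range(samples):
        x = i + (s + 0.5) / samples
        acc[stripe_channel(x, period)] += 1.0 / samples
    return tuple(acc)


print([mask_pixel(i, period=3) for i in range(3)])    # integer period: plain RGB
print([mask_pixel(i, period=4.5) for i in range(5)])  # non-integer period: blended edges
```

With a 3px period the result matches a plain RGB mask, while a non-integer period such as 4.5px yields blended pixels like (0.5, 0.5, 0.0) at stripe boundaries instead of snapping every stripe to the LCD grid. Each pixel's weights still sum to 1.0, so this does not by itself introduce tinting.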
