This weekend is the tenth anniversary of my first public post about a CRT shader I was working on, which to my knowledge was the first CRT shader.

I should stress that there were important predecessors, such as the long history of scanline filters as well as Blargg’s ground-breaking NTSC libraries. And there were CPU-based television or CRT simulations such as XAnalogTV from 2003 and the CRT simulation in Stella that was announced a few weeks before my first shader. I should also highlight the very impressive crtsim from a few months later.

I’m impressed by a lot of the ongoing discussion and development, although unfortunately I am not completely up-to-date on the full spectrum of CRT shaders today. I’d like to go through the simulation pipeline and try to highlight some successes and areas that could use more attention.

- NTSC/PAL video decoding. Blargg's filter is still great, and there are also some shader implementations. But it would be better to simulate more accurately the decoders used in actual televisions, which often made use of comb filters. (I played with the latter a few years ago.) There's also a need for better integration with the emulator, so that an accurately encoded signal can be passed through to the decoding shader.
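To make the comb-filter idea concrete, here is a minimal sketch of the simplest (two-line) comb filter followed by a crude chroma demodulator. It relies on a real property of NTSC: the colour subcarrier completes 227.5 cycles per line, so its phase is inverted from one line to the next. Everything else is an assumption for illustration: the function names `comb_2line`, `demodulate_4fsc` and `encode_line` are hypothetical, the signal is assumed to be sampled at exactly four samples per subcarrier cycle, and the picture content is assumed identical on both lines. Where the picture changes from one line to the next, this separation breaks down, which is one reason real televisions used a variety of more elaborate comb designs.

```python
import math


def comb_2line(prev_line, cur_line):
    """Two-line comb filter.

    Assumes the chroma phase is inverted between the lines (as in NTSC) and
    that the picture content is identical, so prev = Y - C and cur = Y + C.
    """
    luma = [(p + c) / 2 for p, c in zip(prev_line, cur_line)]
    chroma = [(c - p) / 2 for p, c in zip(prev_line, cur_line)]
    return luma, chroma


def demodulate_4fsc(chroma, phase0=0.0):
    """Recover (U, V) for each group of 4 samples.

    Assumes exactly 4 samples per subcarrier cycle and chroma of the form
    U*sin(theta) + V*cos(theta), with theta advancing by pi/2 per sample.
    Averaging over one full cycle acts as a crude low-pass filter.
    """
    out = []
    for start in range(0, len(chroma) - 3, 4):
        u = v = 0.0
        for k in range(4):
            theta = phase0 + (start + k) * math.pi / 2
            u += chroma[start + k] * math.sin(theta)
            v += chroma[start + k] * math.cos(theta)
        # sin^2 (or cos^2) summed over one 4-sample cycle equals 2
        out.append((u / 2, v / 2))
    return out


def encode_line(y, u, v, n, phase0):
    """Hypothetical test-signal generator: one line of a flat colour."""
    return [y + u * math.sin(phase0 + k * math.pi / 2)
            + v * math.cos(phase0 + k * math.pi / 2) for k in range(n)]


# Two consecutive lines of the same colour, subcarrier phase inverted.
prev = encode_line(0.5, 0.1, -0.2, 16, math.pi)
cur = encode_line(0.5, 0.1, -0.2, 16, 0.0)
luma, chroma = comb_2line(prev, cur)
print(luma[:4], demodulate_4fsc(chroma))
```

In an emulator integration, `prev` and `cur` would be consecutive lines of the encoded signal passed through from the emulator rather than synthetic test lines.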

- Video amplifier. This is somewhat connected with the previous step, since the problem can be treated as one of signal processing. Since this filtering acts along each scanline before the gamma ramp, it can cause darkening of high-contrast areas. This is why gamma charts traditionally use horizontal lines: each scanline is then uniform, so there is nothing for the amplifier to smooth. I'm not aware of any serious attempt to simulate this accurately. One way of doing so would be to display some test images on a real CRT. These would have to contain high-contrast areas with varying horizontal frequency; a filter could then be designed to reproduce the measured result in the simulated CRT. There's a good discussion of this in the following article:

Hu, Q.J. & Klein, S.A. (1994). Correcting the adjacent pixel nonlinearity on video monitors. In B.E. Rogowitz & J.P. Allebach (Eds.), Human Vision, Visual Processing and Digital Display V, Proc. SPIE 2179, 37-46.

- Gamma ramp/tone curve. This is under reasonable control, although it would be nice to have some real measurements on historical displays. Also, people who write shaders should be very careful about unintended sources of nonlinearity such as scanline blooming or glow effects. It could be useful to display a gamma chart to test the full effect of the shader.
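The video-amplifier and gamma-chart points can be illustrated together. The sketch below models the amplifier as a one-pole low-pass filter along the scan direction, applied to drive values before the gamma ramp. This is an assumed stand-in, not the model from the Hu & Klein paper, and `GAMMA = 2.4` and `alpha = 0.5` are hypothetical values. It shows that alternating black/white pixels along a scanline come out darker than their nominal 50% average, while horizontal lines (uniform scanlines) are untouched, and it computes the uniform drive level a gamma chart would match.

```python
GAMMA = 2.4  # hypothetical display gamma, not a measured value


def amplifier(signal, alpha=0.5):
    """One-pole low-pass filter along a scanline (hypothetical bandwidth model).

    Each output moves a fraction alpha of the way toward the current input.
    Operates on drive values, i.e. before the gamma ramp.
    """
    out = []
    y = signal[0]
    for x in signal:
        y += alpha * (x - y)
        out.append(y)
    return out


def mean_luminance(drive, gamma=GAMMA):
    """Apply the gamma ramp, then average the resulting light output."""
    return sum(v ** gamma for v in drive) / len(drive)


def gamma_chart_match(gamma=GAMMA):
    """Drive level of a uniform patch that matches 50% black/white lines."""
    return 0.5 ** (1 / gamma)


# Alternating black/white pixels along one scanline (vertical stripes).
stripes = [0.0, 1.0] * 32
# Horizontal lines: each scanline is uniform; they alternate between lines.
white_line, black_line = [1.0] * 64, [0.0] * 64

print(mean_luminance(stripes))             # 0.5 without the amplifier
print(mean_luminance(amplifier(stripes)))  # darker: smoothing precedes gamma
print((mean_luminance(amplifier(white_line))
       + mean_luminance(amplifier(black_line))) / 2)  # still 0.5
print(gamma_chart_match())
```

Displaying both kinds of pattern next to a uniform patch at `gamma_chart_match()` is one way to check whether a shader's full pipeline (including blooming and glow) preserves the intended tone curve.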

- Geometry. In principle this can include curvature of the screen along with the usual set of adjustments: pincushion, trapezoid, rotation, etc. Misconvergence was a common and important defect that is simulated in e.g. MAME's HLSL and CRT-Royale. I think it's also important to make the raster behave more dynamically, and it's encouraging to see the "raster bloom" effect implemented in crt-guest-dr-venom.
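As an illustration of how such geometry adjustments compose, here is a minimal sketch of a coordinate warp: for each output position it returns the source-image position to sample, with black drawn outside the source. The parameters `curvature`, `trapezoid` and `rotation` are hypothetical knobs, and the radial polynomial is a cheap stand-in for screen curvature rather than a geometrically exact projection onto a curved faceplate.

```python
import math


def warp(u, v, curvature=0.1, trapezoid=0.0, rotation=0.0):
    """Map output coordinates (u, v) in [-1, 1] to source-image coordinates.

    All parameters are hypothetical, not taken from any particular shader.
    """
    # Rotation of the raster.
    c, s = math.cos(rotation), math.sin(rotation)
    u, v = c * u - s * v, s * u + c * v
    # Trapezoid: the raster width varies linearly from top to bottom.
    u = u * (1 + trapezoid * v)
    # Radial polynomial warp as a stand-in for faceplate curvature:
    # content near the edges is compressed, giving a bulging look.
    r2 = u * u + v * v
    scale = 1 + curvature * r2
    return u * scale, v * scale


def sample_visible(u, v):
    """True if the source coordinate lies inside the image (else draw black)."""
    return -1 <= u <= 1 and -1 <= v <= 1


print(warp(0.5, 0.0))                   # pushed slightly outward
print(sample_visible(*warp(1.0, 1.0)))  # corner falls outside -> black
```

A dynamic raster could be approximated by varying these parameters over time, for example scaling with average picture brightness.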

- Spot shape. Here a Gaussian or some variant is reasonable. It would be nice to have some real empirical input to make sure that any blooming effect that varies the spot shape is realistic.
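Here is a minimal sketch of a Gaussian scanline profile whose width grows with brightness. The linear blooming rule and the values of `sigma_min` and `sigma_max` are hypothetical placeholders for the empirical input mentioned above. The profile is normalised so that its integral across the scanline equals the input brightness: blooming then changes the shape of the spot but not the total light output, which avoids introducing an unintended tone-curve change.

```python
import math


def spot_profile(d, brightness, sigma_min=0.3, sigma_max=0.5):
    """Vertical beam profile at distance d (in scanline units) from the centre.

    brightness is in linear light, 0..1. The width grows linearly with
    brightness (a hypothetical blooming model). Normalised so the integral
    over d equals brightness.
    """
    sigma = sigma_min + (sigma_max - sigma_min) * brightness
    return (brightness * math.exp(-0.5 * (d / sigma) ** 2)
            / (sigma * math.sqrt(2 * math.pi)))


# Numerically integrate across the scanline: should recover the brightness.
h = 0.001
total = sum(spot_profile(-5 + i * h, 0.8) for i in range(10001)) * h
print(total)
```

If the width were changed without this normalisation, brighter pixels would emit disproportionately more light, which is exactly the kind of hidden nonlinearity a gamma chart would reveal.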

- Halation or veiling glare. I will start by quoting myself from 2011 (with a couple of minor edits):

Recently I've looked into the issue of veiling glare or halation (which seem to be the same thing, as far as I can tell). Here are a few references:

- The first one (alternative link) basically establishes that halation is important for subjective image quality.

- The second one (alternative link) is the best of the three. First, it describes the sources of veiling glare:

  - Internal reflection in the faceplate. This is the most important, and is a local effect.

  - Light leaking out the back of the phosphor layer, scattering inside the CRT, and making its way back out the front. This tends to have a uniform effect over the whole display.

  - Electrons scattering between the phosphor layer and the shadow mask. This is a short-range effect.

  - Electrons backscattering off the shadow mask, and eventually hitting the phosphor layer. This is a long-range effect, like light leakage.

- The third article (alternative link) shares some authors with the second, and contains somewhat more sophisticated measurements of veiling glare.

Some version of these effects is implemented in various shaders including my own. Note that the effects involving light yield coloured halation, whereas scattered electrons eventually hit a random phosphor and so yield monochromatic halation. Again, some careful comparison with real CRTs would be useful.

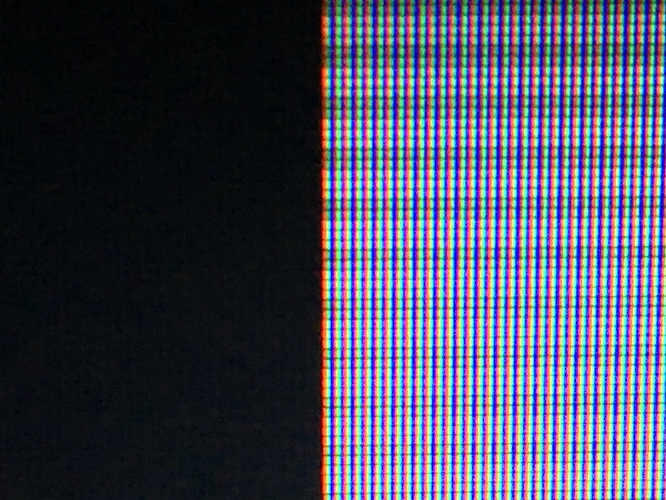
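The four sources of veiling glare listed above can be combined into one filter. Below is a minimal one-dimensional sketch along a single scanline. The weights (`light`, `electron`, `leak`, `backscatter`) and blur widths are hypothetical, chosen only for illustration, not measured. Light-based terms blur each channel separately and so keep their colour; electron-based terms spread a pixel's total energy equally over R, G and B, giving a grey halo. Every term redistributes light rather than adding it, so the total output is unchanged.

```python
import math


def gaussian_kernel(sigma, radius):
    k = [math.exp(-0.5 * (i / sigma) ** 2) for i in range(-radius, radius + 1)]
    total = sum(k)
    return [w / total for w in k]


def blur(values, kernel):
    """1-D convolution; samples past the ends of the row count as black."""
    r = len(kernel) // 2
    out = []
    for i in range(len(values)):
        acc = 0.0
        for j, w in enumerate(kernel):
            idx = i + j - r
            if 0 <= idx < len(values):
                acc += w * values[idx]
        out.append(acc)
    return out


def add_halation(row, light=0.05, electron=0.03, leak=0.01, backscatter=0.01,
                 light_sigma=2.0, electron_sigma=4.0):
    """row: list of (r, g, b) linear-light tuples along one scanline.

    All weights and widths are hypothetical, not measured values.
    """
    n = len(row)
    channels = [[px[c] for px in row] for c in range(3)]
    grey = [sum(px) / 3 for px in row]
    # Faceplate reflection (light, local): blur each channel, colour kept.
    lk = gaussian_kernel(light_sigma, int(3 * light_sigma))
    light_halo = [blur(ch, lk) for ch in channels]
    # Short-range electron scatter: lands on random phosphors, so grey.
    ek = gaussian_kernel(electron_sigma, int(3 * electron_sigma))
    electron_halo = blur(grey, ek)
    # Light leaking inside the tube (light, long-range): uniform, coloured.
    channel_means = [sum(ch) / n for ch in channels]
    # Electron backscatter off the mask (electrons, long-range): uniform, grey.
    grey_mean = sum(grey) / n
    keep = 1 - light - electron - leak - backscatter
    return [
        tuple(keep * row[i][c] + light * light_halo[c][i]
              + electron * electron_halo[i] + leak * channel_means[c]
              + backscatter * grey_mean
              for c in range(3))
        for i in range(n)
    ]


# A single red pixel on a black scanline.
row = [(0.0, 0.0, 0.0)] * 41
row[20] = (1.0, 0.0, 0.0)
out = add_halation(row)
print(out[22])  # reddish halo plus an equal grey contribution in G and B
```

A real shader would do this in two dimensions, and the weights would ideally be fitted to measurements of real CRTs.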

- Shadow mask / aperture grille phosphor pattern. With 4K displays, it should be possible to produce a reasonably realistic result. One problem I should point out with many currently used patterns: they fail to account for the RGB subpixel pattern of the LCD they will be shown on. For instance, a common and simple aperture grille pattern has single-pixel RGB stripes. But at native resolution on an LCD, this appears as R...G...BR...G...BR...G...B, i.e. the green stripes have three dark subpixels on each side but there is no dark subpixel between B and R. Two simple solutions: either add a black stripe between B and R, or double the number of bright subpixels with the pattern R..RG..GB..B, which is also four pixels wide (red, yellow, cyan, blue).
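The subpixel arithmetic above is easy to check mechanically. This sketch assumes an LCD with horizontal RGB-stripe subpixels in R, G, B order (a BGR panel would need the patterns mirrored); the names `PIXELS`, `subpixel_string`, `mask_for_column` and the three pattern lists are hypothetical. It prints which LCD subpixels are lit for the naive single-pixel RGB grille and for the two fixes, and shows how a shader could look up the mask multiplier for a given output column.

```python
# Each mask pixel is an (R, G, B) on/off triple, assumed to map directly
# onto the LCD's R, G, B subpixels in that order.
PIXELS = {
    "red": (1, 0, 0),
    "green": (0, 1, 0),
    "blue": (0, 0, 1),
    "black": (0, 0, 0),
    "yellow": (1, 1, 0),
    "cyan": (0, 1, 1),
}

NAIVE = ["red", "green", "blue"]                # 3 pixels wide
WITH_BLACK = ["red", "green", "blue", "black"]  # 4 pixels wide
DOUBLED = ["red", "yellow", "cyan", "blue"]     # 4 pixels wide


def subpixel_string(pattern, repeats=1):
    """Render lit subpixels as letters and dark ones as dots."""
    out = []
    for _ in range(repeats):
        for name in pattern:
            for letter, on in zip("RGB", PIXELS[name]):
                out.append(letter if on else ".")
    return "".join(out)


def mask_for_column(x, pattern):
    """Per-channel multiplier a shader would apply at output column x."""
    return PIXELS[pattern[x % len(pattern)]]


print(subpixel_string(NAIVE, 2))   # R...G...BR...G...B
print(subpixel_string(WITH_BLACK)) # R...G...B...
print(subpixel_string(DOUBLED))    # R..RG..GB..B
```

The first line reproduces the problem described above: there is no dark subpixel between B and R. Both fixes give every lit stripe dark subpixels on both sides.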

- Colour primaries and white point. With a colour meter these should be measurable and we can try to replicate them, or we can rely on manufacturers' specs. I think it's important for anyone who produces a colour correction to specify whether it came from an actual reference or was based on what "looks right"; the latter is really not trustworthy.
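Once primaries and a white point are known, the colour correction itself is a standard 3x3 matrix. The sketch below builds an RGB-to-XYZ matrix from chromaticity coordinates (each primary is scaled so that R = G = B = 1 reproduces the white point) and combines it with the inverse sRGB matrix. The sRGB primaries and D65 white point are from the sRGB standard; the CRT values must come from measurement or specs. No chromatic adaptation is applied, so a CRT with a different white point keeps that white point on the sRGB display. The function names are hypothetical.

```python
def xy_to_xyz(x, y):
    """Chromaticity (x, y) -> XYZ with luminance Y = 1."""
    return [x / y, 1.0, (1 - x - y) / y]


def inv3(m):
    """Inverse of a 3x3 matrix via the adjugate."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    adj = [
        [e * i - f * h, c * h - b * i, b * f - c * e],
        [f * g - d * i, a * i - c * g, c * d - a * f],
        [d * h - e * g, b * g - a * h, a * e - b * d],
    ]
    return [[v / det for v in row] for row in adj]


def matmul(a, b):
    return [[sum(a[r][k] * b[k][col] for k in range(3)) for col in range(3)]
            for r in range(3)]


def rgb_to_xyz_matrix(primaries, white):
    """Linear RGB -> XYZ from primary and white-point chromaticities."""
    cols = [xy_to_xyz(x, y) for x, y in primaries]
    p = [[cols[col][r] for col in range(3)] for r in range(3)]  # primaries as columns
    w = xy_to_xyz(*white)
    p_inv = inv3(p)
    # Scale each primary so that R = G = B = 1 reproduces the white point.
    s = [sum(p_inv[r][k] * w[k] for k in range(3)) for r in range(3)]
    return [[p[r][col] * s[col] for col in range(3)] for r in range(3)]


SRGB_PRIMARIES = [(0.64, 0.33), (0.30, 0.60), (0.15, 0.06)]
D65 = (0.3127, 0.3290)


def crt_to_srgb_matrix(crt_primaries, crt_white):
    """Linear CRT RGB -> linear sRGB, with no chromatic adaptation."""
    srgb_to_xyz = rgb_to_xyz_matrix(SRGB_PRIMARIES, D65)
    return matmul(inv3(srgb_to_xyz), rgb_to_xyz_matrix(crt_primaries, crt_white))


print(rgb_to_xyz_matrix(SRGB_PRIMARIES, D65)[0])  # approx. [0.4124, 0.3576, 0.1805]
```

The matrix must be applied to linear-light values, i.e. after the simulated CRT tone curve and before sRGB encoding; results outside 0..1 indicate colours the LCD cannot reproduce and need some form of gamut mapping or clipping.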

- Short time behaviour of phosphors. This is tough to simulate on sample-and-hold LCDs. Black frame insertion / backlight strobing should help; ideally the "on" time for the backlight should be as short as possible.
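The trade-off behind black frame insertion can be stated in a few lines. This sketch assumes the display refresh rate is an integer multiple of the content frame rate and that each content frame is shown for one refresh followed by black refreshes; `bfi_schedule` and `bfi_tradeoff` are hypothetical names. Shorter lit time means less sample-and-hold blur, but average brightness falls with the duty cycle.

```python
def bfi_schedule(content_hz, display_hz):
    """Which refreshes show the content frame (True) and which are black.

    Assumes display_hz is an integer multiple of content_hz.
    """
    if display_hz % content_hz != 0:
        raise ValueError("display rate must be an integer multiple of content rate")
    refreshes = display_hz // content_hz
    return [True] + [False] * (refreshes - 1)


def bfi_tradeoff(content_hz, display_hz):
    """Return (duty cycle, lit time per content frame in milliseconds)."""
    schedule = bfi_schedule(content_hz, display_hz)
    lit = schedule.count(True)
    return lit / len(schedule), 1000 / display_hz * lit


print(bfi_schedule(60, 240))  # [True, False, False, False]
print(bfi_tradeoff(60, 240))  # quarter of the light, ~4.2 ms lit per frame
```

Even the shortest lit time available this way is much longer than a phosphor's exponential decay, which is why a strobed backlight with a very short pulse can do better than whole black frames.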

- Phosphor decay/persistence. It can be tempting to dismiss this as negligible because the exponential decay times are much shorter than a frame. However, the sRGB curve is linear near black with a slope of 12.92, so for sRGB the intensity has to decay below (1/255)/12.92 ≈ 0.0003 to be negligible, which takes about eight exponential time constants. Furthermore, the decay can eventually follow a power law, which is much slower than exponential. The result is that a visible excitation might remain even after a second has passed. There are some good references here, including some decay curves, which are colour-dependent. I included a power law decay in crt-geom-deluxe; it's trickier to implement than exponential decay.
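The numbers above can be checked directly, and the same sketch shows one reason a power law is harder to implement. Exponential decay is memoryless, so a shader can keep one stored value per pixel and multiply it by a constant factor each frame. A power law has no such shortcut: the residual glow depends on the whole excitation history. The form `(1 + t/t0)^(-n)` and all time constants used below are hypothetical, not fitted to real phosphors, and the function names are illustrative.

```python
import math

# One 8-bit sRGB code step near black, in linear light: (1/255)/12.92
SRGB_THRESHOLD = (1 / 255) / 12.92


def exp_decay(t, tau):
    return math.exp(-t / tau)


def power_decay(t, t0, n):
    """Hypothetical power-law tail: (1 + t/t0)^(-n)."""
    return (1 + t / t0) ** (-n)


def time_to_threshold_exp(tau, threshold=SRGB_THRESHOLD):
    return tau * math.log(1 / threshold)


def time_to_threshold_power(t0, n, threshold=SRGB_THRESHOLD):
    # Solve (1 + t/t0)^(-n) = threshold for t.
    return t0 * (threshold ** (-1 / n) - 1)


def exp_persistence_step(state, excitation, frame_time, tau):
    """Exponential decay is memoryless: one stored value per pixel suffices."""
    return state * exp_decay(frame_time, tau) + excitation


def power_persistence(excitations, frame_time, t0, n):
    """A power law is not memoryless: sum over the whole excitation history.

    excitations[-1] is the current frame (age 0).
    """
    return sum(e * power_decay(age * frame_time, t0, n)
               for age, e in enumerate(reversed(excitations)))


print(time_to_threshold_exp(0.001))         # ~8 ms for a 1 ms time constant
print(time_to_threshold_power(0.001, 1.0))  # several seconds for this tail
```

In practice, a power-law tail is sometimes approximated by a sum of a few exponentials, each of which can be updated recursively like `exp_persistence_step`; whether that is accurate enough depends on the measured decay curves.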

- Miscellaneous. Having the image reflect off the frame of the CRT could add to the immersion. Interlacing should be better supported, starting with the emulator.


I’ll get those pushed up to the quark repo and get them converted to RetroArch’s formats, as well.
