EDIT (8/4/2019): I’ve changed my mind about a lot of what’s said in the post below, but I’m keeping the original intact for the sake of the discussion. In particular, I now find that a certain amount of blur, when used with the right mask effect and scanlines, more closely approximates a CRT than the nearest-neighbor scaling used in the images below. Additionally, the mask effect has to take into account the LCD subpixel structure, which the mask pattern used in the images below fails to do. My current settings and example screenshots can be seen here.

[Copied from other thread for context]

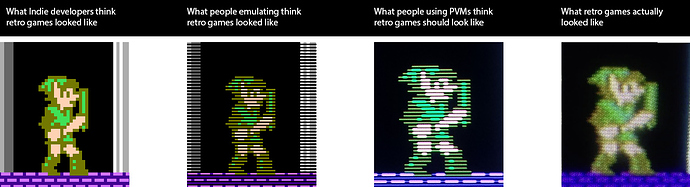

I have a very different perspective when it comes to CRT emulation. I think what this thread really demonstrates is just how exaggerated most CRT shaders are. Most of these images have far too much bloom, bleed, blur, etc., and I don’t understand why people want that in their games. A CRT image is “soft” for reasons that have very little to do with blur. It’s the scanlines, lower TVL, phosphor structure and glow that give a CRT image its characteristic “softness,” which people mistakenly conflate with blur.

It seems that most shaders are created either by looking at photos of CRTs or by looking at a CRT in person and trying to “eyeball” it. While the latter approach is superior to the former, it’s still inferior to an approach that starts with a sound conceptual understanding of what a CRT screen is actually doing to the image, objectively, along with an understanding of what it is about CRT screens that enhances the objective image quality of 240p content.

Yes, I understand that maybe your crappy low quality TV from the early 90s may have, in some ways, resembled these shaders. The question is, why are you trying to replicate a crappy TV from the 90s instead of a high quality CRT from that era? Back in the 90s, if someone had offered to replace my low quality consumer TV with a high quality RGB CRT, it would have been crazy to refuse. People bought RGB CRTs when they had the money and if they were available, because the image quality is objectively superior. Why people want to add blur, pincushion distortion, signal distortion, bleed, etc is beyond me, but nostalgia isn’t really a factor for me, nor do I care about some poorly defined and dubious notion of “authenticity.”

For those who don’t care for the hyper-exaggerated, over-bloomed, and distorted look you get with most shaders, you might be interested in a more minimalist approach that is focused on getting an LCD screen to actually function in a similar way as a high quality CRT screen that is free of the undesirable distortions that people sought to eliminate on their CRTs, and which are found in many shaders.

The following images should be viewed with your display backlight adjusted to 100%, or else they will probably look like crap.

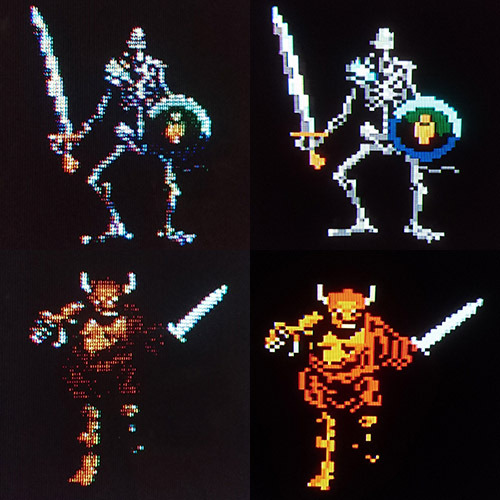

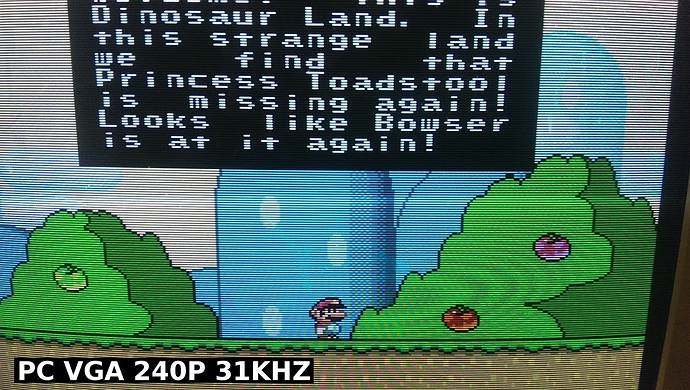

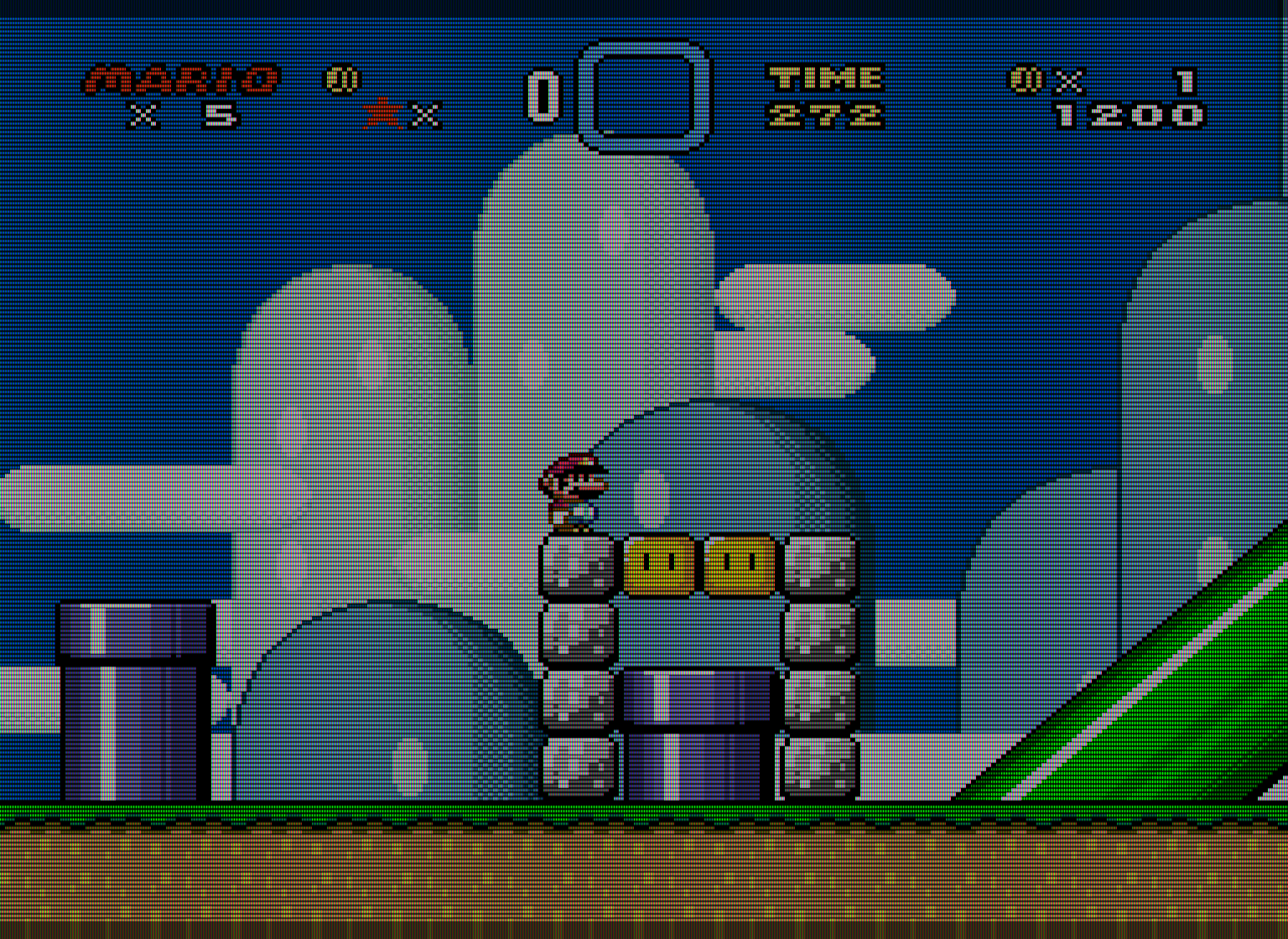

Example shot

What’s going on in this image? The above is a perfect rendition of a 360 TVL RGB CRT. The only things being added here are scanlines, the RGB phosphors, and some slight vertical blending between the scanlines. The scanlines are a bit less than 1:1 with the vertical blending added.
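
To make “scanlines plus RGB phosphors” concrete, here is a minimal sketch of that idea on a tiny image: nearest-neighbor integer upscaling, a black gap under each source line, and a vertical RGB stripe mask. This is my own simplified illustration, not the zfast + dotmask shader’s code; the function and parameter names are hypothetical, and the hard-edged scanlines are a simplification of whatever profile the real shader uses.

```python
def crt_sketch(src, scale_x=3, scale_y=2, mask_strength=0.3, dark_rows=1):
    """Hypothetical minimal CRT-style pass (not the zfast + dotmask code).

    src is a list of rows; each row is a list of (r, g, b) floats in 0..1.
    Returns the upscaled image in the same format.
    """
    out = []
    for row in src:
        for dy in range(scale_y):
            # The last `dark_rows` output rows of each source line form the
            # black gap between scanlines.
            is_gap = dy >= scale_y - dark_rows
            out_row = []
            for sx, (r, g, b) in enumerate(row):
                # Nearest-neighbor: repeat each source pixel scale_x times.
                for dx in range(scale_x):
                    x = sx * scale_x + dx
                    if is_gap:
                        out_row.append((0.0, 0.0, 0.0))
                        continue
                    # Vertical RGB stripe mask: output column x keeps channel
                    # x % 3 at full strength and dims the other two.
                    weights = [1.0 - mask_strength] * 3
                    weights[x % 3] = 1.0
                    out_row.append((r * weights[0], g * weights[1], b * weights[2]))
            out.append(out_row)
    return out


# One white source pixel, scaled 3x2 with 1:1 scanlines (one lit row, one dark row).
for line in crt_sketch([[(1.0, 1.0, 1.0)]]):
    print(line)
```

The white pixel turns into a red-, green- and blue-dominant column on the lit row and a black row beneath it; viewed from a normal distance, those elements are what the eye blends back together.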

I’ve adjusted my display backlight to 100% and I’ve made some adjustments to the mask strength to find the ideal compromise between brightness and mask strength. With this configuration, my screen has contrast and peak brightness levels comparable to those of a high quality CRT, and the mask is as accurate/strong as possible while maintaining brightness. At normal viewing distances, the emulated phosphors act very much like those on a real CRT, exhibiting similar glow and halation effects and blending together in a similar way. Viewed in person, this looks better than any shader I’ve tried, and I’ve tried all of them. Get up close and it’s as ugly and incoherent as an actual CRT looked up close. Gradually move away from the screen and all the different elements blend together as a result of the way the human eye works, just like with a real CRT!

With my display backlight at 100%, I’m getting a peak brightness of around 200 cd/m2, which is slightly brighter than what was common for CRTs. With HDR capable displays, it should be possible to max out the mask strength while maintaining peak brightness levels that match or even exceed that of a CRT. In other words, with an HDR-capable display, it will be possible to get the emulated “phosphors” to glow as brightly as the real thing on a CRT. At that point it will be really pointless to add glow or halation effects via shaders.
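
To put rough numbers on the brightness trade-off, here is a back-of-the-envelope model. It is my own simplification and not how zfast + dotmask actually computes its output (parameters like maskLight and maskDark, for instance, can change how bright the lit subpixels are), so treat the results as ballpark figures only.

```python
def white_luminance(native_peak, mask_strength, scanline_duty=0.5):
    """Full-white luminance (cd/m2) under a simplified, hypothetical model.

    Assumptions: a vertical RGB stripe mask leaves one channel per output
    column at full strength and dims the other two by mask_strength, and the
    gaps between scanlines are fully black, so only scanline_duty of the
    rows emit light (0.5 for 1:1 scanlines).
    """
    # Averaged over three columns, each channel sits at (1 + 2*(1 - s)) / 3.
    # The per-channel luminance weights sum to 1, so white scales the same way.
    mask_factor = (1.0 + 2.0 * (1.0 - mask_strength)) / 3.0
    return native_peak * mask_factor * scanline_duty


def required_native_peak(target, mask_strength, scanline_duty=0.5):
    """Panel peak brightness needed to reach `target` cd/m2 under the same model."""
    return target / white_luminance(1.0, mask_strength, scanline_duty)


# A fully opaque mask (strength 1.0) with 1:1 scanlines, aiming for 200 cd/m2.
print(round(required_native_peak(200, 1.0)))
```

Under this model, a 100% mask with 1:1 black gaps leaves only a sixth of the panel’s light, so hitting about 200 cd/m2 needs a panel of roughly 1200 cd/m2, which is HDR territory rather than a typical SDR monitor.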

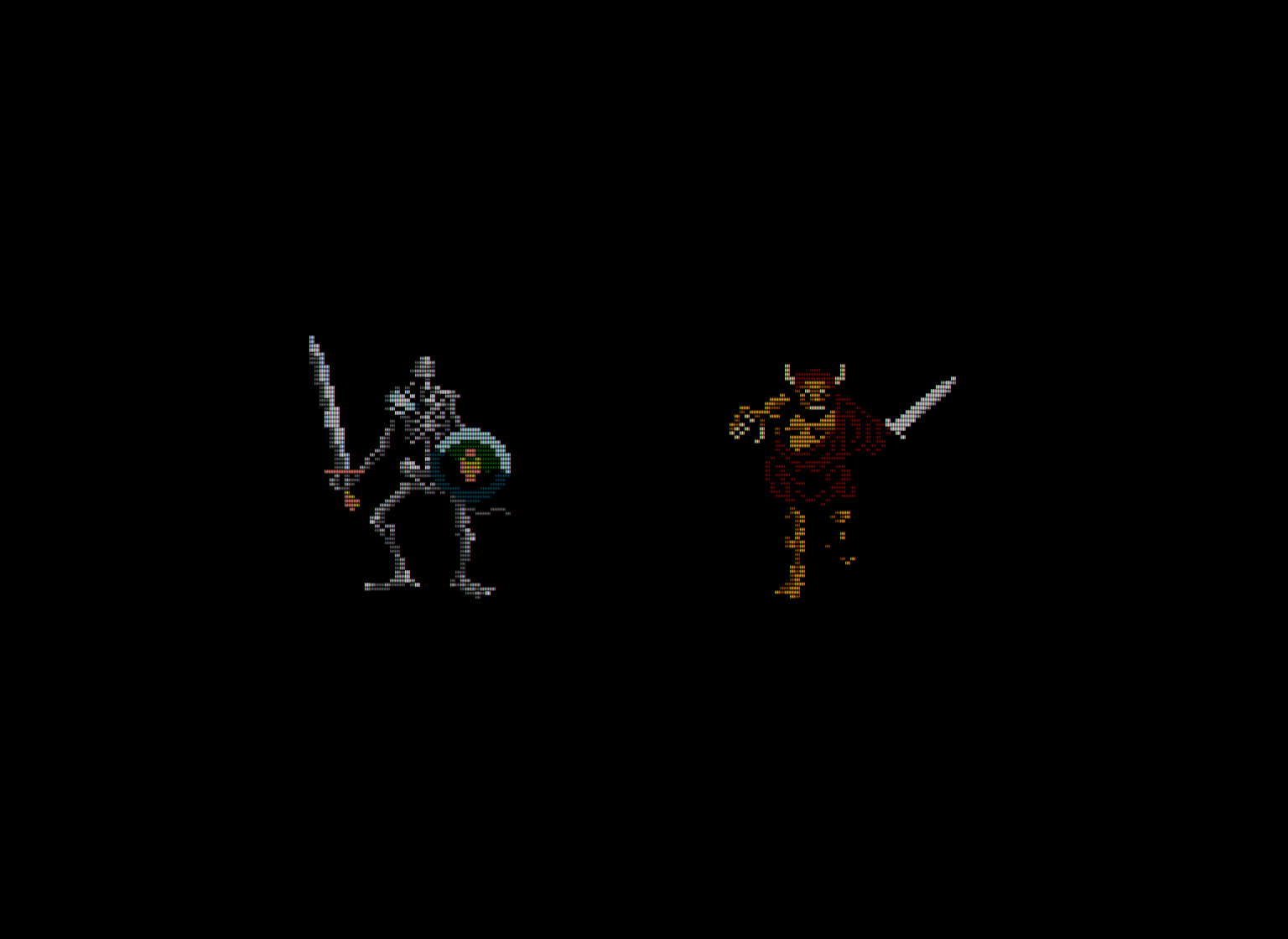

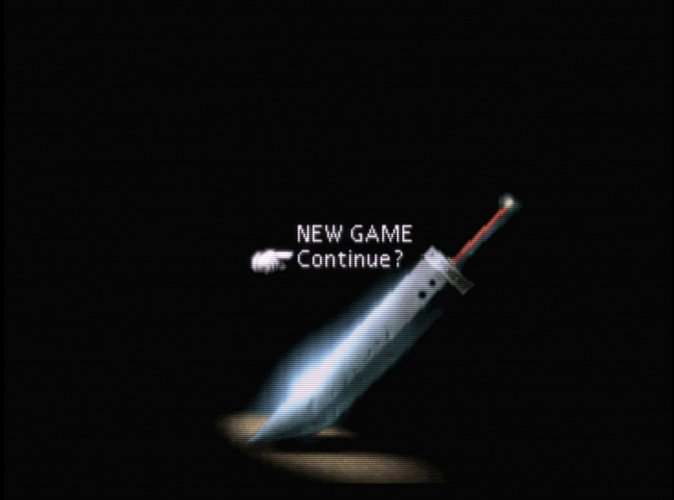

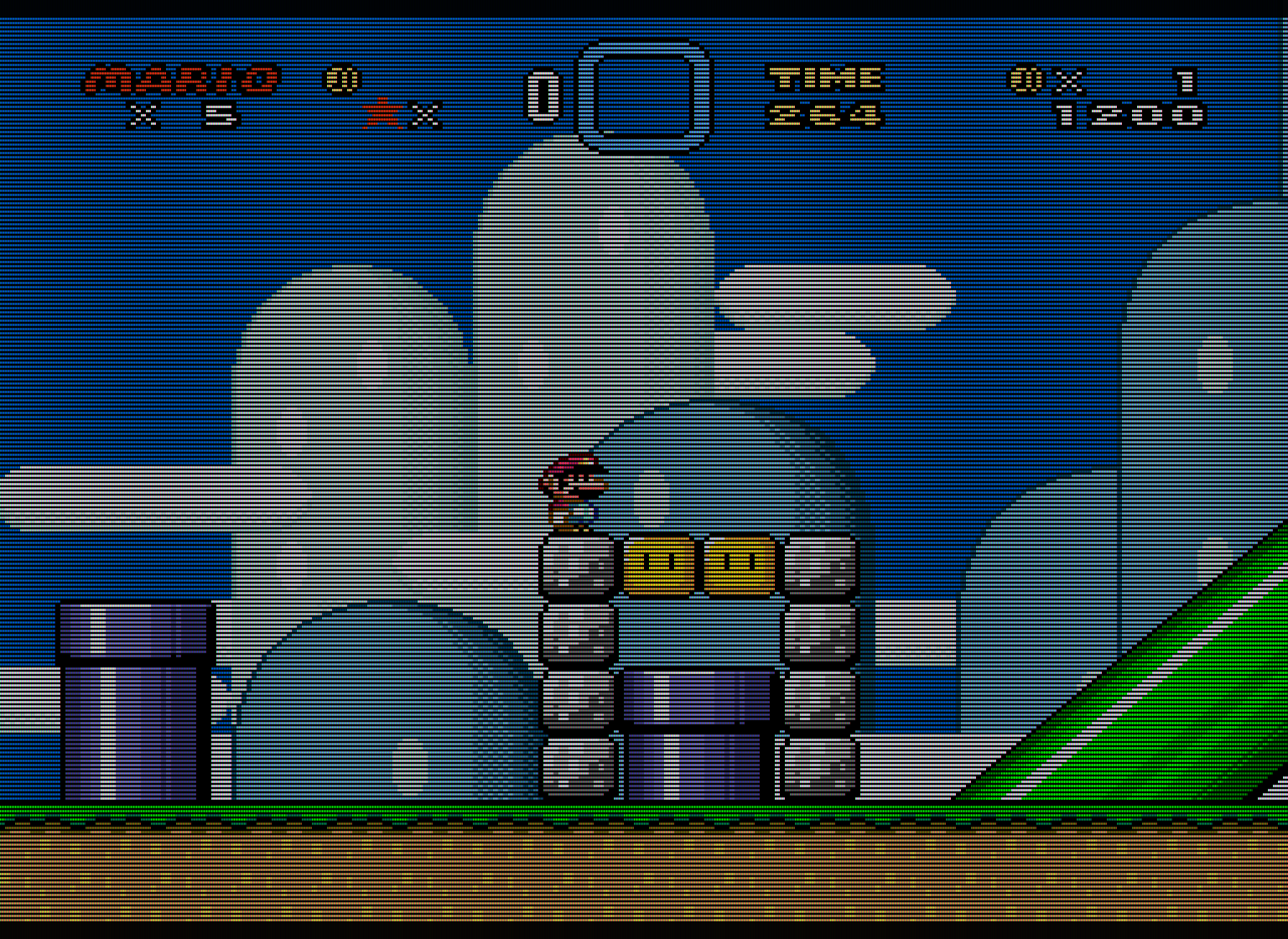

Here’s another emulated image without any vertical blending added: just 1:1 scanlines and the vertical RGB phosphors.

Here’s a close-up image of an actual high-quality CRT (a Sony FW900, I believe), to show the range of what’s possible regarding image quality on a CRT. You’ll notice that this image is far sharper and less “bloomy” than what the vast majority of shaders do to the image. Actually, the CRT image is much sharper and exhibits less bloom than the first emulated image that I posted above, and looks more similar to the second emulated image with “perfect” scanlines. The scanlines in the CRT image are even more pronounced, being as close to 1:1 as possible.

The shader being used in the emulated images is the “zfast + dotmask” shader that HunterK put together, although I’m unsure if it has been added to the shader repository. I’ve stacked this with the “image adjustment” shader for gamma correction. Here are the changes I’ve made to the parameters:

BLURSCALEX = "0.000000"

BRIGHTBOOST = "1.000000"

DOTMASK_STRENGTH = "0.300000"

HILUMSCAN = "8.000000"

ia_monitor_gamma = "2.200000"

ia_target_gamma = "2.400000"

LOWLUMSCAN = "9.000000"

MASK_DARK = "0.000000"

MASK_FADE = "0.600000"

maskDark = "0.200000"

maskLight = "2.000000"

shadowMask = "2.000000"

Bilinear filtering is turned OFF in the video options and the shader filter is set to “nearest.” Integer scale is turned ON in the video options, with a custom aspect ratio enabled (6x5 integer scale).
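
The ia_monitor_gamma / ia_target_gamma pair is there to make a roughly 2.2-gamma LCD reproduce a darker, CRT-like 2.4 response. Assuming the adjustment is a simple power-law re-encoding (I haven’t checked the image adjustment shader’s source to confirm its exact formula), the math looks like this:

```python
def gamma_adjust(value, monitor_gamma=2.2, target_gamma=2.4):
    """Re-encode a 0..1 signal value for a display with `monitor_gamma`.

    Assumed model: the display shows x ** monitor_gamma, so sending
    value ** (target_gamma / monitor_gamma) yields value ** target_gamma on
    screen, i.e. the response of a display with `target_gamma`.
    """
    return value ** (target_gamma / monitor_gamma)


for v in (0.25, 0.5, 0.75):
    print(v, round(gamma_adjust(v), 3))
```

With these values a mid-grey of 0.5 comes out at about 0.47, so midtones and shadows get slightly darker while pure black and pure white stay where they are.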

NOTE: These settings are partially display-dependent. The goal is to maximize scanline and mask strength while maintaining CRT-like peak brightness, getting the scanlines as close to 1:1 as possible and the mask strength as close to 100% as possible without compromising on brightness. As such, settings will differ depending on the display being used. For reference, I’m using an ASUS VG248QE, which has an advertised peak brightness of 350 cd/m2. If you want to add some vertical blending, adjusting the MASK_FADE parameter will accomplish this. This will also brighten up the screen somewhat and allow the mask strength to be increased, at the expense of making the scanlines less prominent than the “perfect” 1:1 scanlines seen in the second emulated image and the CRT image.

Also, bear in mind that using this approach limits you to using integer scale, and the horizontal integer scale must be a multiple of 3 in order to avoid ugly artifacts that resemble color bleed. This isn’t that big of a deal when you consider that 6x5 is already very close to a 4:3 ratio, and that the geometry of CRTs varied widely depending on how they were calibrated and was never perfect.
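
The multiple-of-3 rule falls out of the mask period. Assuming a mask that repeats every three screen columns (R, G, B), as with the vertical RGB phosphors described above, this sketch checks which horizontal scales give every source pixel the same run of subpixels, and computes the resulting aspect ratio. The 256x224 source size in the example is my assumption (a common output size for consoles of that era), not something stated in the settings above; the function names are hypothetical.

```python
from fractions import Fraction

MASK_PERIOD = 3  # a vertical RGB stripe mask repeats every 3 output columns


def mask_phases(scale_x, source_width=4):
    """Mask phases (0 = R, 1 = G, 2 = B) covered by each source column."""
    return [
        tuple((sx * scale_x + dx) % MASK_PERIOD for dx in range(scale_x))
        for sx in range(source_width)
    ]


def mask_is_uniform(scale_x):
    """True if every source pixel lands on the same run of subpixels."""
    return len(set(mask_phases(scale_x))) == 1


def aspect_ratio(width, height, scale_x, scale_y):
    """Displayed aspect ratio of a width x height source at the given scales."""
    return Fraction(width * scale_x, height * scale_y)


for sx in range(3, 8):
    print(sx, mask_is_uniform(sx), mask_phases(sx)[:2])

ratio = aspect_ratio(256, 224, 6, 5)
print(ratio, float(ratio), float(Fraction(4, 3)))
```

At a horizontal scale of 4, for instance, the first source pixel covers R, G, B, R and the next covers G, B, R, G, so neighboring pixels of the same color pick up different tints, which is the bleed-like artifact. For a 256x224 source, 6x5 gives 1536x1120, a 48:35 ratio (about 1.371) versus 1.333 for 4:3, i.e. roughly 3% wider.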

EDIT: I guess I should also mention that it’s entirely possible to add blur with this shader- just set the shader filter to “linear.” Adjust BLURSCALEX if more blur is desired.

[/end copied post]

I do think that for console games made in the 80s, like the NES, composite or a composite-like look is most suitable, since sprites were designed with the blended picture quality of composite in mind.

I have never seen any actual evidence to support the assertion that this was common practice. What we know is that the graphics were created on RGB monitors after being hand-drawn on graph paper. Furthermore, I think one can make a sound argument that the image quality for NES era games is objectively superior through RGB, even given the loss of some dithering effects. The loss of one or two dithering effects (I’m looking at you, Sonic waterfall) is more than outweighed by the vastly superior color, sharpness and overall clarity of the image through RGB.

But it was fun, because at home we only had an Atari 2600 hooked up to the TV, which was much more gruesome ;D

Everything changed when we got Amiga computers at home with the 1080 monitor; the picture was finally so clear. We always searched for better picture quality <- see! hehe

Today we can run games from the mid-'90s, or new games, with the look of the '80s. Of course this makes no sense; this never happened.

But if you lived and played for real at that time (in the '80s), it makes sense, because it reminds you of those cool times and brings back the feelings.

I don't know why the new generation should play it this way, because there is no real connection and everything looks bad, but it's up to everyone's taste.