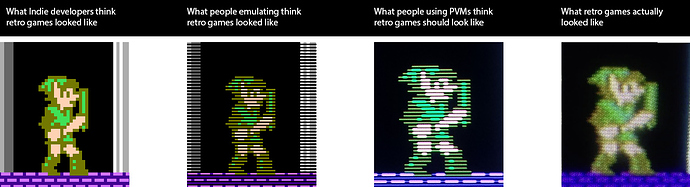

I think he does want to come across like that, actually, and more out of pedantry than anything else, I would add. Video experts and CRT savants do not all agree on the sterile look (a good word to express my point), I can guarantee you that. This is not hard science we are dealing with.

Seriously? Lighten up. I made my intentions clear with my reply to shenglong above. I am not being pedantic in my criticisms. I'm criticizing the objective picture quality of your images, and you're getting all huffy and personally offended by it. Video experts would most certainly agree when contrast, black level and color accuracy are all off, because these are objective things which are measurable. Still no images from Fudoh's 240p test suite, I see. It's like it's impossible to get a single word of reconciliation from you.

On the left, a BVM. On the right, a Nanao 15 kHz arcade monitor. Completely different, both high quality (not so much according to Nesguy, but believe me, Nanaos are good too). Needless to say, I much prefer the arcade monitor and its translucent scanlines that merge with the art in a lovely, natural way, rather than devouring half of it.

I don’t like either of those images very much, the BVM is a little harsh even for me. Both of the monitors are good, yes, but in terms of objective quality, the BVM is pretty much the gold standard.

Someone is pushing his personal preference as an ideal standard of godly quality that everyone should switch to right away. If at least said preference resulted in extremely good-looking games, or maybe an accurate replica of something like the BVM Metal Slug image posted above, I would understand the attitude. However, based on the screens posted, that doesn't seem to be the case.

A blatant mischaracterization which shows that you’re only interested in stirring shit.

From the preceding post: "I think when it comes to how 240p graphics are displayed, the most we can say is that raw pixels on an LCD are wrong. There are many approaches that are acceptable. I think Blargg's NTSC composite filter looks very good/accurate, if one is into that sort of thing."

Shenglong's kid preferring my settings is no surprise at all. It's as close as it gets to the untainted view of someone who isn't already invested in an opinion about what is superior.

The reason my settings look good, objectively, is that I've increased the contrast around highlights and made the lowlights even darker, both of which increase the dynamic range of the image. That's what the scanlines and mask structure do to the image, and it's one of the primary reasons why things look good on a CRT, IMO.
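To make the dynamic-range argument concrete, here is a rough 1-D sketch of what a scanline pass does to a flat white area. It assumes, purely for illustration, that each 240p line covers 4 output rows and that the beam profile weights those rows as `[0.25, 1.0, 1.0, 0.25]`; real shaders use different (and usually non-integer) scales and profiles.

```python
# Rough 1-D model of a scanline pass over a flat area.
# HYPOTHETICAL values: 4 output rows per 240p line, weighted by this profile
# (bright at the beam centre, dim in the gap between lines).
SCANLINE_PROFILE = [0.25, 1.0, 1.0, 0.25]


def scanline_rows(level):
    """Per-row output for a source pixel at `level` (0.0 to 1.0)."""
    return [level * w for w in SCANLINE_PROFILE]


def row_stats(rows):
    """Return (peak, darkest, mean) of a list of row intensities."""
    return max(rows), min(rows), sum(rows) / len(rows)


white = scanline_rows(1.0)
peak, darkest, mean = row_stats(white)

# A once-flat white area now swings between beam centre and gap,
# so the gaps act as darker lowlights next to the highlights.
local_contrast = peak / darkest

# The average has dropped, so keeping overall brightness the same means
# scaling everything up; the beam centres then need more than the
# original white, i.e. extra headroom from the display.
gain = 1.0 / mean
boosted_peak = peak * gain

print(f"mean={mean}, local contrast={local_contrast}:1, "
      f"gain needed={gain:.2f}, boosted peak={boosted_peak:.2f}")
```

In this toy model the gap rows become the darker lowlights, and because the average drops, restoring the same overall brightness means driving the beam-centre rows harder than the original white, which is where a brighter backlight comes in.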

Evidence… well, arcade games came with those screens! A pretty strong message from their makers, I reckon. They were the standard.

No, they didn't, and no, it isn't. As per the article that Hunter posted, the graphics were made on RGB monitors, the sharpest screens they had available. Sometimes the output was then tested over composite on a TV, but it's unclear just how widespread a practice this was. Even more unclear is how much the various characteristics of the CRT informed the pixel artists' work. The only evidence we have is that they used something called the 0.5 dot technique, which relied on scanlines and the generally lower sharpness of TVs.

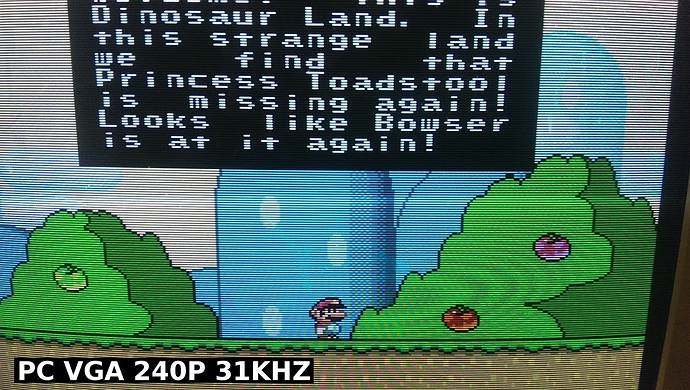

The image you brought from Hunter's blog is good, yeah… and much brighter than your preset, man. Does your setup look like that 'in person'? If so, I think you should start taking photos of your display with a camera instead of internal captures, so we can see how it really looks with the strong backlight, instead of having to infer and imagine from abstract values like '200 cd/m2'.

It seems obvious to me that you are trolling at this point. I've said probably 10 times now that my screenshots must be viewed with the backlight turned up, and that this is going to vary depending on the display being used. I can absolutely guarantee that the image using my settings is actually brighter and has more contrast than the shot of the CRT from Hunter's blog, although again, you cannot accurately judge these things from photos. As a photographer, one would think that you would understand how every camera does its own thing to the image. So yeah, I could post some photos taken with a camera phone, but they're going to have their own inaccuracies, which you will then use to make baseless straw man criticisms. For reference, typical CRTs had a peak brightness of around 175 cd/m2, which is why I targeted 200 cd/m2. It's actually almost too bright for extended viewing, so I can't help but continue to laugh at this baseless criticism.
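The reason the backlight has to go up is that the scanline and mask passes throw light away before it reaches the viewer. A back-of-the-envelope sketch of that budget follows. The 200 cd/m2 target is from the post above; the two attenuation factors are hypothetical placeholders, not measurements of any particular shader or display.

```python
# Back-of-the-envelope backlight budget for a CRT-style shader on an LCD.
# TARGET_CD_M2 comes from the post; the attenuation factors used below are
# HYPOTHETICAL placeholders, not measured values.
TARGET_CD_M2 = 200.0


def required_backlight(target, scanline_avg, mask_avg):
    """Full-white luminance the panel needs so that, after the scanline and
    mask passes darken the image, average white still reaches `target`."""
    # Fraction of the panel's light that survives both passes.
    transmission = scanline_avg * mask_avg
    if not 0.0 < transmission <= 1.0:
        raise ValueError("attenuation factors must be in (0, 1]")
    return target / transmission


# e.g. scanlines that keep 62.5% of the light and a mask that keeps 50%
needed = required_backlight(TARGET_CD_M2, scanline_avg=0.625, mask_avg=0.5)
print(f"panel must reach about {needed:.0f} cd/m2 at full white")
```

With those example factors the panel would need roughly three times the target at full white, which is why stronger masks quickly run into the brightness ceiling of a typical LCD.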

That N comparison is crap for all the reasons I already discussed. Two different cameras took the pictures under different lighting conditions, and I'm not even sure how that CRT is calibrated. The CRT shot is obviously overbloomed, so the fact that your N resembles it more closely in that respect is exactly the problem I'm talking about. The scanlines shouldn't disappear over white like that, and you can find that information in pro manuals for calibrating CRTs.

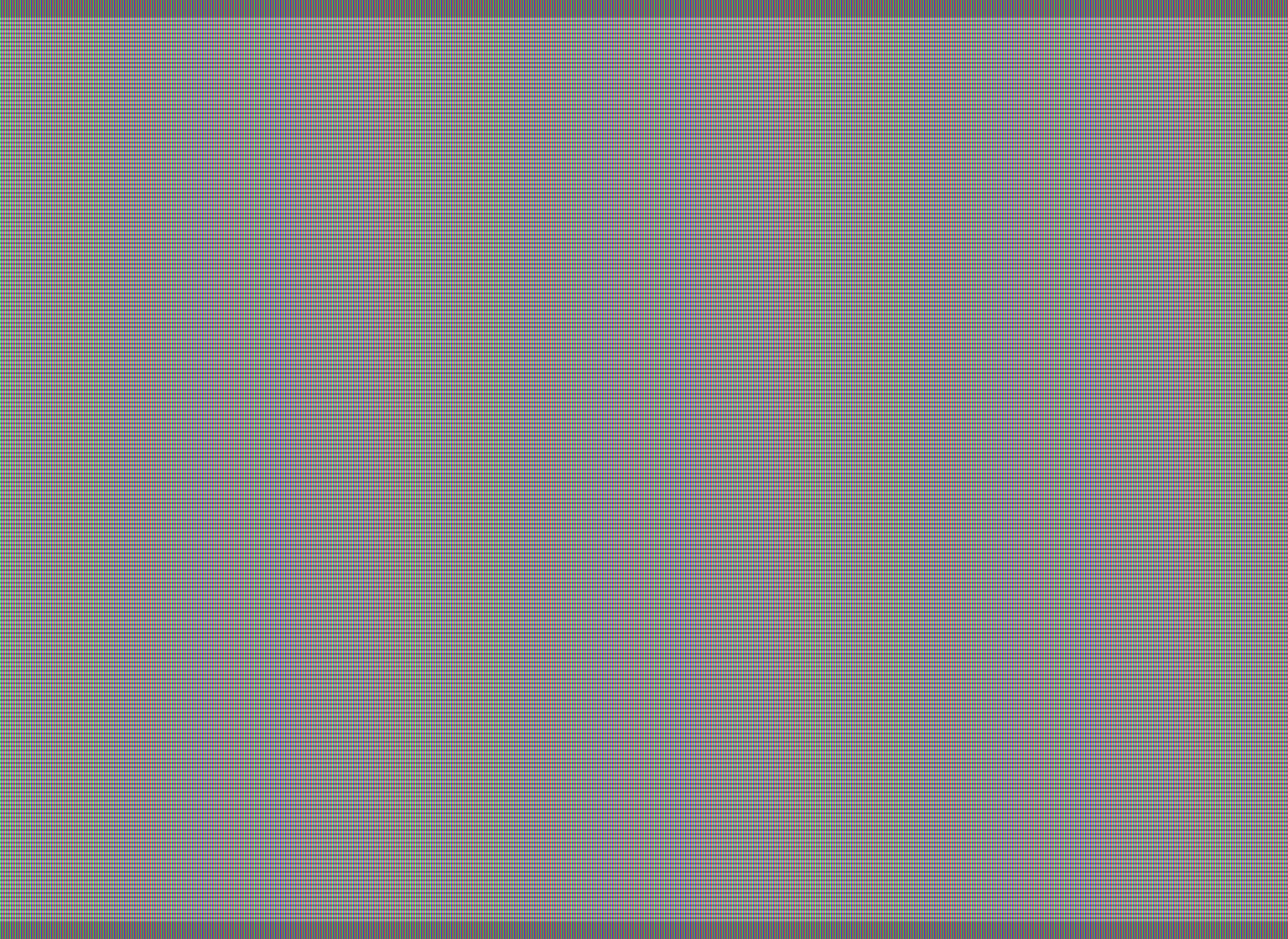

Furthermore, an extreme close-up like that is highly misleading. I cannot stress enough the importance of proper viewing distance. CRT tech is an additive visual system, meaning it emits light, and the light combines after leaving the surface of the screen to form the colors that you see. Get close enough to the screen and you can't even tell what's going on. At the proper viewing distance that N looks nowhere near as sharp as when it's zoomed in like that, because I've gotten the emulated phosphors to glow as brightly as the real thing, which causes the emitted light to blend together and form a smoother image when viewed at the correct distance (this is in addition to the natural "pointillist" effect resulting from the visual cognitive system). Of course, this is all completely lost in photos. Needless to say, getting a 1080p LCD to resemble a CRT at every possible viewing distance is an impossible task.
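The additive-blending point can be illustrated with a deliberately crude model: an idealized row of repeating R, G, B phosphor stripes, with viewing distance stood in for by a box average over neighbouring stripes. The box filter is an assumption for illustration only; real visual blending is not this simple.

```python
# Crude model of additive blending at a distance.
# ASSUMPTIONS: an idealized repeating R, G, B stripe pattern, and viewing
# distance approximated by a box average over `window` neighbouring stripes.


def lit_stripes(color, width):
    """Light emitted by each stripe for a flat area of `color` (an (r, g, b)
    tuple in 0..1) that is `width` stripes wide."""
    out = []
    for i in range(width):
        channel = i % 3                 # 0 = R, 1 = G, 2 = B phosphor
        rgb = [0.0, 0.0, 0.0]
        rgb[channel] = color[channel]   # each stripe emits only its own primary
        out.append(tuple(rgb))
    return out


def perceived(stripes, window):
    """Average the emitted light over `window` neighbouring stripes."""
    result = []
    for start in range(len(stripes) - window + 1):
        chunk = stripes[start:start + window]
        result.append(tuple(sum(px[c] for px in chunk) / window for c in range(3)))
    return result


white = lit_stripes((1.0, 1.0, 1.0), width=9)
close_up = perceived(white, window=1)  # individual coloured stripes
far_away = perceived(white, window=3)  # each sample mixes one R, G and B stripe
print(close_up[:3])
print(far_away[:3])
```

Up close every sample is a single saturated primary; once the averaging window spans a full triad, every sample is the same neutral grey, which is the light combining after it leaves the screen.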

I will grant that the transitions from white to black are somewhat more abrupt than what you observe on a CRT up close, but that’s the kind of detail that you need 4K or higher resolution to capture accurately, and which is greatly exaggerated by adding blurs.

So yes, your example does look closer to the CRT shot with respect to the bloom that it shows, which is precisely the problem, because that shot is overbloomed, whether from the camera or the CRT itself. When it comes to the actual mask/phosphor structure, I think it's obvious that my settings are doing a much better job of capturing what's going on. On an actual CRT the mask/phosphor structure should be even stronger than what's going on in my images, but I'm already pushing the limit of what my display is capable of regarding brightness.

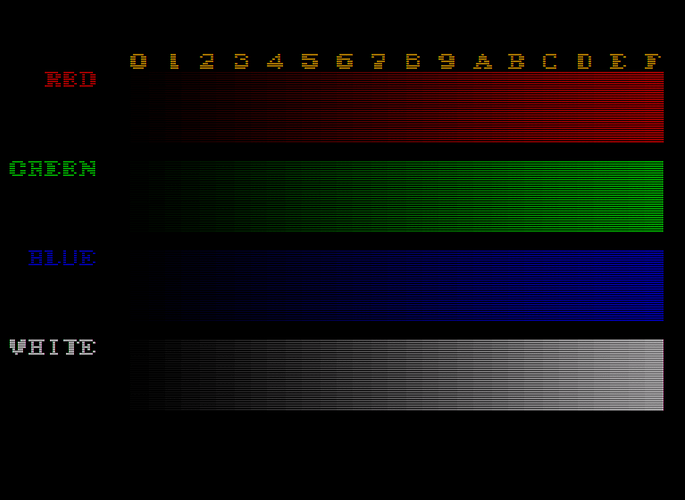

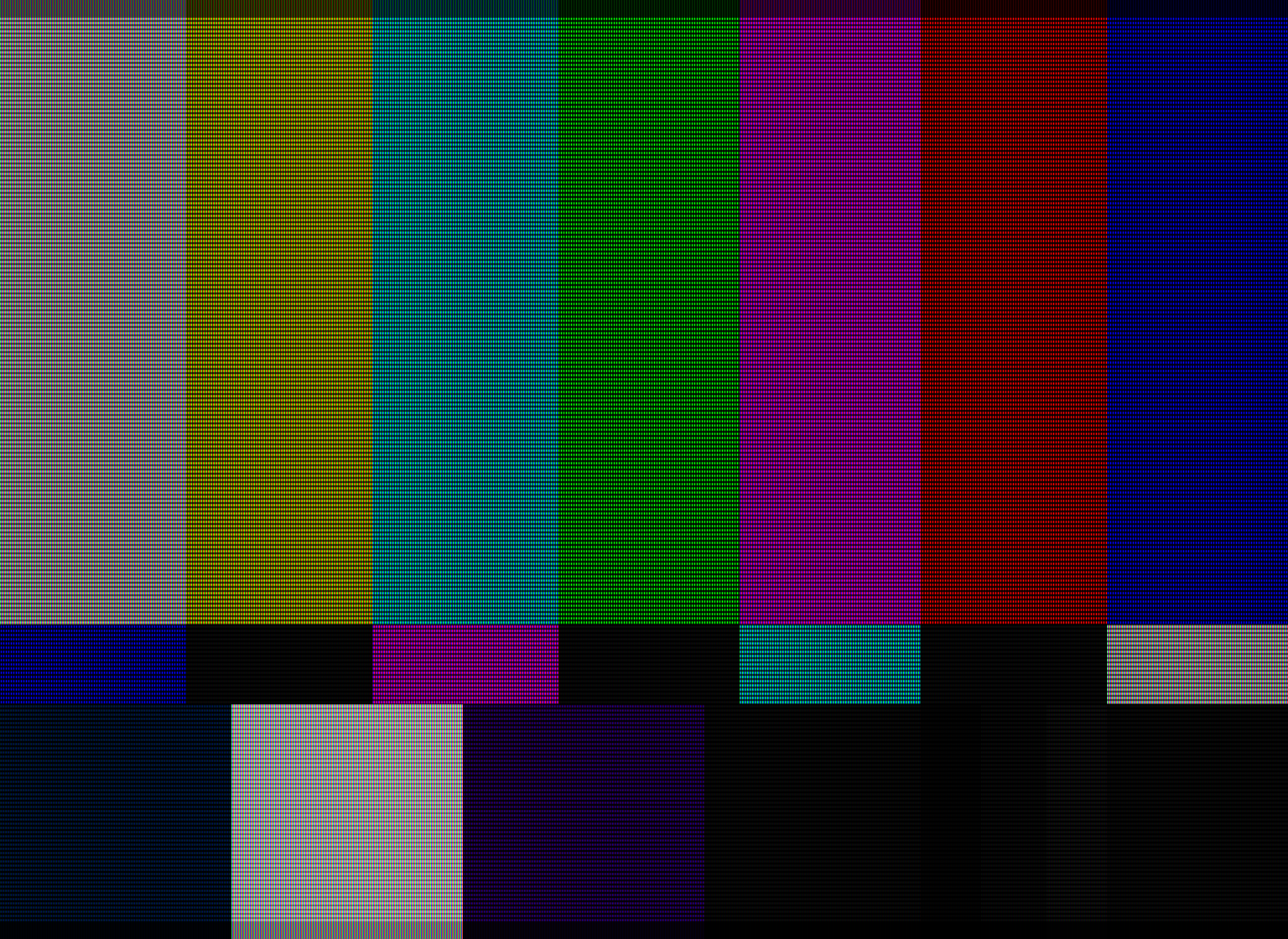

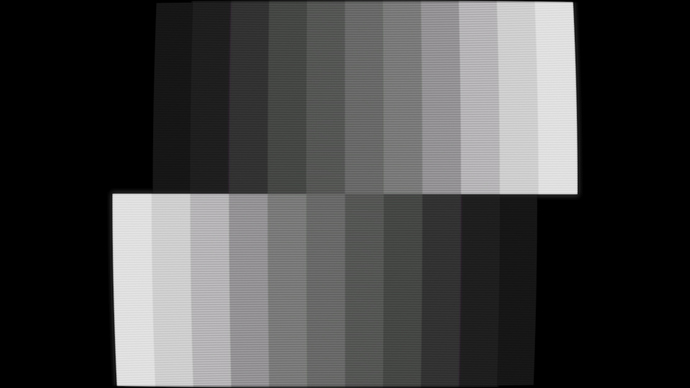

Now let's see those test patterns from Fudoh's 240p test suite. Then we can talk about something other than opinions.

And sure, I can be more politically correct. Also I think this new one should actually start with an earlier post, this one