No, the reason we're seeing higher relative power consumption on the Radeon cards is that we're comparing last-gen AMD to current-gen nVIDIA. Last-gen nVIDIA GPUs, built on Samsung's 8nm process, were actually more power-hungry and less power-efficient than the Radeon RX 6000 series, built on TSMC's superior 7nm process.

Why do you think nVIDIA had to design such an exotic cooling solution for the RTX 3000 FE graphics cards?

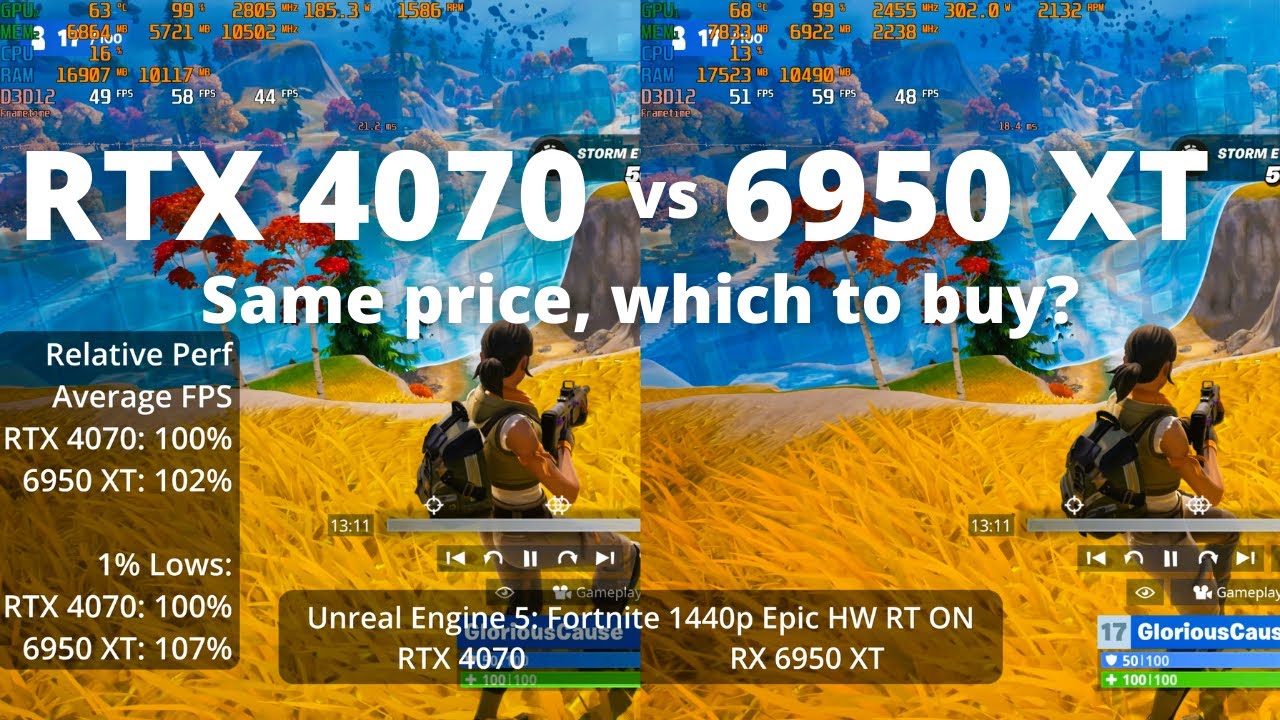

Who says 6000 series GPUs cannot do RayTracing? That's not true. RayTracing performance per tier is lower on AMD, but a higher-tier RX 6000 graphics card like the Radeon RX 6950 XT can match a lower-tier nVIDIA graphics card in RayTracing.

In the Hardware Unboxed review I shared above, you can skip straight to the RayTracing results to see that you can do RayTracing on an AMD 6000 series GPU.

@1080p the RTX 4070 was 12% faster on average using RT.

@1440p it was 11% faster, while @4K it was only 2% faster with RT enabled in the titles tested.

In that same video, if you switch to the 4K non-RT results, you'll see that the RTX 4070 is 17% slower on average at 4K than the Radeon RX 6950 XT. Doesn't the user want to use the graphics card for 4K gaming?

At 1080p in non-RT titles, the Radeon RX 6950 XT's lead shrank to 12% on average, while at 1440p it grew to 15%. Notice that as resolution increased, the performance gap between the RTX 4070 and the Radeon RX 6950 XT kept widening in the Radeon's favour. I wonder if that extra 4GB of VRAM could have anything to do with that?

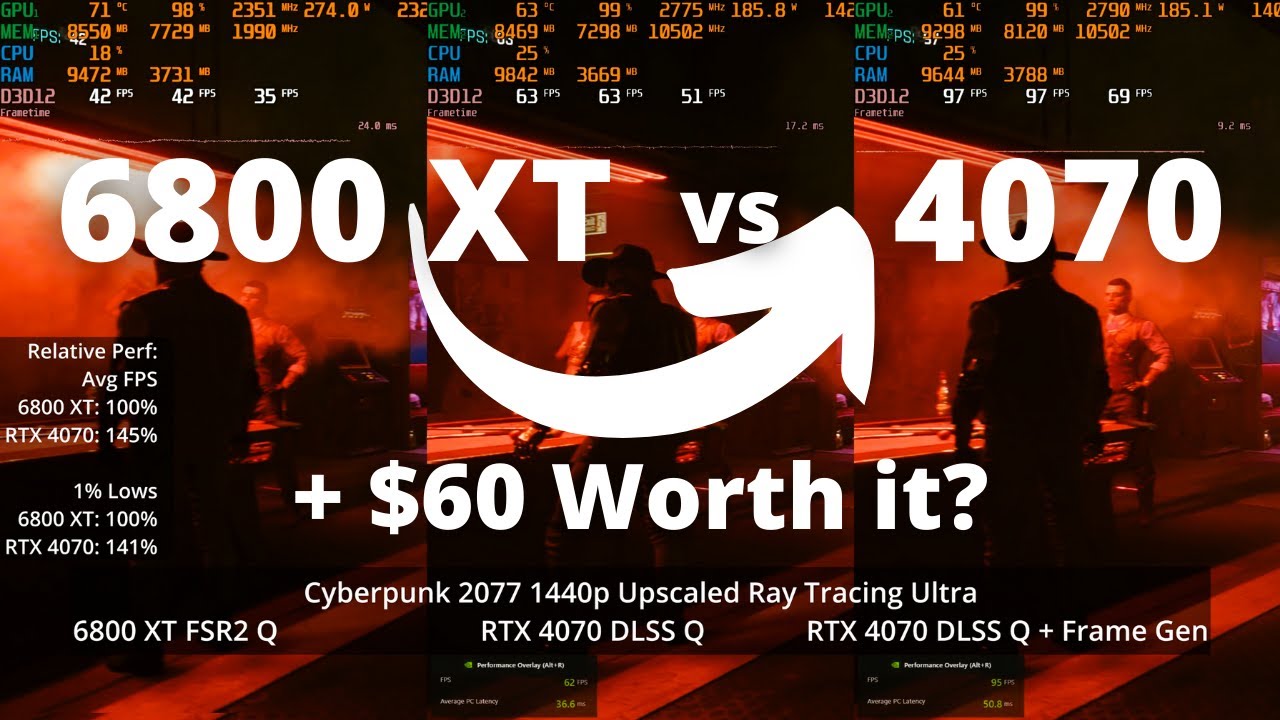

Here is another comparison video.

We got the same price, but for which tier of SKU, and for what level of gen-on-gen performance increase?

I'd say that for most SKUs we're getting a smaller gen-on-gen performance increase at much higher prices.

The 3000 series only looked better on MSRP, or rather less bad, because of how poor the value of the 2000 series preceding it was. In reality, however, what percentage of gamers were able to purchase any 3000 series GPU at anywhere close to MSRP?

Also, while the 3070/3070 Ti matched the previous flagship 2080 Ti's performance at lower resolutions, nVIDIA cut 3GB of VRAM (8GB versus 11GB), leaving 4K performance worse.