NSX, back straight

You seem to be enjoying yourself. Which preset is this?

Once you dial in your specific Peak and Paper White Luminance values, you can use them as a starting point or guide for setting those values for other presets.

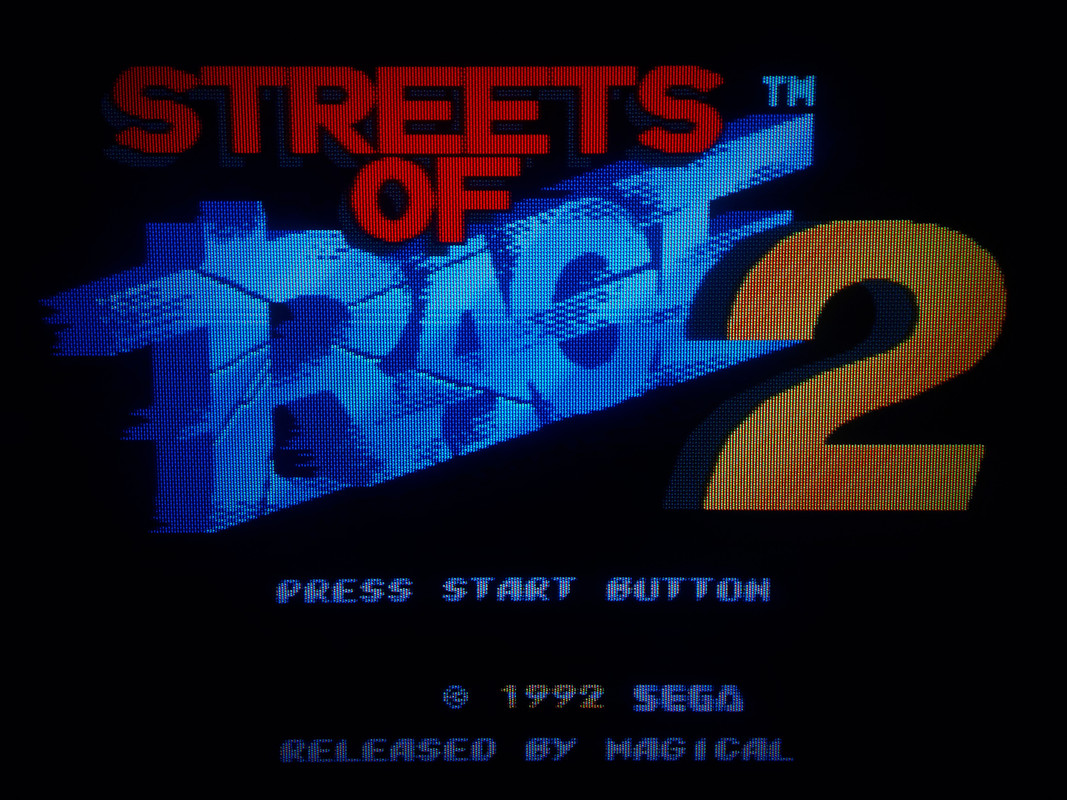

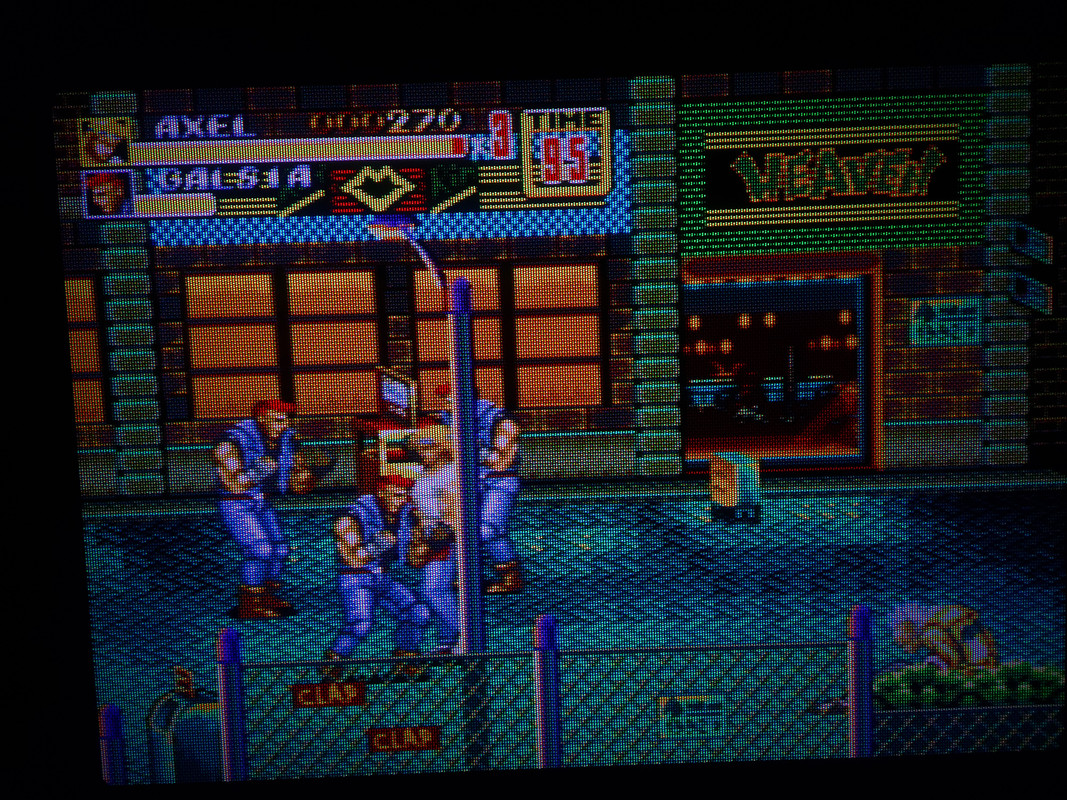

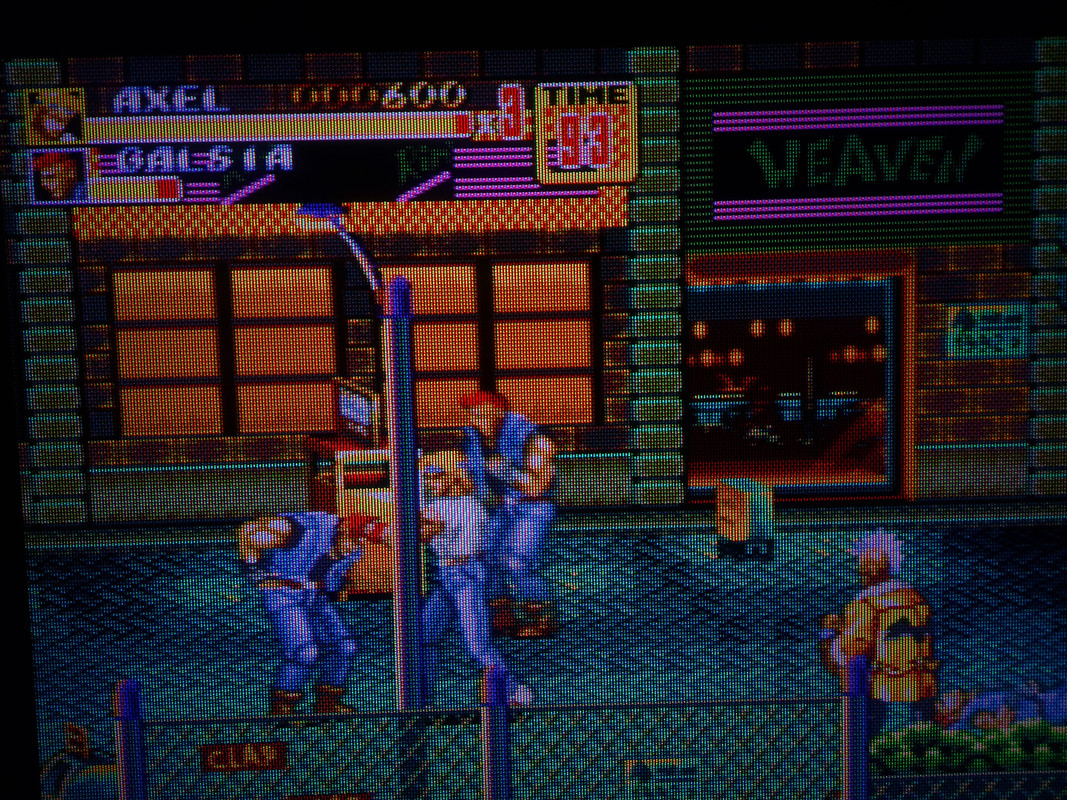

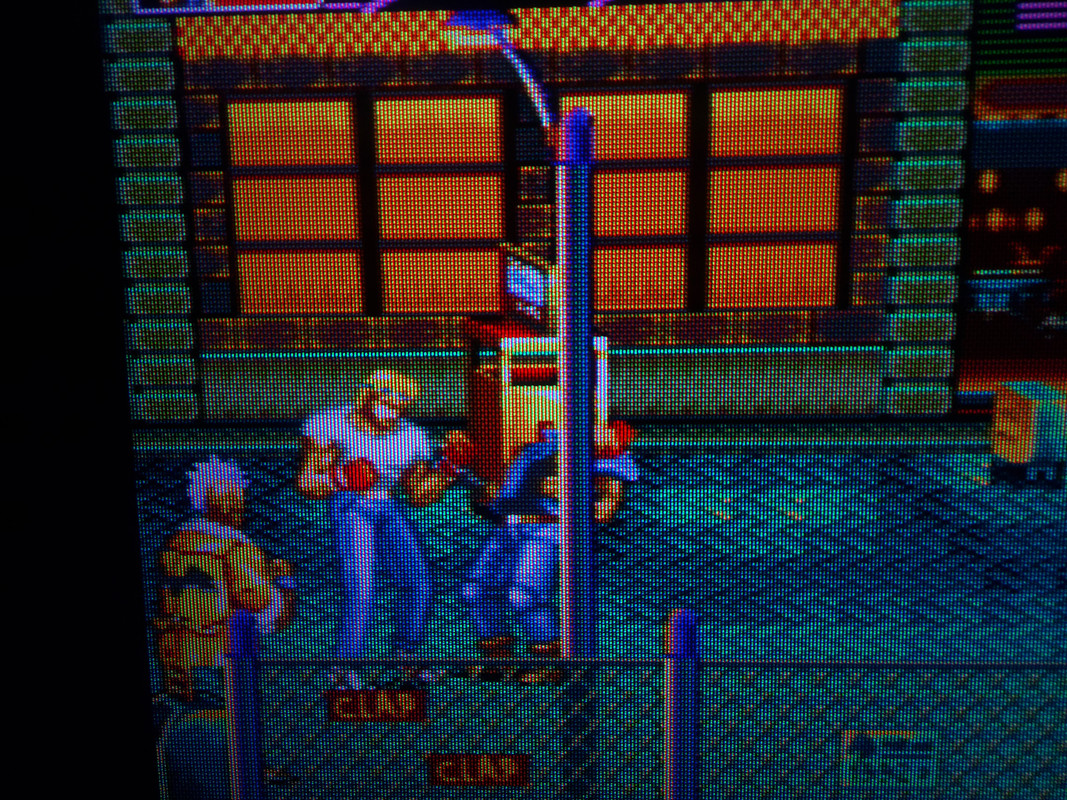

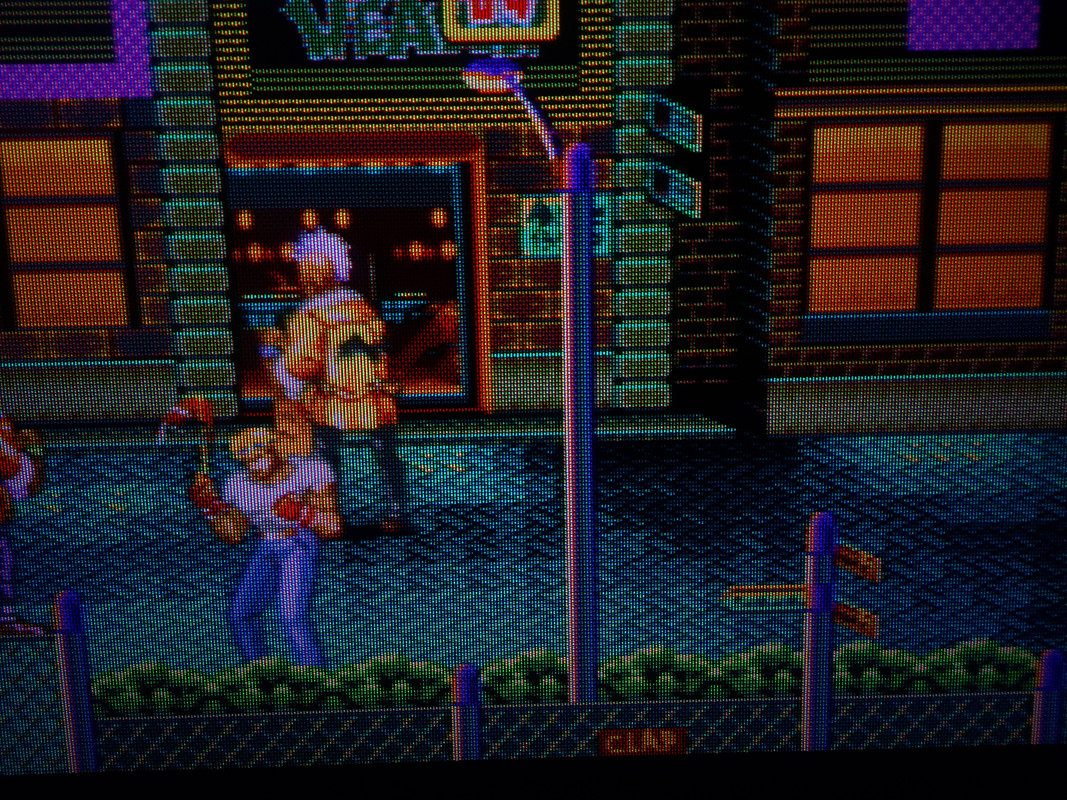

Cyber Lab Megatron miniLED 4K HDR Game BFI PSX S-Video Cyber Tron Sharp Advanced Neo-GX Ultimate.slangp

NTSC-J

How's it look?

Good that you’ve chosen one of the newer presets to work with, and I see that your system is coping fine! Is this the old PC or the new laptop? Right now I’m working on some tweaks to these newer presets, mostly trying to fix mask alignment issues and reconfigure some of the higher TVL Shadow Mask offerings, which show some strange colour artifacts.

So don’t be surprised if you see changes and/or improvements to presets which have looked a certain way before.

By the way, after adjusting a preset to suit your display, it’s a good idea to save a Core, Game or Directory Preset with Simple Presets On. That way, if there are any updates to the preset, they should continue to load seamlessly and your changes to things like the Peak and Paper White Luminance will be preserved.

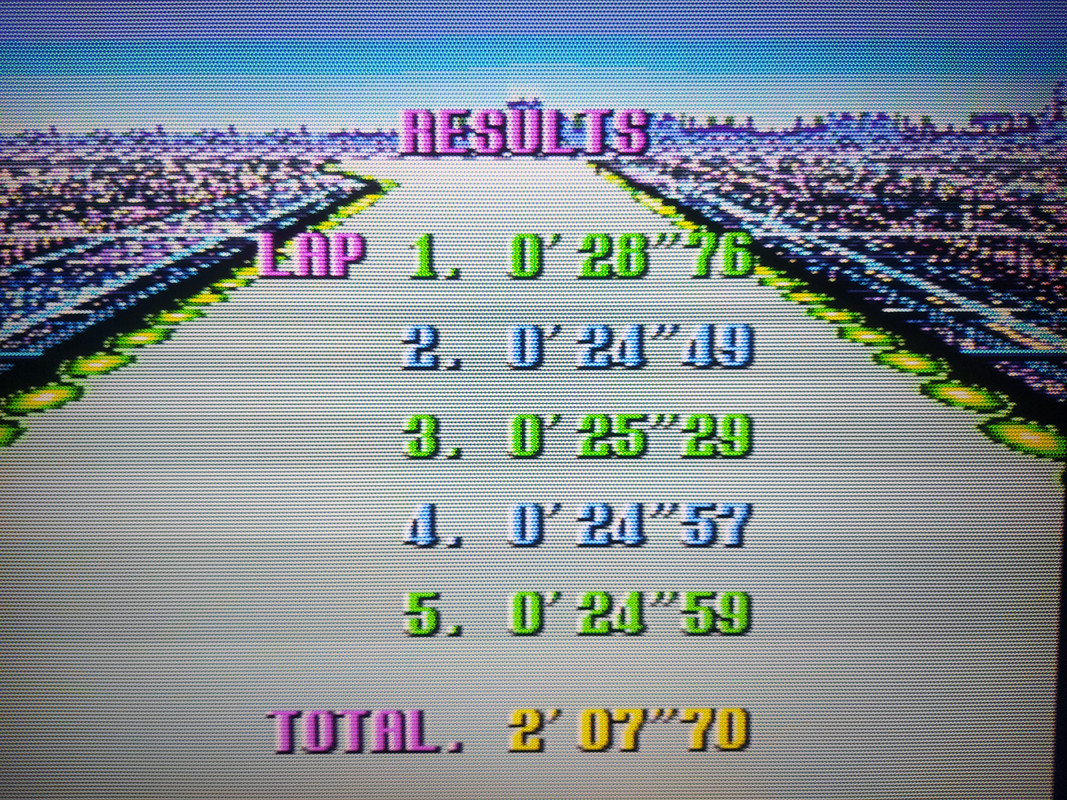

Well, it seems proper. However, it looks like this is a GPU screenshot and not a photo of the screen. Unfortunately, GPU screenshots would look mostly identical on my (or any other) display, so it’s not possible to make valid comparisons or get a good idea of what you’re experiencing unless you take some high-quality photos and then note any differences between the photos and what you actually see on-screen in person.

I should be asking you: what do you think so far?

An old 1050ti laptop I had on hand.

Funny enough, after we talked previously, this cheap 4K Chinese monitor landed in my lap, so I hooked it up to the laptop and gave it a run. It doesn’t reach the recommended HDR brightness suggested in the Sony Megatron thread, but at around 490 nits, and with some parameter adjustments, it looks good enough for being free.

It’s a shame I can’t adjust the parameters that won’t change on my end, like peak brightness and display layout, across a whole suite of the shaders all at once. But your starting points are fine, so all good.

Is there a better way to do it? Or are you talking about setting up a pro camera pointed at the screen and taking a pic that way?

You were my gateway to shaders a while back so this aspect of the hobby is very much out of my wheelhouse. I think after a couple tweaks to peak brightness, display layout, 4K instead of 8K, region etc. they look great.

Well that’s not too bad. My Mega Bezel HDR Ready preset pack would probably work nicely with that but I don’t think the 1050Ti would be able to keep up.

You can try the CRT-Royale Preset Pack and also the SDR to HDR conversion recommendations.

Notepad++ Find (and Replace) in Files can do this.
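For anyone who prefers a script to Notepad++, here's a rough Python sketch of the same bulk find-and-replace. It assumes the Peak and Paper White Luminance parameters appear in each .slangp as lines like `hcrt_max_nits = "700.000000"` and `hcrt_paper_white_nits = "..."`; those parameter names are an assumption about the Sony Megatron shader, so open one of your presets and check the actual names first. It only rewrites parameters a preset already lists and leaves everything else untouched. Back up your preset folder before running it.

```python
import re
from pathlib import Path

# Matches a whole `name = "value"` line in a .slangp file (quotes optional).
# [ \t]* is used instead of \s* so a match never swallows a line break.
LINE_TEMPLATE = r'^([ \t]*{name}[ \t]*=[ \t]*)"?[^"\n]*"?[ \t]*$'


def set_param(text: str, name: str, value: float) -> str:
    """Return `text` with every existing `name = ...` line set to `value`."""
    pattern = re.compile(LINE_TEMPLATE.format(name=re.escape(name)), re.MULTILINE)
    # Keep the original "name = " prefix and write the value in .slangp style.
    return pattern.sub(lambda m: f'{m.group(1)}"{value:.6f}"', text)


def update_presets(folder: Path, values: dict) -> int:
    """Apply `values` to every .slangp under `folder`; return how many files changed."""
    changed = 0
    for preset in folder.rglob("*.slangp"):
        original = preset.read_text(encoding="utf-8")
        updated = original
        for name, value in values.items():
            updated = set_param(updated, name, value)
        if updated != original:
            preset.write_text(updated, encoding="utf-8")
            changed += 1
    return changed
```

Usage would look like `update_presets(Path("shaders/CyberLab"), {"hcrt_max_nits": 630, "hcrt_paper_white_nits": 450})`, where the folder path is only a placeholder for wherever your presets live.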

It depends on what you’re doing now. Was I correct in thinking that the image you posted was a generated/captured screenshot and not a photo of the screen?

I just described how I would go about taking photos of the screen in another thread so here goes:

Now why would you want to interfere with this if you have a 4K display? On a 1440p or 1080p (or 8K) display that might be necessary but do you really want to alter the TVL of my presets which are already optimized for 4K displays?

So leave the parameter @ 8K even if the monitor is only 4K? I thought this was a parameter dependent on one’s personal display. If I’m wrong I’ll leave it alone moving forward.

yup

Precisely. These presets are already optimized for 4K displays so there’s no need to customize the Display’s Resolution or CRT-Resolution (TVL).

That’s only necessary for folks using other resolutions who wish to find appropriate TVLs or consistency at those resolutions.

8K doesn’t really mean it’s for 8K displays only. On a 4K display it just means the TVL is divided by 2, but sometimes other characteristics make choosing 8K over 4K more desirable, like the slot mask height used or, in some cases, scanline/mask alignment.
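To put numbers on "divide the TVL by 2": if a mask is drawn a fixed number of display pixels wide, the TVL it produces scales with the height of the screen it is actually shown on. The helper below is a hypothetical illustration of that arithmetic, not how Sony Megatron computes anything internally, and the heights table assumes standard 16:9 resolutions.

```python
# Standard 16:9 heights in pixels (assumed; adjust for ultrawide or unusual panels).
DISPLAY_HEIGHTS = {"1080p": 1080, "1440p": 1440, "4K": 2160, "8K": 4320}


def effective_tvl(listed_tvl: float, resolution_setting: str, actual_display: str) -> float:
    """Approximate TVL seen when a mask sized for one resolution runs on another.

    Assumes the mask pattern is a fixed number of display pixels wide, so the
    number of mask repeats per picture height scales with the screen's height.
    """
    scale = DISPLAY_HEIGHTS[actual_display] / DISPLAY_HEIGHTS[resolution_setting]
    return listed_tvl * scale
```

So `effective_tvl(600, "8K", "4K")` gives 300.0, matching the rule of thumb, while a preset using the 4K setting on a 4K display stays at its listed TVL.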

https://www.reddit.com/r/crtgaming/s/cCtMYLGzYz

https://www.reddit.com/r/crtgaming/s/yZp5OEItE2

https://www.reddit.com/r/crtgaming/s/OFr1x26rBn

https://www.reddit.com/r/crtgaming/s/ykdy52JsXV

These are from my brand new CyberLab Megatron miniLED Epic Death To Pixels HDR Shader Preset Pack

Be sure to click/tap on Download Original Image to view them at full fidelity.

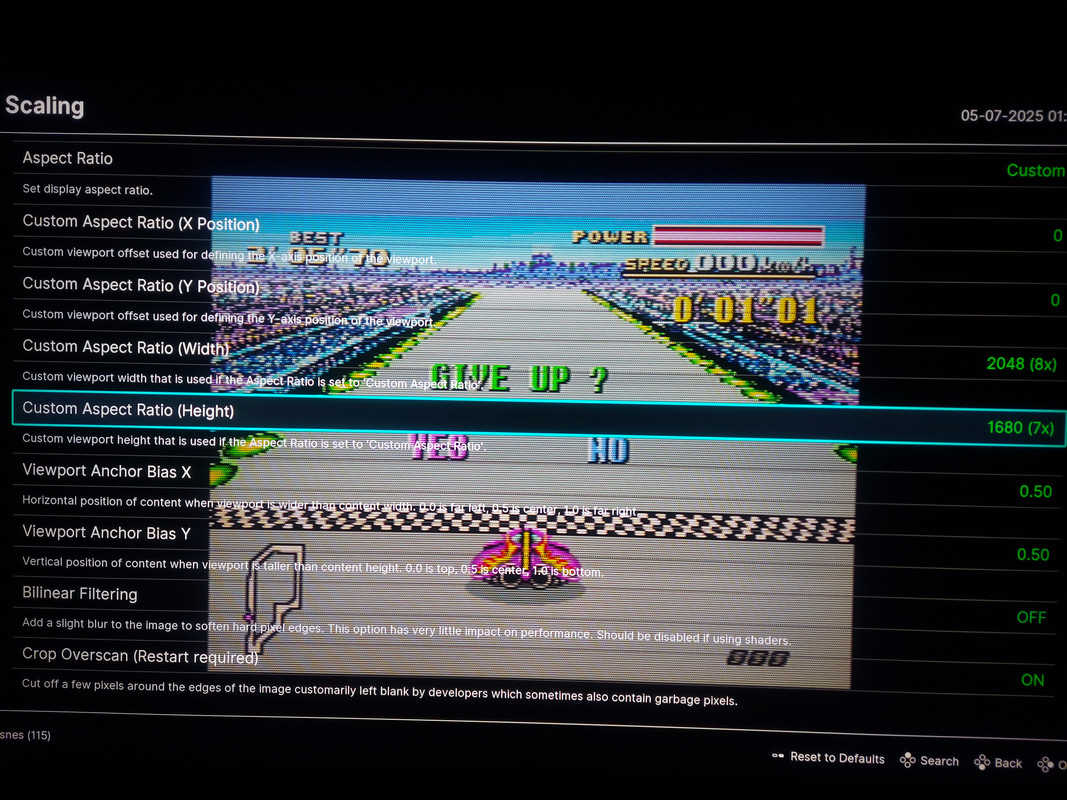

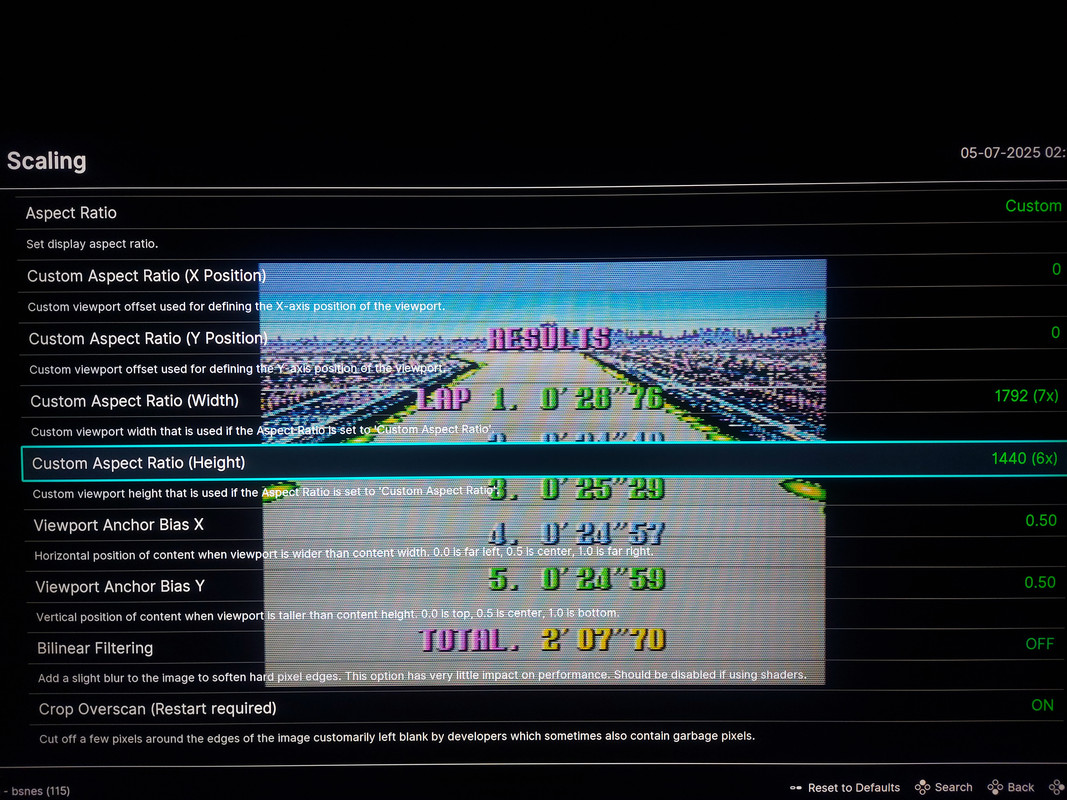

For a long time now, I’ve noticed while experimenting with lower scale factors that sometimes when the viewport is smaller the image looks more authentic and has a special vibrancy and sharpness to it which looks and feels better and more CRT like to me.

I’ve noticed every time I’ve switched back and forth between larger and smaller scale factors that there seems to be a sweet spot somewhere that may not necessarily be the largest possible image that the screen can display.

Then the possible reasoning behind this suddenly occurred to me. A lot of the improvement has to do with additional brightness output by the display when the viewport (window size) is smaller.

This leads me to believe that the higher-tier miniLED TVs on the market today, for example the TCL QM851G, QM8K and the Sony Bravia 9, might be some of the best-equipped displays for CRT emulation.

I have a TCL QM751G which is bright in its own right but I can imagine and get a taste of how useful the additional brightness headroom provided by the higher tier models might be. Not to mention the benefits of their increased dimming zones.

The brightest WOLED TVs, for example the LG G4 and G3, might also fare well, while the LG G5 and Panasonic Z95B might need some testing and verification to see whether current CRT shaders need updating in order to support their novel subpixel layouts.

Note: One possible downside to using different scale factors is that it might interfere with the scanline and mask alignment.

CyberLab Megatron minLED 4K HDR Game BFI SNES 3D Comb Filter Shadow Mask Epic.slangp

https://postimg.cc/gallery/8CCLJfz

https://postimg.cc/gallery/yzz9B7x

CyberLab Megatron minLED 4K HDR Game BFI Genesis 3D Composite Slot Mask Epic.slangp

Hello Cyber. First of all thank you for your amazing work in trying to make retro gaming feel as authentic as possible.

I’ve been trying out and playing with shaders for a while now and decided to stick with yours.

To further enhance my experience and gather some knowledge in the process I have a set of questions which you’re hopefully able to answer. I hope they’re not too many.

Info: I play docked on the Steamdeck with 4K HDR output through Retroarch to my Sony A8 OLED. I mostly stick to your Mini LED packs.

-

It says BFI in the shader names and I know it stands for Black Frame Insertion. Is BFI actually necessary for these to look as intended? RetroArch through EmuDeck doesn’t support BFI yet AFAIK, and my TV isn’t capable of 120Hz anyway. I thought they mostly look good without it.

-

I sometimes have a hard time deciding between these very distinct names: Fine, Sharp, Ultimate, Ultimate Fine Advanced. You get the gist. When I get up close I CAN tell the difference, but I’m not always sure WHAT it is. Sometimes the mask density is higher. Is it emulating different quality TVs?

-

Often the shader names already have the name of the mask in them. When I then go into parameters I can change the mask though. When I go into the shader parameters of a shader with “slot mask” in the title, is it advised to also change the setting to slot mask or can I change it to Aperture grill if I prefer?

-

I realized most of these have 4K in the title but out of box without adjusting parameters they always seem to be at 8K by default in the screen resolution parameters, so the masks look way too large and I can see the individual RGB pixels from afar, as each color is made of 16 pixels instead of 4 then. I manually have to set them to 4K.

-

I’m not quite sure what to set my Peak and Paper White Luminance to. I found out that the peak luminance for my TV (Sony A8) is 650 nits, but there’s no info on the internet about paper white luminance. I saw a RetroCrisis video where he set his to 50, but the image is just way too dark then.

-

Why are they actually divided by consoles? I get why they’re divided by cable signals for example, but some of the SNES titled shaders give me the see through Sonic waterfall just as the Genesis titled shaders. What’s the reason behind that?

Thanks in advance, I’m happy to learn.

Welcome @IlMonco, and thanks for your interest and these great questions.

I know everyone calls them shaders but I mainly make Shader Presets which are settings for shaders made by other awesome people. So feel free to thank the shader devs as well because without them, there probably wouldn’t be any CyberLab Shader Preset Packs.

No, that’s really a note to help me remember the settings used when creating the presets. Compensating for BFI when making a preset gives a very different result than if BFI was off during the process, but you don’t have to use it.

I make so many presets and am always evolving them that I probably couldn’t tell you the difference either, but sometimes when I come back to improve or adjust something I go into experimental mode. That does not mean that there was something wrong with what I did before, so the previous good preset is usually kept and the new preset is simply a variation, which needs a new filename in order to differentiate it. Hmmm…how do you differentiate such minor or evolutionary changes? I tried I, II, III etc. but got tired of it, so now we have this hyperbolic nomenclature.

Don’t take it too seriously. Most of these presets are self-contained, so you can go through them one by one and delete the ones you don’t like or make note of the ones you do. I just made a new preset pack called Epic. I’m going to try my best to only include my best of the best presets and not clutter it too much, but I’m not making any promises…lol

I don’t think I’ll ever stop adding and making presets as long as I am able to.

In general the longer and more extreme the name the more refined the preset. In some cases you can look through the thread and see where I posted screenshots of photos and the names of presets and you can try those.

You can also try to stick to the newer presets as newer ones tend to be more refined. I’m always noticing stuff that can be improved and learning as I go along but not all the time a new preset or pack means that the old ones are obsolete or irrelevant. Sometimes, I perfect then I start over or try something different or new and I come up with a new naming scheme or new preset pack to reflect that fresh start.

Some of the terms have meaning though.

Fine means a higher TVL or smaller pitched mask features (aka higher mask density).

Sharp usually means, no (edge) smoothing is applied.

Advanced is a new generation of presets which have additional features like noise, rounded corners and the ability to crop overscan or garbage from within the shader parameters.

Ultimate presets are supposed to be more refined than vanilla presets, but sometimes I refine an already Ultimate preset. Hmmm…Ultra Ultimate? Ultimate Ultra? Mega for Megadrive/Genesis? Super for SNES? Turbo for Turbo Duo/TurboGrafx-16/PC Engine?

Do you see where this is going now?

You shouldn’t have to change a shader with “Slot Mask” in the title to Slot Mask in the Shader Parameters. That’s a bug or typo if you see something like that and of course you can change it to whatever Mask type you prefer, although I kinda already do that for most presets and I compensate for the differences in certain characteristics which happen when you switch Mask types. This is also one of the reasons why there are so many presets, for every preset there can be at least 3 versions, 1 for each Mask Type to start with.

This is not a bug, this is a feature. 8K and 4K just allow different Masks to be selected, which would give the approximate TVL that’s listed in the Resolution parameter, provided that you run the preset at the selected display resolution.

However, you can find that a mask with a different subpixel layout that is only available using the 8K setting looks better than a mask of a slightly different or similar TVL that’s available using the 4K Display’s Resolution. Sometimes the Mask height might be more appropriate for the 8K mask Vs a similar but not identical mask in the 4K set. I first started using 8K when I noticed that the centre of the Slot in one of my Slot Mask presets wouldn’t align exactly with the center of the scanline but the 8K mask lined up exactly!

In more recent times I noticed that many of the 8K masks that I liked used RYCBX instead of RGB and although they generally looked almost exactly like what I wanted, that additional subpixel bothered me so in the latest addition of my Epic preset pack, I modified all of them to use RGBX/RBGX/BGRX masks instead.

It’s still under the same 8K setting.
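To make the RYCBX vs RGBX difference a bit more concrete, here's a small Python sketch that expands a mask pattern string into which primaries each pixel column lights. The letter meanings (Y = red + green, C = green + blue, M = red + blue, X = black) are an assumption about the naming; this is purely illustrative and not how the Sony Megatron shader stores its masks.

```python
# Assumed meaning of each mask letter as (red, green, blue) on/off flags.
CHANNELS = {
    "R": (1, 0, 0), "G": (0, 1, 0), "B": (0, 0, 1),
    "Y": (1, 1, 0), "C": (0, 1, 1), "M": (1, 0, 1),
    "X": (0, 0, 0),
}


def expand_mask(pattern: str, width: int) -> list:
    """Repeat `pattern` across `width` pixel columns, one RGB triple per column."""
    return [CHANNELS[pattern[i % len(pattern)]] for i in range(width)]


def mixed_columns(pattern: str) -> int:
    """Count columns that light more than one primary at once (e.g. Y and C)."""
    return sum(1 for letter in pattern if sum(CHANNELS[letter]) > 1)


for mask in ("RGBX", "RBGX", "BGRX", "RYCBX"):
    print(f"{mask}: period {len(mask)} px, mixed columns: {mixed_columns(mask)}")
```

The RGBX-family masks repeat every 4 pixels with each column lighting a single primary, while RYCBX repeats every 5 pixels and two of its columns light two primaries at once, which is one way to read the "additional subpixel" mentioned above.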

You can use the TVL settings while using any of the Display Resolution settings to adjust the size of the Mask features. You don’t have to switch to 4K to do that. You can’t easily adjust the height of the Mask in Sony Megatron Colour Video Monitor though. That seems to be fixed. Which is why I choose to use 8K over 4K in some cases. You complained about the Mask looking way too large but did you compare the height of the 4K and 8K masks? The 4K slot masks are taller than the 8K ones.

So you can have a mask that’s just as chunky using the 4K setting if you lower the TVL.

These things are just a guide and a starting point for setting the shader up. It varies according to your Picture Mode, Tonemapping settings etc.

You really have to use your eyes to dial these in perfectly. Too much brightness leads to harsh looking highlights and oversaturated, clipped colours.

Too little and it leads to darkness and very inaccurate looking colours.

The trick is to use the reported Peak Brightness/Luminance to get a close enough figure for your Peak Luminance, then crank up the Paper White Luminance until it looks bright and good to you without going overboard and clipping all the details. The number doesn’t matter. How it looks matters.

I don’t know how he arrived at a value of 50 for his Paper White Luminance for any TV. The vast majority of his videos are excellent, while a few might sometimes be a bit oversimplified, or it’s possible that he may not have fully understood what I was trying to convey in explaining how to go about setting up the shader or presets.

As you can see, I’m the opposite in that I try to provide as much detail as possible in my explanations, but this doesn’t always work for the masses. What RetroCrisis does is very much appreciated at the end of the day. Maybe that setting of 50 is just what he needs for his display, but it’s definitely not right for anyone who owns an OLED TV of any kind.

On my OLED I used 630 Peak and 630 Paper White Luminance. For my "Near Field" presets, I used 630 Peak and 450 Paper White.

If you have a similar WOLED based OLED TV, I don’t see your settings being vastly different.

Because different consoles have different output chips and circuitry even among the same generation and family. There are several variations of Sega Megadrive/Genesis hardware and each would look different on the same composite cable.

How would I capture these differences if I used one preset for all variants within the family?

Well there’s dithering in SNES games too as well as Turbo Duo family games and if blended properly can provide more colours and transparency effects so why not exploit what the software can do to bring forth such elements?

In my presets I try to achieve those things by default most of the time so it’s not necessarily something you’ll read much about or hear me talk about but you’ll definitely see in action when you use my presets.

You’re most welcome. Thanks for the impromptu Q & A and feel free to ask more and also to share more!

Formally announcing my intent to finally (optimistically using the word) ‘upgrade’ from my 9th gen Pioneer Plasma TV to the 2025 Panasonic OLED once it goes on sale next year.

Honestly, motion smoothness is one of the big reasons I’ve stuck with the Kuro Elite for so long, and knowing that OLEDs struggle to preserve the same smoothness is a worry for me. I was also able to calibrate my set to get really close to my HDR laptop with 4K HDR movies using MadVR’s tone mapping conversion. At this age it’s a little scary to anticipate how much work it will be to reach the same levels of content and satisfaction with a new set. x.x It’s more money on GPUs, more worry about FPS, BFI, CRT Beam Emulation, etc. And then Micro LED hopefully comes to save us in a decade afterwards anyway. ;p

Wow! Thank God for the humongous strides made in motion interpolation and judder removal technology!

Based on this you might be very disappointed by the fact that your shiny new tandem OLED TV may or may not work with existing subpixel layouts.

You might actually be a good candidate to be a guinea pig to test and add proper working mask layouts for these new displays?

Why the Panasonic and not the LG G5 though, which is brighter and reportedly has better gaming features?

Pretty much everything they’re saying about the Panasonic over at AVS Forums. I think you watch the HDTVTest YouTube channel too?

Also LG seems like they aren’t even trying when it comes to calibration anymore. (I’m just parroting this off of articles I’ve read though TBH) At this age, I watch more movies than play games.

TBH I don’t understand subpixel layouts. :x My other friend got a Bravia 8 II, but he lives kinda far and isn’t as picky with the shaders as I am, and then I’m not as picky as a lot of people I respect around here. I wish I could loop him into helping you though.

I think you might have a certain affinity towards Panasonic and if so, there’s nothing wrong with that. Do enjoy your TV when you get it!

Yes, I look at that from time to time as well as many others. Note that HDTV Test arrived at a different result in the Overall Best TV of 2024 compared to the Value Electronics Shootout by Stop The FOMO and Brian’s Tech Therapy. So don’t be surprised if they arrive at a different result this year as well.

One thing about LG is that they tend to support their flagship TVs for a very long time, so it’s very likely that anything that might be off with that particular model will be rectified in due course.

This is what actual owners have had to say about the G5:

https://www.reddit.com/r/LGOLED/s/fR5z0ZcYvg

I’m sure there’s an explanation here somewhere but I sent you a Link to the “OLED Subpixel, How Do They Work?” Thread so you might be able to learn a little from there.

You might have heard in the past that a pixel is the smallest element of a digital image or display. However, each of those pixels is made up of smaller elements of individual colour called subpixels. The combination of the subpixel colours determines the final colour of each pixel.

What we try to do here is allow these subpixels to be analogous to the phosphors on a CRT. However, CRTs mostly had their phosphors in an R-G-B layout, in that order, but only some modern displays share that subpixel layout, so we may not always be able to map to a CRT’s R-G-B phosphor layout 1:1.

Depending on the layout of the display, we might use BGR or RBG instead.

QD-OLED is out of the question because, with the shape of its subpixel layout, it wouldn’t be able to map 1:1 with any CRT layout.

So it took a while before we figured out how to get acceptable phosphor to subpixel mapping on WOLED TVs. With this new RGB tandem OLED, the layout is different so either one of the other existing mappings might work or we might need to create a new mapping.

Hi Cyber,

If we are stuck with a QD-OLED monitor for now (I’m going to trade it in for one of those new AOC Mini LED for better HDR experience eventually), what are the best settings to change to mitigate the subpixel mismatch issue?

Something else that happened, when I updated RA from 1.20 Stable to 1.21 Stable, it broke a lot of your shader presets, specifically the 4k HDR presets. Like, distorted colours, blurry, etc. I tried installing 1.20 again but they were still broken…Weird issue, might be just me (I also get constant crashes upon game load on 1.21 with certain cores now), just thought to bring it up in case others have it.

Thanks!

Pioneer, and Sony actually. I guess I had a Panasonic VCR back in the 90s??? But I’m just not big on QD-OLED with its fringing and magenta push. I definitely disliked that on the QD-OLED PC monitors I’ve tried. Samsung forcing exaggerated saturation and avoiding Dolby Vision is an instant disqualifier ofc. (I keep accidentally winding up with DV-encoded files, which makes it a desirable feature.) Sony has better SDR color accuracy and apparently its processors do more accurate upscaling, but I just watch media files from my PC so…I’m not hooking a cable box or an NES up to it or anything like that. Maybe the 2026 Sony won’t be using a QD-OLED panel and then I’ll wait another year for its sale, but I feel like they’re sticking with QD.

When it comes to speakers the Z95B’s 170W 5.1.2 sound difference is no comparison, I presume to help people avoid needing a separate soundbar purchase? But I use an external 5.1 speaker setup anyway so that’s not factoring in…

But because you brought it up, I went and checked the game modes; what am I missing here? 12.6ms input lag on the Z95B, 12.9ms input lag on the G5… FPS/RPG settings in Sound Mode…and a 60Hz mode that pushes input lag down to 8.6ms. CRTs have 8.3ms input delay. Apparently the Z95B can further reduce input lag to 4.8ms with 120Hz signals.

I tried to go find the comparable interface for the G5 and I just find tons of videos saying it’s cooked for gaming. u.u I’m not educated on that, so DYOR.
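For context on the 8.3ms CRT figure: one common way to arrive at it is half of a 60Hz frame, assuming lag is measured at the middle of the screen, since even a zero-processing CRT has to scan halfway down to get there. That measurement convention is an assumption here, so check how each reviewer measured before comparing numbers directly.

```python
def frame_time_ms(refresh_hz: float) -> float:
    """Duration of one refresh cycle in milliseconds."""
    return 1000.0 / refresh_hz


def mid_screen_scanout_ms(refresh_hz: float) -> float:
    """Time for a top-to-bottom scan to reach the vertical centre of the screen.

    Assumes lag is measured at mid-screen and the display adds no processing,
    which is roughly the situation for a CRT.
    """
    return frame_time_ms(refresh_hz) / 2


for hz in (60, 120):
    print(f"{hz}Hz: frame {frame_time_ms(hz):.2f}ms, mid-screen floor {mid_screen_scanout_ms(hz):.2f}ms")
```

By that yardstick, and if the quoted figures were measured the same way, 8.6ms at 60Hz is only about 0.3ms above the 8.33ms floor, and 4.8ms at 120Hz is about 0.6ms above the 4.17ms floor.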

HDTVTest explained what True Game Mode is on the Z95B here:

This is what @MajorPainTheCactus had to say about QD-OLED subpixel CRT mask emulation:

Just be careful and do your research, because not all miniLED displays are the same and there are many factors that can make or break the experience.

There’s no perfect TV for CRT Shader emulation yet in my opinion. Let’s hope you don’t miss the advantages of OLED when you “upgrade”.

I’ve been using 1.20 and then 1.21 for ages now. I doubt that would have broken my presets.

What might “break” presets or rather cause them to look differently than intended is if you updated your Slang_Shaders using the Online Updater without first backing up and then restoring the relevant/required versions of the shader for the particular preset pack.

If you read near the download links to the packs in the first post, it specifies these things in the installation instructions.

For my Mega Bezel Preset Pack you need Mega Bezel 1.14.0.

For my Sony Megatron Colour Video Monitor Preset Packs the version of CRT-Guest-Advanced is also specified.

Only my very first Sony Megatron Colour Video Monitor should be affected by an Online Update of the Slang Shaders though as all my other Sony Megatron preset packs look for the Guest Advanced shader in a different folder from its default installation folder.

From my Sony Megatron W420M Preset pack onwards, there’s no need to separately install the CRT-Guest-Advanced shader because it’s included.
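Backing up before using the Online Updater can be as simple as copying the shader folder somewhere safe. Here's a minimal Python sketch that makes a timestamped copy; the folder names in the usage example are placeholders, so point it at wherever your RetroArch install actually keeps its Slang shaders.

```python
import shutil
from datetime import datetime
from pathlib import Path


def backup_folder(src: Path, backups_root: Path) -> Path:
    """Copy `src` into a new timestamped folder under `backups_root`.

    shutil.copytree creates the destination (and any missing parents) and
    refuses to overwrite an existing folder, so an older backup is never clobbered.
    """
    stamp = datetime.now().strftime("%Y%m%d-%H%M%S")
    dest = backups_root / f"{src.name}-{stamp}"
    shutil.copytree(src, dest)
    return dest
```

For example, `backup_folder(Path("RetroArch/shaders/shaders_slang"), Path("RetroArch/shader_backups"))`, where both paths are hypothetical. After updating, copy the required shader versions back from the backup.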

I’m not sure why your games would be crashing. Perhaps you might need to also update your cores or do a roll back of the cores that no longer work as well.

As always, sharing a log file using Pastebin will help with getting to the bottom of these kinds of issues.

Also, if you want to downgrade RetroArch, you first need to rename or delete the retroarch.exe file.
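That step can also be scripted. This is a hedged sketch: the install folder is a placeholder, and renaming to `retroarch.exe.old` is just one choice (deleting the file works too).

```python
from pathlib import Path


def set_aside_executable(install_dir: Path, exe_name: str = "retroarch.exe") -> Path:
    """Rename the current executable to `<name>.old` before installing an older build."""
    exe = install_dir / exe_name
    target = exe.with_name(exe_name + ".old")
    # Refuse to overwrite a previously set-aside executable on any platform.
    if target.exists():
        raise FileExistsError(f"{target} already exists; move it out of the way first")
    exe.rename(target)
    return target
```

Run it against your RetroArch folder, then install the older RetroArch build as usual.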